RHEL + ZFS - The Real TrueNAS Upgrade

It's been about 2 months since I "upgraded" from TrueNAS Core to TrueNAS Scale, and to be completely honest, it's been a complete disaster. I gave it the old college try, and ultimately I just found TrueNAS Scale to be a mess. I would best describe my experience using TrueNAS Scale as a "dumpster fire". The CSI drivers are buggy and unreliable, even more so if you use the API drivers. A lot of basic functionality that used to be part of TrueNAS Core was ripped out and replaced with "apps". The "Apps" feature on TrueNAS Scale is essentially a Rancher K3s cluster and applications are deployed via custom helm charts. On paper, that doesn't sound so bad, but in practice, the apps are frequently broken or just flat out won't install, and getting them to bind to the correct interface(s) is nigh impossible. In my experience, I went from TrueNAS Core which was always rock solid and reliable, to the point where I literally almost never even thought about my storage, to a platform that is so unstable and unreliable that I can't in good conscience recommend anyone even attempt to use TrueNAS Scale. Outside of the absolute bare minimum of being a decent NFS server on top of solid ZFS storage, TrueNAS Scale is an absolute sh** show. The last straw for me though, was after running the MinIO app for a few weeks, the buggy k3s cluster corrupted my S3 dataset and caused all kinds of havoc, including completely breaking iSCSI storage. I honestly don't really understand how the MinIO app could affect the iSCSI service, but it did. Among the casualties in the data loss was my Primary Domain Controller. Not cool.

I ran TrueNAS Core since FreeNAS 8.x and other than having to replace a failed disk every once in a while, I've literally never had a single problem with it. I didn't even make it 2 months on TrueNAS Scale before I ran into a catastrophic data loss event, and this was on a ZFS pool of all brand new SSD and NVMe drives. To say I'm disappointed would be the understatement of the century.

After losing my primary domain controller, Active Directory is limping along for now on the secondary, but the DNS service is down on the domain and is non-recoverable, which means I'm looking at a full redeployment. That was the last straw for me, and I started looking at alternatives. One option of course would be to just roll back to TrueNAS Core, but I don't want to do that because iX Systems has announced that they're sunsetting TrueNAS Core by 2025 and TrueNAS Core 13.x will be the last version released. Not willing to leave something as integral to my lab as my NAS on abandonware, I needed another option, ideally one that wouldn't require me to abandon ZFS. I did briefly consider rolling back to Ceph, but I'm not a huge fan. First, I just went to great lengths to migrate all my data off of Ceph and onto TrueNAS, so I really don't want to have to go back. Second, ODF (Openshift Data Foundation) is a resource hog, and I don't want to use it anymore if I don't have to.

I did a little research and testing and the solution I ended up landing on was ZoL (ZFS on Linux) on RHEL. This allows me to keep my zpools intact, and gives me a path to natively supported services like MinIO. It'll just take a little extra work playing musical chairs with my data, in particular my PVCs. I'm waiting on some new disks to replace the spinners that have some bad sectors on my secondary TrueNAS box. I am currently using it as a replication target for the main zpool on my primary TrueNAS server. The plan is to wipe all of the disks, build a new RAIDz1 zpool, and redeploy the server as either bare metal RHEL or a Single Node Openshift cluster with a RHEL VM set up with disk passthrough. I'm leaning towards just plain bare metal RHEL for simplicity's sake, but considering how underutilized this hardware is (it's an old Mac Pro 5,1 with 12c/24t and 128GB of RAM) I may want to deploy it as a SNO cluster. Managing VMs is just a bit easier to do on Openshift than it is on RHEL + KVM. Not that it can't be done - cockpit-machines has come a long way - but I found that when I was still running Ceph, it was just easier to manage with Openshift.

Due to the age of the CPUs on these old Mac Pros, I'm stuck with RHEL 8. The latest version of RHEL 9 (9.3 at time of writing) does not install on this hardware due to missing CPU instruction sets. That being said, RHEL 8 will continue to be supported for several more years, with maintenance support ending in May of 2029, which is realistically longer than the expected service life of the old NAS servers. This may also be a limiting factor for Openshift. The version of RHCOS for OCP 4.15 is based on RHEL 9, so OCP 4.14 may be the last version of Openshift that can run on these old Mac Pros. I haven't tried deploying OCP 4.15, and probably won't bother.

Either way, RHEL 8 is going to be the thing handling storage and sharing protocols.

Preparing the system for ZFS

Installing ZoL (ZFS on Linux) on RHEL is actually pretty straightforward. It would be nice to have out of the box support for ZFS on RHEL, but due to incompatible licensing schemes, RHEL can't ship with ZFS. Adding it to an existing system is easy, though. Just follow the instructions here:

subscription-manager repos --enable codeready-builder-for-rhel-8-$(arch)-rpms

dnf -y install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

dnf install https://zfsonlinux.org/epel/zfs-release-2-3$(rpm --eval "%{dist}").noarch.rpm

dnf config-manager --disable zfs

dnf config-manager --enable zfs-kmod

dnf install zfs

echo zfs >/etc/modules-load.d/zfs.conf

modprobe zfs

# create the zpool

zpool create pool0 raidz1 /dev/sda /dev/sdb /dev/sdc /dev/sdd

# optionally, add some vdevs for cache and log

zpool add pool0 log /dev/nvme0n1

zpool add pool0 cache /dev/nvme1n1

# enable deduplication and compression

zfs set dedup=on pool0

zfs set compression=lz4 pool0

# view your new zpool

zpool status

Now with our zfs pool created, we can start exposing services on our server like NFS and iSCSI. But first things first: you're either going to need to create some firewall rules and tune some things with SELinux, or (if you're lazy like me) just disable both. I don't feel like handling dozens of firewall rules and fiddling with setroubleshootd every time something throws an AVC denial.

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

reboot
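If you'd rather leave firewalld running, the list of rules for the services in this post is actually fairly short. Here's a minimal sketch, assuming the predefined firewalld service names (nfs, mountd, rpc-bind, iscsi-target, https, cockpit) fit your setup, plus port 9443/tcp for the S3 reverse proxy configured later on. The function name is my own, and it only prints the commands so you can review them before running anything.

```shell
#!/usr/bin/env bash
# Print (do not run) firewall-cmd commands for the services used in this post.
# Assumption: the predefined firewalld service names below match your setup.
firewall_rules() {
  local svc
  for svc in nfs mountd rpc-bind iscsi-target https cockpit; do
    echo "firewall-cmd --permanent --add-service=${svc}"
  done
  # Non-standard port used for the MinIO S3 reverse proxy
  echo "firewall-cmd --permanent --add-port=9443/tcp"
  # Permanent rules only take effect after a reload
  echo "firewall-cmd --reload"
}

firewall_rules
```

Once the output looks right for your environment, run the printed commands as root. SELinux is a separate matter and isn't covered by this sketch.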

NFS

ZFS on Linux can share datasets via NFS. You just need to install the NFS server, and then enable NFS sharing on the appropriate dataset

dnf -y install nfs-utils

systemctl start nfs-server rpcbind

systemctl enable nfs-server rpcbind

Then with your NFS server up and running, exposing a dataset via NFS is simple

zfs set sharenfs=on pool0/vols

Subsequent datasets under the parent dataset will be automatically added to the NFS exports

[root@stor01 ~]# showmount -e

Export list for stor01.cudanet.org:

/pool0/vols/pvc-8b08ca81-4083-4e86-9793-e48d2121d455 *

/pool0/vols/pvc-0d8434be-28ca-42b8-9378-f072c4e242db *

/pool0/vols *
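If you want to check from a script that a particular dataset really is exported, you can parse the showmount output. This is just a small sketch; the helper names are made up, both helpers read `showmount -e` output on stdin, and paths containing spaces are not handled.

```shell
# List exported paths from `showmount -e` output read on stdin.
# The first line ("Export list for ...") is a header and is skipped,
# as are blank lines.
exported_paths() {
  awk 'NR > 1 && NF { print $1 }'
}

# Succeed if the given path appears in the export list read on stdin.
is_exported() {
  exported_paths | grep -Fxq -- "$1"
}
```

For example, `showmount -e stor01.cudanet.org | is_exported /pool0/vols && echo exported`.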

# on the macOS client

echo "nfs.client.mount.options = vers=4" | sudo tee -a /etc/nfs.conf

Not sure why the NFS stack on macOS is so difficult to work with these days, but at least I got it working again. Also, if you need to mount a specific share and Finder doesn't want to play nice, you can work around that by doing something like

mkdir -p nfs

sudo mount -t nfs -o resvport stor02.cudanet.org:/pool0/nas nfs

iSCSI

iSCSI is even easier to set up than NFS. Basically, you just need to install targetcli and start the iSCSI service

dnf -y install iscsi-initiator-utils targetcli

systemctl start iscsid

systemctl enable iscsid

Democratic-CSI

Ultimately, my main use case for TrueNAS or for RHEL + ZFS is to provide persistent storage to my Openshift clusters, and that is done via democratic-csi drivers https://github.com/democratic-csi/democratic-csi.

It is true that Democratic CSI is primarily targeted at TrueNAS, but it does generally support any generic ZFS, including ZoL on RHEL.

Here are some example YAML files for deploying the helm chart for generic ZoL

NFS:

csiDriver:
  name: "org.democratic-csi.nfs"

storageClasses:
- name: zol-nfs
  defaultClass: false
  reclaimPolicy: Delete
  volumeBindingMode: Immediate
  allowVolumeExpansion: true
  parameters:
    fsType: nfs
  mountOptions: []
  secrets:
    provisioner-secret:
    controller-publish-secret:
    node-stage-secret:
    node-publish-secret:
    controller-expand-secret:

volumeSnapshotClasses:
- name: zol-nfs-snapclass
  parameters:
    detachedSnapshots: "true"
  secrets:
    snapshotter-secret:

driver:
  config:
    driver: zfs-generic-nfs
    sshConnection:
      host: 192.168.60.104
      port: 22
      username: root
      password: "<root-password>"
    zfs:
      cli:
        paths:
          zfs: /usr/sbin/zfs
          zpool: /usr/sbin/zpool
          sudo: /usr/bin/sudo
          chroot: /usr/sbin/chroot
      datasetParentName: pool0/vols
      detachedSnapshotsDatasetParentName: pool0/snaps
      datasetEnableQuotas: true
      datasetEnableReservation: false
      datasetPermissionsMode: "0777"
      datasetPermissionsUser: 0
      datasetPermissionsGroup: 0
    nfs:
      shareStrategy: "setDatasetProperties"
      shareStrategySetDatasetProperties:
        properties:
          sharenfs: "rw,no_subtree_check,no_root_squash"
          #sharenfs: "on"
          # share: ""
      shareHost: "192.168.60.104"

iSCSI:

You'll need to get your system IQN first,

cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:bc10524a297

csiDriver:
  name: "org.democratic-csi.iscsi"

storageClasses:
- name: zol-iscsi
  defaultClass: false
  reclaimPolicy: Delete
  volumeBindingMode: Immediate
  allowVolumeExpansion: true
  parameters:
    fsType: ext4
  mountOptions: []
  secrets:
    provisioner-secret:
    controller-publish-secret:
    node-stage-secret:
    node-publish-secret:
    controller-expand-secret:

volumeSnapshotClasses:
- name: zol-iscsi-snapclass
  parameters:
    detachedSnapshots: "true"
  secrets:
    snapshotter-secret:

driver:
  config:
    driver: zfs-generic-iscsi
    sshConnection:
      host: 192.168.60.104
      port: 22
      username: root
      password: "<root-password>"
    zfs:
      cli:
        paths:
          zfs: /usr/sbin/zfs
          zpool: /usr/sbin/zpool
          sudo: /usr/bin/sudo
          chroot: /usr/sbin/chroot
      datasetParentName: pool0/vols
      detachedSnapshotsDatasetParentName: pool0/snaps
      zvolCompression:
      zvolDedup:
      zvolEnableReservation: false
      zvolBlocksize:
    iscsi:
      shareStrategy: "targetCli"
      shareStrategyTargetCli:
        #sudoEnabled: true
        basename: "iqn.1994-05.com.redhat:bc10524a297"
        tpg:
          attributes:
            authentication: 0
            generate_node_acls: 1
            cache_dynamic_acls: 1
            demo_mode_write_protect: 0
          auth:
      targetPortal: "192.168.60.104:3260"
      interface: ""
      namePrefix:
      nameSuffix:

To install with helm, just do the following

helm upgrade --install --namespace democratic-csi \
  --values zfs-iscsi.yaml \
  --set node.driver.localtimeHostPath=false \
  --set node.rbac.openshift.privileged=true \
  --set controller.rbac.openshift.privileged=true \
  zfs-iscsi democratic-csi/democratic-csi

helm upgrade --install --namespace democratic-csi \
  --values nfs-remote.yaml \
  --set node.driver.localtimeHostPath=false \
  --set node.rbac.openshift.privileged=true \
  --set controller.rbac.openshift.privileged=true \
  zfs-nfs democratic-csi/democratic-csi

Keep in mind, if you already have democratic-csi installed, this will nuke the existing secrets and break your storage classes for TrueNAS. I would recommend putting your new ZOL drivers in their own namespace.
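To make the separate-namespace suggestion concrete, here's a rough sketch of a helper that prints the same helm command with a dedicated namespace. The function name and the "zol-csi" namespace are made up for illustration; `--create-namespace` is a standard helm flag that creates the namespace if it doesn't exist yet. It only prints the command, so nothing is installed until you run the output yourself.

```shell
# Print a helm command that installs a democratic-csi release into its own
# namespace. Arguments: release name, values file, namespace.
zol_helm_cmd() {
  local release="$1" values="$2" namespace="$3"
  # printf repeats the '%s ' format for every argument, so the whole
  # command ends up on one line separated by spaces.
  printf '%s ' helm upgrade --install "$release" democratic-csi/democratic-csi \
    --namespace "$namespace" --create-namespace \
    --values "$values" \
    --set node.driver.localtimeHostPath=false \
    --set node.rbac.openshift.privileged=true \
    --set controller.rbac.openshift.privileged=true
  echo
}

# "zol-csi" is a hypothetical namespace name
zol_helm_cmd zfs-nfs nfs-remote.yaml zol-csi
zol_helm_cmd zfs-iscsi zfs-iscsi.yaml zol-csi
```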

oc patch storageprofile zol-iscsi -p '{"spec":{"claimPropertySets":[{"accessModes":["ReadWriteMany"],"volumeMode":"Block"}],"cloneStrategy":"csi-clone"}}' --type=merge

oc patch storageprofile zol-nfs -p '{"spec":{"claimPropertySets":[{"accessModes":["ReadWriteMany"],"volumeMode":"Filesystem"}],"cloneStrategy":"csi-clone"}}' --type=merge

And you may also want to set your default storage class, eg;

oc annotate storageclass zol-nfs "storageclass.kubernetes.io/is-default-class=true"

MinIO

It's astounding to me how unbelievably difficult it has been to get reliable, stable S3 storage on TrueNAS Scale. There was a native MinIO plugin on TrueNAS Core which I used for years without issue. Moving this functionality to the "apps" feature was a big mistake IMHO.

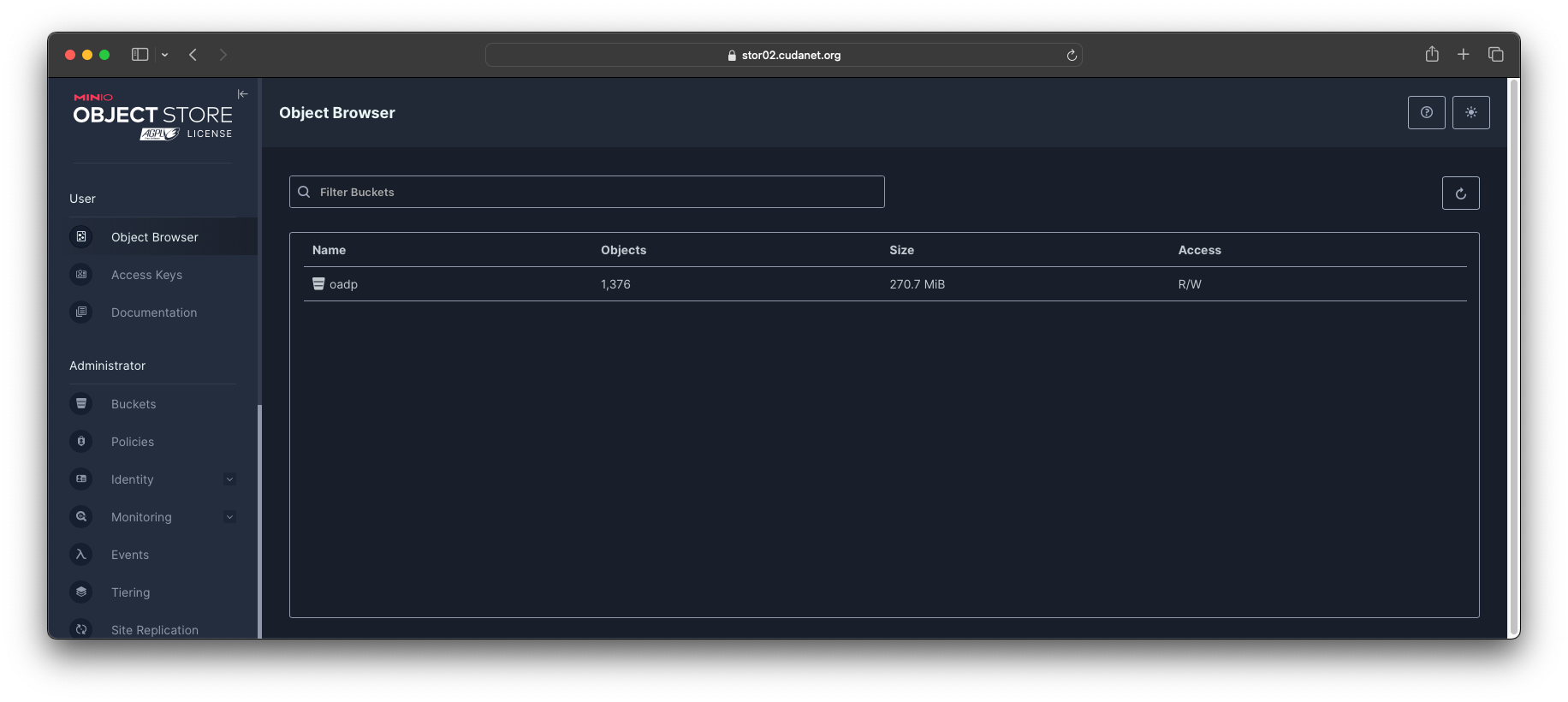

Thankfully, on RHEL, installing MinIO is done natively and is a pretty straightforward process. However, enabling SSL does take some additional work. In my experience it was a lot easier to handle using nginx as a reverse proxy than trying to enable SSL natively on the MinIO server itself. And, it comes with the added benefit of being able to take advantage of the deduplication for the entire zpool, whereas running it as an App on TrueNAS Scale means it can't take advantage of dedupe. This is kind of a big deal, since my primary use case for S3 storage on prem is as a backup target for OADP, which unfortunately, does not have any kind of change block tracking algorithm like VMware does. So, if you want any kind of efficiency, you need to rely on the underlying ZFS system to handle deduplication for you. Thankfully, changes to persistent volume data are small and infrequent, so backups should deduplicate quite well.

To install MinIO on RHEL, follow the instructions here

dnf -y install wget

wget https://dl.min.io/server/minio/release/linux-amd64/archive/minio-20240418190919.0.0-1.x86_64.rpm -O minio.rpm

dnf -y install minio.rpm

Create a ZFS dataset for MinIO

zfs create pool0/minio

Create the appropriate user and set ownership on the dataset

groupadd -r minio-user

useradd -M -r -g minio-user minio-user

chown minio-user:minio-user /pool0/minio

Then create the MinIO config file
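The config file needs a root user and password. If you'd rather generate random values than make them up, here is a minimal sketch. The function name is my own, and it assumes `od` and `/dev/urandom` are available, which they are on any stock RHEL install.

```shell
# Print a random lowercase hex string of the requested length (default 20).
random_hex() {
  local len="${1:-20}" hex
  # Each random byte becomes two hex characters, so read (len + 1) / 2 bytes
  # and trim the result to exactly len characters.
  hex="$(od -An -N"$(( (len + 1) / 2 ))" -tx1 /dev/urandom | tr -d ' \n')"
  printf '%s\n' "${hex:0:len}"
}

# Paste these two lines into /etc/default/minio
echo "MINIO_ROOT_USER=$(random_hex 20)"
echo "MINIO_ROOT_PASSWORD=$(random_hex 40)"
```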

vi /etc/default/minio

# MINIO_ROOT_USER and MINIO_ROOT_PASSWORD sets the root account for the MinIO server.

# This user has unrestricted permissions to perform S3 and administrative API operations on any resource in the deployment.

# Omit to use the default values 'minioadmin:minioadmin'.

# MinIO recommends setting non-default values as a best practice, regardless of environment

MINIO_ROOT_USER=EH1MLGYF24GWFXNHEP16

MINIO_ROOT_PASSWORD=480E2AONQWN41S0NAGY4

# MINIO_VOLUMES sets the storage volume or path to use for the MinIO server.

MINIO_VOLUMES="/pool0/minio"

# MINIO_OPTS sets any additional commandline options to pass to the MinIO server.

# For example, `--console-address :9001` sets the MinIO Console listen port

MINIO_OPTS="--console-address :9001"

# MINIO_SERVER_URL sets the hostname of the local machine for use with the MinIO Server

# MinIO assumes your network control plane can correctly resolve this hostname to the local machine

# Uncomment the following line and replace the value with the correct hostname for the local machine and port for the MinIO server (9000 by default).

#MINIO_SERVER_URL="http://minio.example.net:9000"

Then in order to wrap your MinIO server in SSL, you're going to need to use nginx to reverse proxy to it. I'm sure there's a way to configure MinIO to serve its own SSL cert, but considering their own documentation for multinode deployments relies on nginx to load balance and reverse proxy, I just went that route.

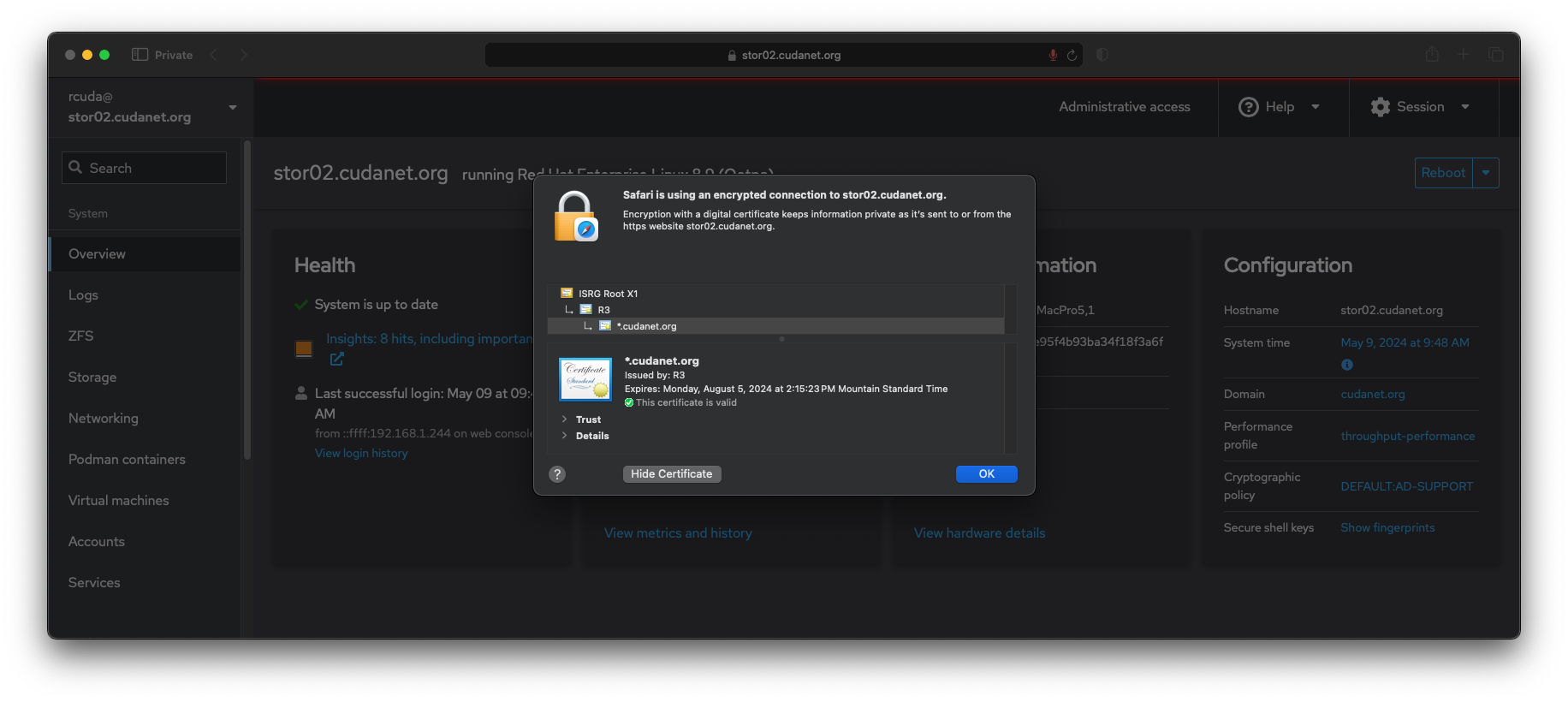

SSL

We're going to be using a few different web services and having valid signed SSL certs is going to save you a lot of headaches in the future, especially when dealing with Openshift, which is notoriously picky about security. I've been using Letsencrypt with Cloudflare DNS for generating my certs for a while now, and other than the fact that letsencrypt certs expire every 90 days, they work great.

To set up your system with a valid letsencrypt cert, you need to install certbot. If, like me, your ISP blocks port 80, you aren't going to be able to use the HTTP challenge method (the easy/preferred method for certbot) so you'll have to configure certbot to use DNS challenge. I use Cloudflare, but many other DNS providers like route53 are also supported. YMMV. The certbot package is available in the EPEL repo.

sudo dnf -y install certbot

# DO NOT RUN PIP3 AS ROOT

pip3 install certbot-dns-cloudflare

Then you will need to create a file with your cloudflare API credentials, eg;

# as root

mkdir -p ~/.secrets/certbot

touch ~/.secrets/certbot/cloudflare.ini

cat <<EOF > ~/.secrets/certbot/cloudflare.ini
# Cloudflare API credentials used by Certbot
dns_cloudflare_email = <your-cloudflare-email>
dns_cloudflare_api_key = 123456789012345678901234567890
EOF

chmod 0700 ~/.secrets ~/.secrets/certbot

chmod 0600 ~/.secrets/certbot/cloudflare.ini

Then you can generate your certificate like this.

certbot certonly \

--dns-cloudflare \

--dns-cloudflare-credentials ~/.secrets/certbot/cloudflare.ini \

--dns-cloudflare-propagation-seconds 60 \

-d *.cudanet.org \

-d *.prod.cudanet.org \

-d *.dev.cudanet.org \

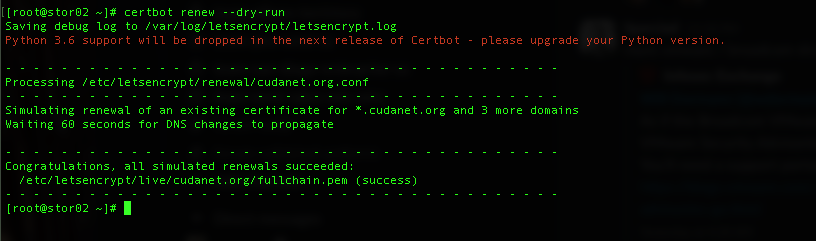

-d *.infra.cudanet.orgThen theoretically, all you need to do to rotate the cert in the future is run certbot renew

You can test renewing your cert by running certbot renew --dry-run

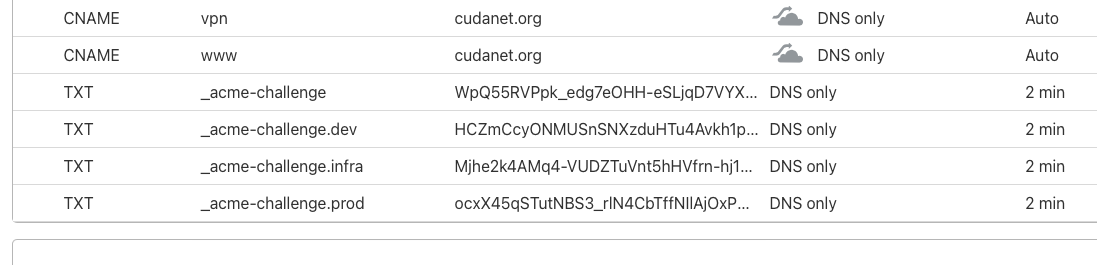

If we check Cloudflare, we can see certbot generating the appropriate TXT records. Also, it has the added benefit of 'cleaning up after itself' so your DNS domain doesn't get littered with unnecessary TXT records.

Install Nginx

Now, with a fresh letsencrypt cert, we can spin up nginx to wrap all our web services in SSL.

dnf -y install nginx

systemctl enable --now nginx

Then you can set up your reverse proxy for MinIO like the example here:

# For more information on configuration, see:

# * Official English Documentation: http://nginx.org/en/docs/

# * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

# Load modular configuration files from the /etc/nginx/conf.d directory.

# See http://nginx.org/en/docs/ngx_core_module.html#include

# for more information.

include /etc/nginx/conf.d/*.conf;

upstream minio_s3 {

least_conn;

server 192.168.1.191:9000;

}

upstream minio_console {

least_conn;

server 192.168.1.191:9001;

}

server {

listen 9443 ssl;

server_name minio.cudanet.org;

ssl_certificate "/etc/letsencrypt/live/cudanet.org/fullchain.pem";

ssl_certificate_key "/etc/letsencrypt/live/cudanet.org/privkey.pem";

# Allow special characters in headers

ignore_invalid_headers off;

# Allow any size file to be uploaded.

# Set to a value such as 1000m; to restrict file size to a specific value

client_max_body_size 0;

# Disable buffering

proxy_buffering off;

proxy_request_buffering off;

location / {

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_connect_timeout 300;

# Default is HTTP/1, keepalive is only enabled in HTTP/1.1

proxy_http_version 1.1;

proxy_set_header Connection "";

chunked_transfer_encoding off;

proxy_pass http://minio_s3; # This uses the upstream directive definition to load balance

}

}

server {

listen 443 ssl;

server_name stor02.cudanet.org;

ssl_certificate "/etc/letsencrypt/live/cudanet.org/fullchain.pem";

ssl_certificate_key "/etc/letsencrypt/live/cudanet.org/privkey.pem";

# Allow special characters in headers

ignore_invalid_headers off;

# Allow any size file to be uploaded.

# Set to a value such as 1000m; to restrict file size to a specific value

client_max_body_size 0;

# Disable buffering

proxy_buffering off;

proxy_request_buffering off;

location / {

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-NginX-Proxy true;

# This is necessary to pass the correct IP to be hashed

real_ip_header X-Real-IP;

proxy_connect_timeout 300;

# To support websocket

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

chunked_transfer_encoding off;

proxy_pass http://minio_console/; # This uses the upstream directive definition to load balance

}

}

}

In my example, I am redirecting the MinIO web console to port 443 which can be accessed at https://stor02.cudanet.org/login and the S3 API itself can be accessed at https://minio.cudanet.org:9443 instead of HTTP ports 9000 and 9001 respectively.

Configure LDAP Authentication

I use Active Directory in my lab for authentication and RBAC. Domain joining a RHEL server is pretty straightforward. RHEL uses SSSD to join to Active Directory domains (or any other LDAP/Kerberos server). Configuration may vary slightly for your environment, but this is what works for me

dnf install -y realmd sssd oddjob oddjob-mkhomedir adcli samba-common samba-common-tools krb5-workstation openldap-clients

realm join -U rcuda cudanet.org

cat <<EOF > /etc/sssd/sssd.conf

[sssd]

domains = cudanet.org

config_file_version = 2

services = nss, pam

[domain/cudanet.org]

ad_domain = cudanet.org

krb5_realm = CUDANET.ORG

realmd_tags = manages-system joined-with-adcli

cache_credentials = True

id_provider = ad

krb5_store_password_if_offline = True

default_shell = /bin/bash

ldap_id_mapping = True

use_fully_qualified_names = False

fallback_homedir = /home/%u

access_provider = simple

ad_gpo_ignore_unreadable = True

ad_gpo_access_control = permissive

EOF

systemctl restart sssd

echo "%domain\ admins ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

realm permit [email protected]

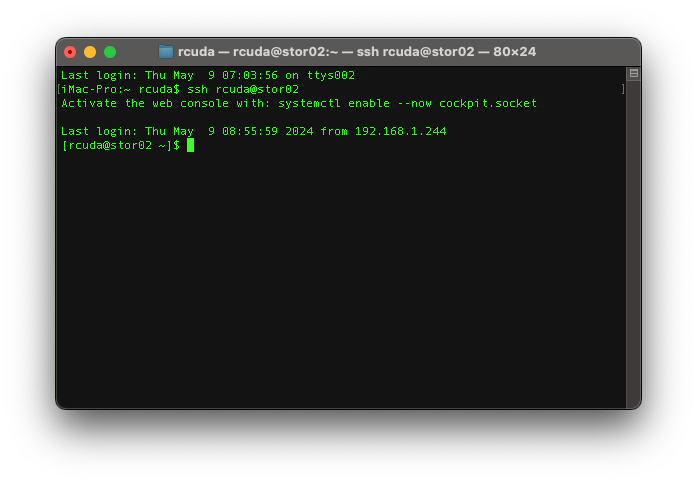

update-crypto-policies --set DEFAULT:AD-SUPPORTThen try to SSH to your server as a domain user to verify you have configured LDAP correctly

Success!

Cockpit

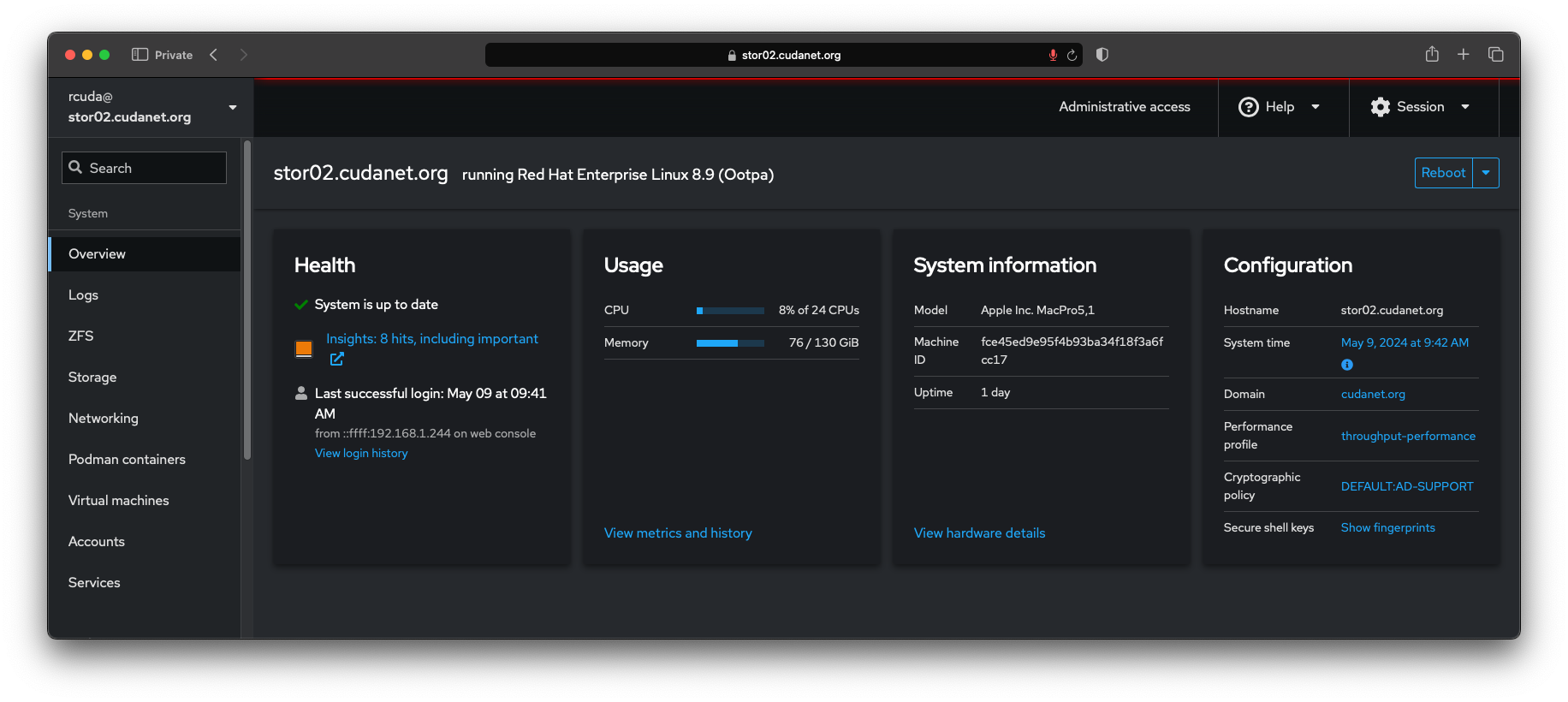

Cockpit is a handy web UI for accessing and managing your server(s). It has lots of plugins for various functions like managing networking, storage, virtual machines and containers to name a few.

dnf -y install cockpit cockpit-ws

systemctl enable --now cockpit.socket

Then you can access your server's cockpit interface on HTTPS port 9090, eg; https://stor02.cudanet.org:9090

Bonus points - configuring SSL

By default, cockpit uses a self signed certificate + keypair which is stored in 3 files

/etc/cockpit/ws-certs.d/0-self-signed-ca.pem

/etc/cockpit/ws-certs.d/0-self-signed.cert

/etc/cockpit/ws-certs.d/0-self-signed.key

I'm not sure if there's a native mechanism for replacing cockpit's cert, but this works for me. First, generate a cert and private key using, eg; certbot with letsencrypt. Then copy the first cert in fullchain.pem to 0-self-signed.cert and the second cert in the chain to 0-self-signed-ca.pem, copy your privkey.pem to 0-self-signed.key, and restart the cockpit service.

One of these days I'll script this for automated cert rotation but for now something like this works. Just keep in mind, the format of this cert differs from the format of the certificate chain generated by certbot, so you'll have to re-run this every time the certbot cert is renewed. Probably something you could put into a cron job.

mkdir cockpit-cert

cd cockpit-cert

awk 'BEGIN {c=0;} /BEGIN CERT/{c++} { print > "cert." c ".pem"}' < /etc/letsencrypt/live/cudanet.org/fullchain.pem

cat cert.1.pem > /etc/cockpit/ws-certs.d/0-self-signed.cert

cat cert.2.pem > /etc/cockpit/ws-certs.d/0-self-signed-ca.pem

cat /etc/letsencrypt/live/cudanet.org/privkey.pem > /etc/cockpit/ws-certs.d/0-self-signed.key

systemctl restart cockpit.socket
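As a starting point for automating this, the same steps could be wrapped in a certbot deploy hook. This is an untested sketch, not something I'm running: it assumes the script is dropped into /etc/letsencrypt/renewal-hooks/deploy/ and made executable, and it relies on certbot exporting RENEWED_LINEAGE (the live directory of the renewed cert) when it runs deploy hooks. The function name is made up. Unlike the commands above, it writes every cert after the first into the CA file rather than only the second one.

```shell
#!/usr/bin/env bash
# Sketch of a certbot deploy hook that refreshes Cockpit's certificate files.

# Arguments: certbot lineage directory, Cockpit certificate directory.
install_cockpit_cert() {
  local lineage="$1" dest="$2"
  # The first certificate in fullchain.pem is the server cert;
  # everything after it is the issuing chain.
  awk -v leaf="$dest/0-self-signed.cert" -v chain="$dest/0-self-signed-ca.pem" '
    /BEGIN CERTIFICATE/ { n++ }
    n == 1 { print > leaf }
    n >= 2 { print > chain }
  ' "$lineage/fullchain.pem"
  cat "$lineage/privkey.pem" > "$dest/0-self-signed.key"
}

# Only act when certbot invokes the hook after a successful renewal.
if [ -n "${RENEWED_LINEAGE:-}" ]; then
  install_cockpit_cert "$RENEWED_LINEAGE" /etc/cockpit/ws-certs.d
  systemctl restart cockpit.socket
fi
```

Since the hook only runs when certbot actually renews something, it avoids restarting Cockpit on every cron run.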

Or... we could reverse proxy it using Nginx, but I don't want to keep track of yet another non-standard port number.

AFP/Time Machine

The fact that iX Systems decided to rip out this function from TrueNAS really irritated me. There is an open source implementation of Apple Filing Protocol for Linux called netatalk. Netatalk is included in the EPEL repo which we already enabled earlier to install ZFS. I've never been a fan of samba/CIFS for filesharing due to the complexity of getting permissions correct, especially across multiple platforms. By comparison, Netatalk is brain-dead simple to set up.

dnf -y install netatalk

systemctl enable --now netatalk

Then configuring AFP fileshares is quite simple. Fileshares are configured in /etc/netatalk/afp.conf and utilize your server's authentication methods, eg; if you have your server domain joined using SSSD, you can use domain groups or individual users to manage access to fileshares. First, create the appropriate zfs dataset(s). For instance, if you want individual user shares for Time Machine backups, you might do something like this

zfs create pool0/timemachine

zfs create pool0/timemachine/rcuda

chown -vR rcuda /pool0/timemachine/rcuda

Here's my AFP config

;

; Netatalk 3.x configuration file

;

[Global]

mimic model = MacPro5,1

; Global server settings

; [Homes]

; basedir regex = /home

; [My AFP Volume]

; path = /path/to/volume

; [My Time Machine Volume]

; path = /path/to/backup

; time machine = yes

[timemachine]

path = /pool0/timemachine/rcuda

time machine = yes

valid users = rcuda

[nas]

path = /pool0/nas

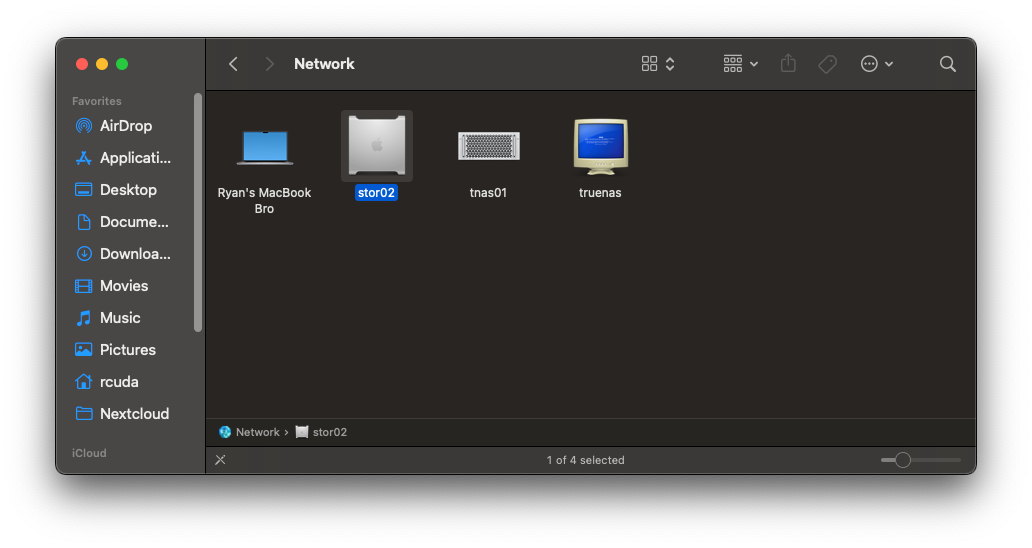

time machine = no

valid users = "@domain users"With this configuration, I have a share set up for myself specifically for Time Machine backups, and I have my main "nas" share (where all my movies and ISO files and such are stored) configured for AFP shares for all domain users without Time Machine enabled. I really wish that configuring samba was this straightforward. Also, by default netatalk configures Avahi (aka; bonjour, aka; mDNS) for advertising services. Bonus points - since this is actually running on an old Mac Pro, I used that as the model it identifies itself as, which is what you'll see in the Finder.

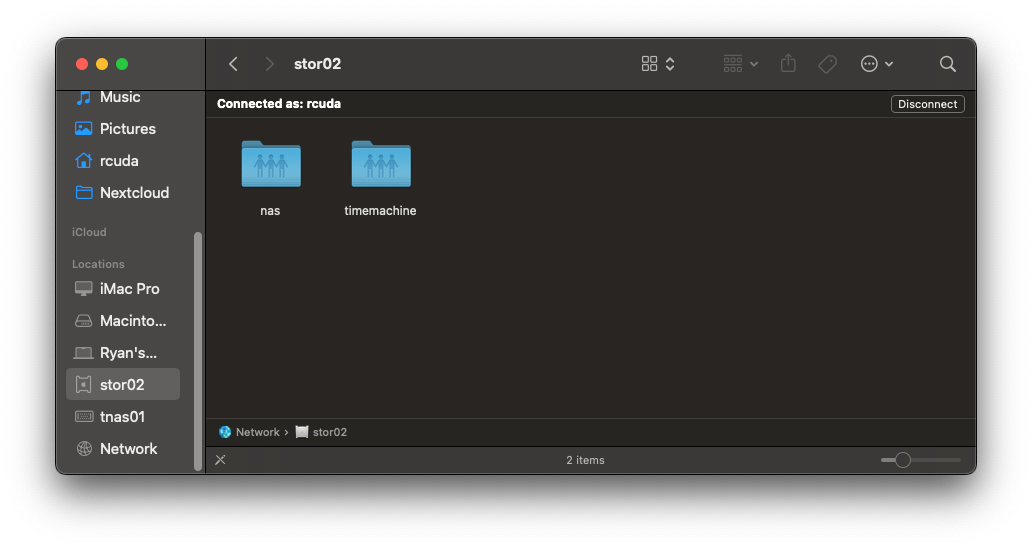

You should be able to browse to any shares that your domain user has access to

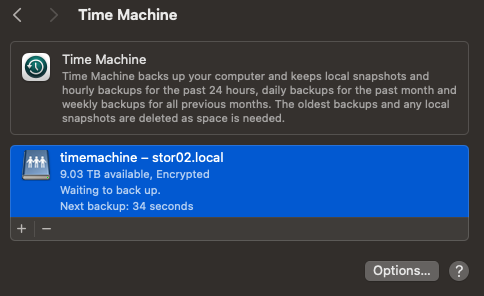

Setting up Time Machine

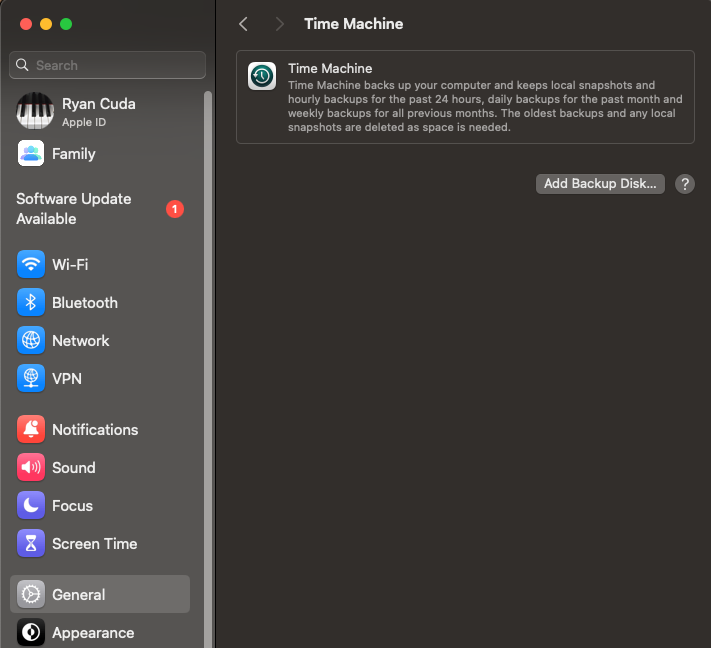

On your Mac, open System Settings and navigate to General > Time Machine.

Then click on Add Backup Disk...

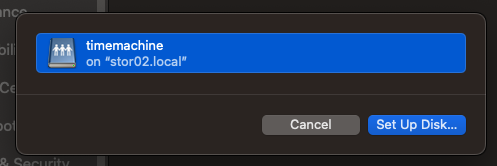

Select your Time Machine fileshare and click Set Up Disk...

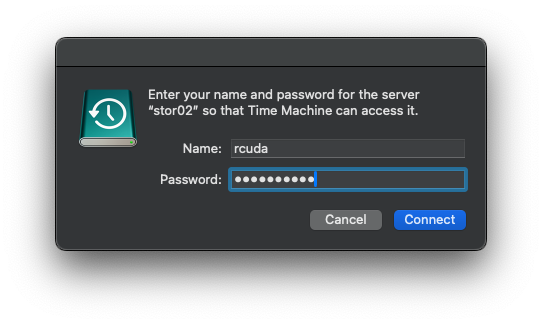

Enter your domain user credentials

Run your first backup

That's it. Clean and simple.

Libvirt + KVM

This part is totally optional, but considering the loss of my primary domain controller VM is what prompted me to bail on TrueNAS, I figure it's good to go over how to set up your server as a virtualization host. Eventually, the plan is to run one domain controller per NAS server on direct attached storage, so theoretically, things like CSI driver outages shouldn't affect my AD environment. I'm going to be using cockpit-machines to manage VMs from the web console.

dnf install cockpit cockpit-ws cockpit-machines libvirt

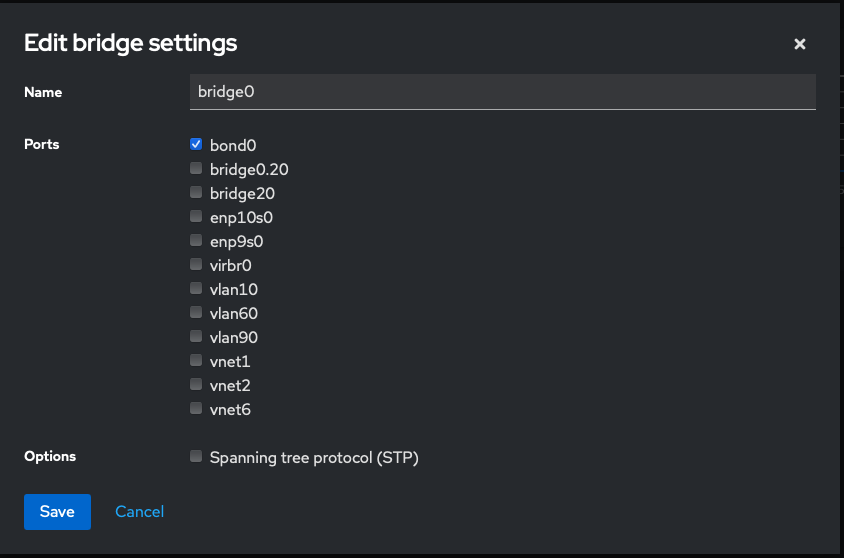

systemctl enable --now cockpit.socketThen, figuring out bridged networking was kind of confusing, but ultimately this is what worked for me. I typically run my VMs on VLAN 20. In order to get bridged networking functioning properly I had to set up a bridge interface like this

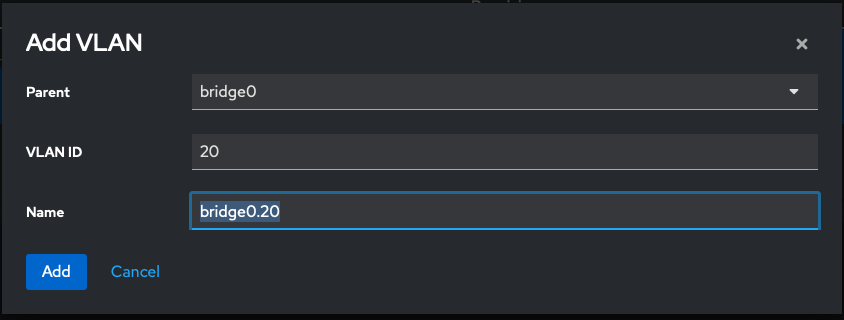

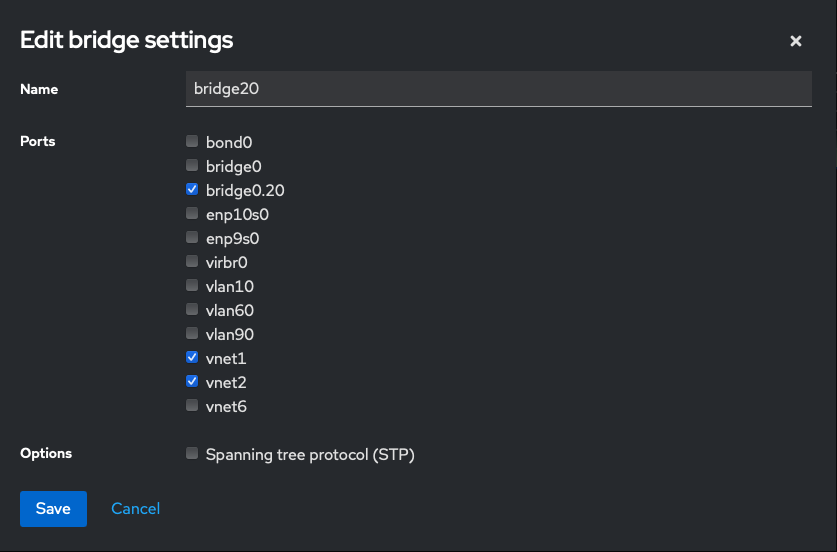

enp9s0 + enp10s0 > bond0 > bridge0 > bridge0.20 > bridge20 > vm

For what it's worth, I was not able to create the appropriate bridge interfaces using the nmtui tool like I typically use to manage networking on RHEL servers. I had to use the cockpit web console.

Step 1. Create a bridge interface

Create a bridge interface, eg; bridge0, selecting the bond0 interface as its port

Step 2. Create a VLAN

Add a VLAN, eg; bridge0.20

Step 3. Create a bridge to the VLAN

Add another bridge, selecting the VLAN bridge0.20 as its port.

Step 4. Attach VM to the bridge

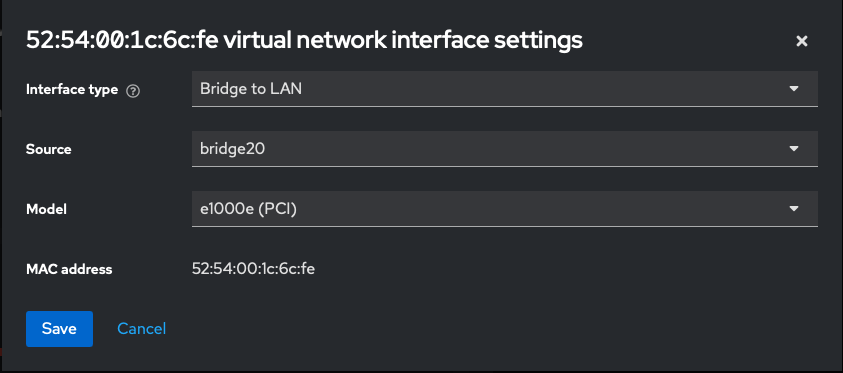

Finally, create a VM and configure its NIC with the following settings -

Interface Type: Bridge to LAN

Source: bridge20

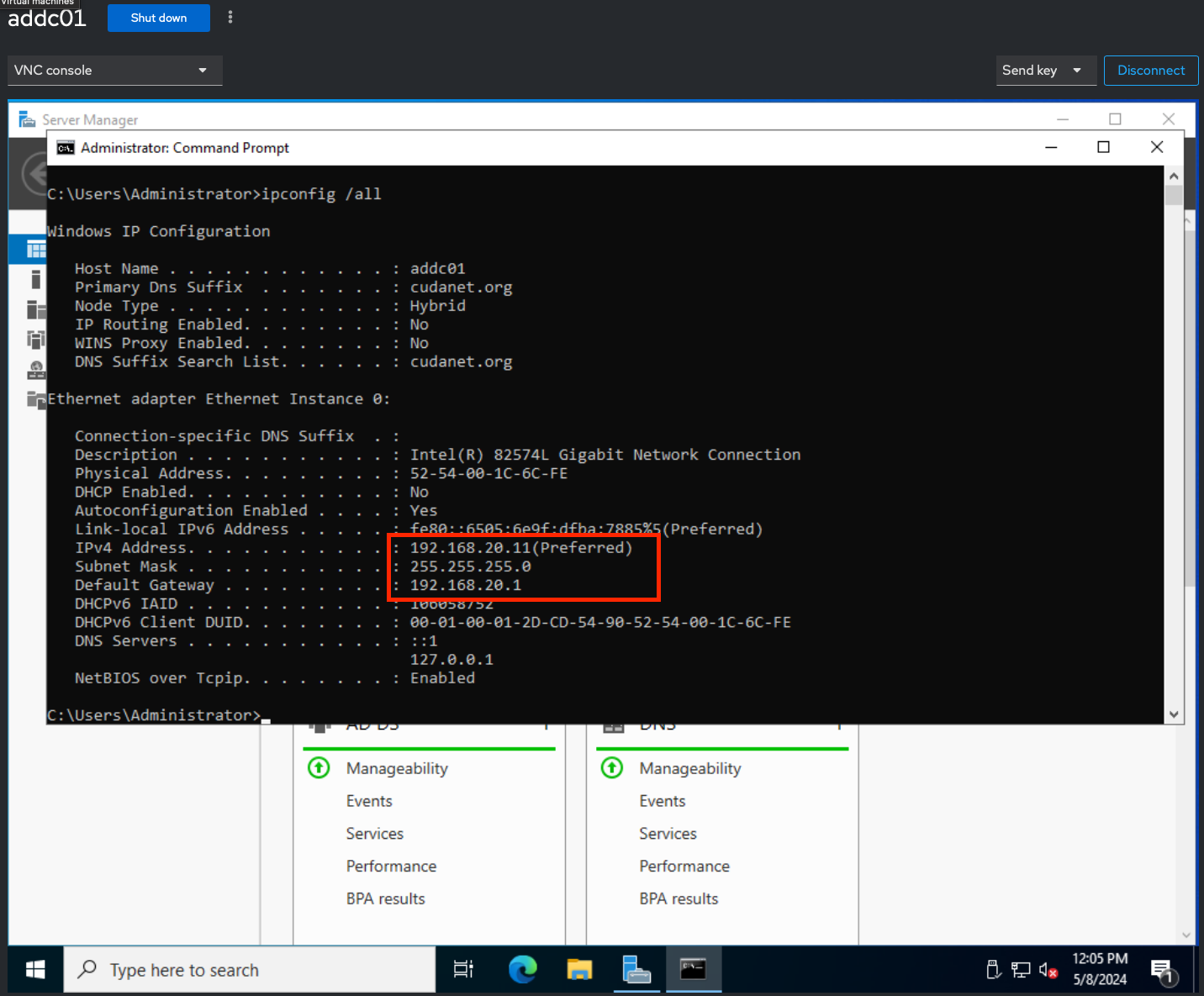

As we can see, the VM now has an IP address in the 192.168.20.0/24 subnet (VLAN20)

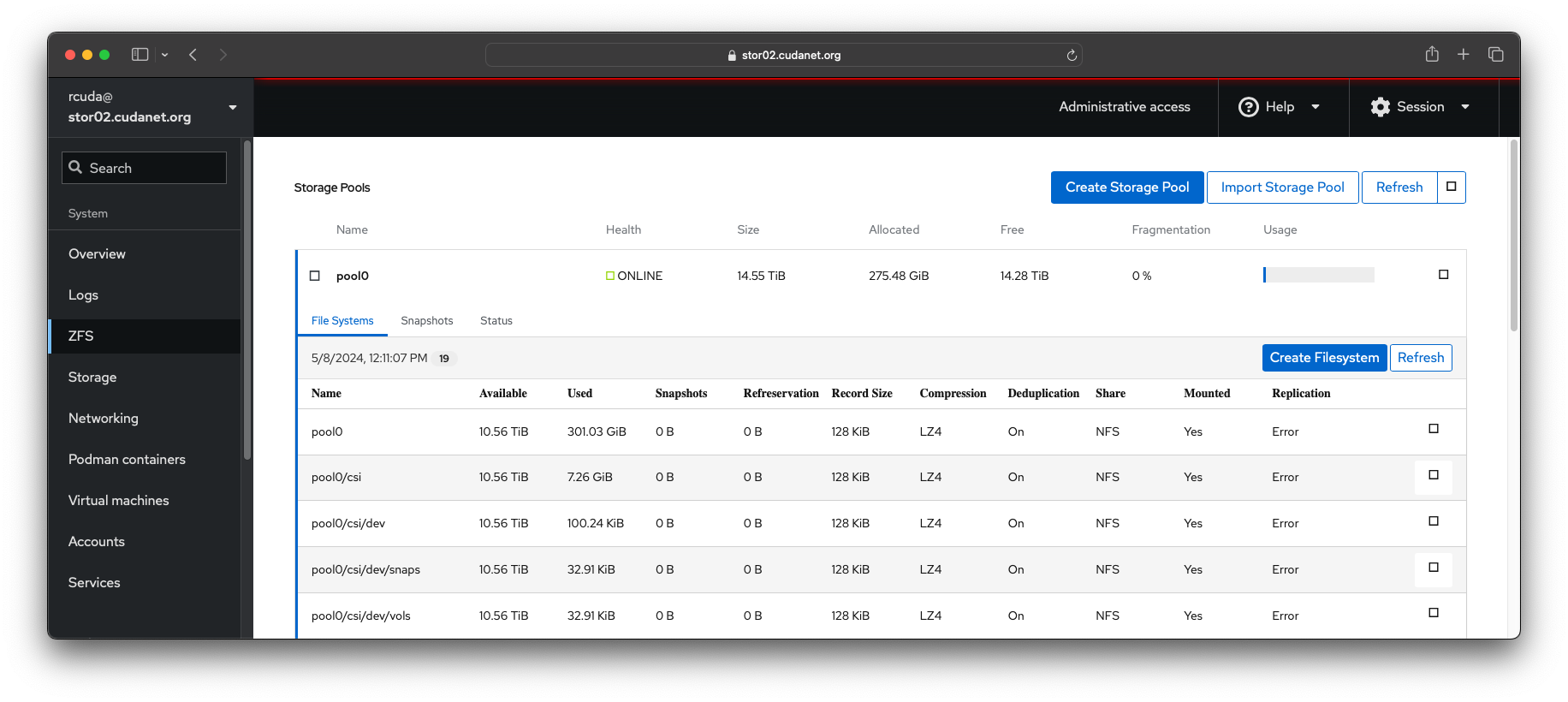
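For reference, the same bond0 > bridge0 > bridge0.20 > bridge20 chain could probably be expressed with nmcli as well. I haven't tested this (I used the cockpit web console), so treat it as a sketch: the function name is made up, it assumes an existing connection profile named after the bond, it does not deal with moving any IP configuration from the bond to the bridge, and it only prints the commands rather than running them.

```shell
# Print (do not run) nmcli commands for the chain
#   <bond> > bridge0 > bridge0.<vlan> > bridge<vlan>
# Arguments: bond connection name, VLAN ID.
bridge_vlan_cmds() {
  local bond="$1" vlan="$2"
  # Step 1: a bridge with the bond as its port
  echo "nmcli connection add type bridge con-name bridge0 ifname bridge0"
  echo "nmcli connection modify ${bond} connection.master bridge0 connection.slave-type bridge"
  # Steps 2 and 3: a second bridge with a VLAN on bridge0 as its port
  echo "nmcli connection add type bridge con-name bridge${vlan} ifname bridge${vlan}"
  echo "nmcli connection add type vlan con-name bridge0.${vlan} ifname bridge0.${vlan} dev bridge0 id ${vlan} master bridge${vlan} slave-type bridge"
}

bridge_vlan_cmds bond0 20
```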

cockpit-zfs-manager

The last thing that was missing for me was a way to easily view and manage ZFS volumes. A colleague introduced me to this project https://github.com/optimans/cockpit-zfs-manager which does exactly that: it adds a plugin to Cockpit for managing your ZFS pools.

Installing cockpit-zfs-manager is easy

git clone https://github.com/optimans/cockpit-zfs-manager.git

sudo cp -r cockpit-zfs-manager/zfs /usr/share/cockpit

systemctl restart cockpit.socket

Upon initial setup, you may also be prompted to install samba among other things. You don't have to install samba if you don't plan on using it, but cockpit-zfs-manager does make it easy to configure new SMB shares as well.

Wrapping up

I'm in the process of migrating my data (again) from TrueNAS to RHEL + ZFS, and will end up moving it back (re-again) to the TrueNAS server after I wipe it. I was hoping to be able to just import my zpool as is, but unfortunately the ZFS implementation on the latest version of TrueNAS Scale is not compatible with the version of ZoL for RHEL. I ended up hitting an error:

This pool uses the following feature(s) not supported by this system: com.klarasystems:vdev_zaps_v2

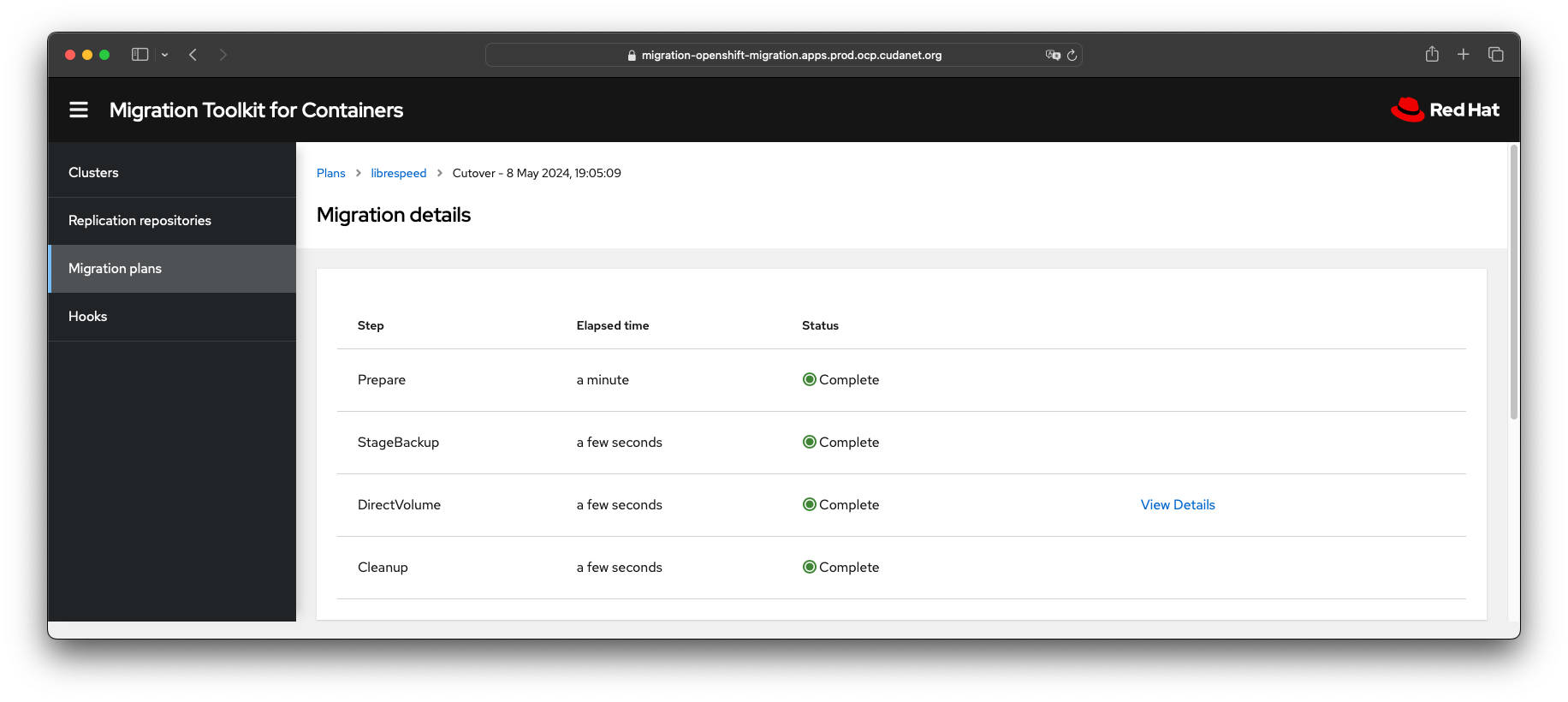

Which was a bummer, but not the end of the world. I'm also in the process of migrating my PVCs off of TrueNAS using the Migration Toolkit for Containers, which has been working great.