From AI to the Edge - Red Hat Device Edge on a Raspberry Pi 4

I just got back from Red Hat One yesterday and my head is buzzing with all of the exciting new places technology will go in 2024 and beyond. It should come as no surprise to anyone that AI is going to be at the center of it all. If you're not thinking about AI at this point, you're doing it wrong. I mean, I get it. AI is scary. It's daunting. It's hard to fathom how this new disruptive technology is going to change your organization's IT operations, and what harnessing the power of AI is going to bring to the enterprise. It's going to revolutionize the way that companies operate, in ways both good and bad. But what does that have to do with a Raspberry Pi? Read on and I'll tell you a story...

Back when my son was in middle school, I helped him with a science fair project where he built an automatic fish feeder using a Raspberry Pi and a stepper motor. Granted, I did most of the "heavy lifting", but I taught my son about SSH, Python and cron jobs (which I'm sure he promptly brain-dumped after the science fair). At the time, the term "edge" wasn't really a thing. Back then the buzzword was "IoT", or "Internet of Things". The Raspberry Pi in its various forms is for all intents and purposes the go-to device for all things IoT. Almost without exception, if someone is going to start tinkering with edge or IoT, you can bet they're going to start their project on a Pi.

Now, building an automatic fish feeder isn't about to disrupt the fish feeding industry or eliminate any jobs. But it did illuminate the possibilities of IoT devices - small, non-traditional, purpose built devices that have real world applications to do "stuff". In this case, it fed a fish once a day. That is an example of automation in the real world - leveraging computers to do work that a human normally would do, or perhaps in some cases, work that a human can't do. Granted, that science fair project wasn't going to replace anyone's job, but the concept is sound. If I can automate this one task using technology, what else can I automate? Imagine the concept applied at scale and we start to see a bigger picture.

But what does all that have to do with AI? Well, to answer that question, we really have to define what AI actually is. Watch any science fiction movie and you'll quickly learn that AI is going to be the thing that either enslaves or destroys mankind. Taking a slightly less dystopian view, and put as simply as possible, AI is a catch-all term for emerging technologies like LLMs (Large Language Models), which are essentially computers getting better at finding patterns in sets of data, something that previously would have been impossible. The larger the dataset you feed an AI model, the better it gets at finding patterns. So no, AI is not computers becoming sentient. It's just the next evolution in computing capabilities. But let's circle back to the Raspberry Pi - where does an IoT or edge device fit into this picture?

While IoT and edge are not necessarily synonymous, there is considerable overlap between the two concepts. An IoT device is typically an Internet connected "smart" device that does "stuff". Think smart lightbulbs. An edge device is generally a purpose built, non-traditional computing device that exists at the periphery (edge) of a network. An IoT device can be an edge device and vice versa, but the two are not exactly the same.

So let's think about this concept as it pertains to AI. Obviously, you can't train AI models on a Raspberry Pi. For that you need lots of RAM, lots of CPU and preferably, lots of GPU to throw at those workloads. But once you've trained a model, it becomes something portable that can run on much less beefy hardware, so you can deploy it to a small Openshift cluster at the 'near edge' - things like compact clusters or SNO (Single Node Openshift) start to look like viable deployment targets here. But what are you training your model against? Let's take a factory floor for instance. You might want to monitor the machines stamping out products for faults or failures. Surely that doesn't take much compute power at all; you're literally just taking sensor data and streaming it somewhere. This is where edge devices come into play - you could deploy a simple, low cost device that runs exactly one workload, for instance, scraping vibration data via a sensor to predict hardware failures in a production line. That's where Microshift comes into play.

What is Microshift exactly? Microshift - or more specifically, the Red Hat Build of Microshift - is a lightweight Kubernetes distribution based on Openshift. It lacks most of the bells and whistles of a full blown Openshift cluster like the web console and operator ecosystem, but still retains its Red Hat DNA. Like its big brother Openshift, Microshift is an opinionated Kubernetes distro that enforces some sensible security standards and comes bundled with some really nice to have features, like routes, out of the box. Unlike Openshift, Microshift is deployed as a single node, so there is no high availability. In fact, it's installed as an RPM package and runs as a systemd unit on a regular RHEL server, or preferably you can generate a custom OSTree based image using the RHEL Image Builder. Microshift has extremely light requirements - just 2 cores, 3 GB of RAM and 10 GB of storage. Officially, we call Microshift running on RHEL the "Red Hat Device Edge" platform. Currently, I've only tried starting from a regular (boot from ISO) RHEL install, mainly because the only ARM device I have in my lab is my one Raspberry Pi.

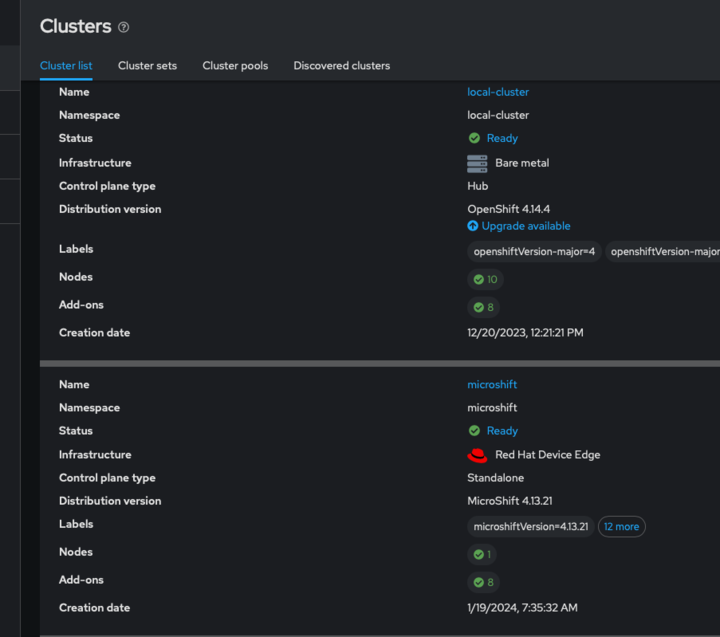

Interacting with a Microshift cluster is largely similar to working with any vanilla k8s cluster - login is handled via kubeconfig, and objects on the cluster are instantiated using YAML for the most part. It's worth pointing out that you can register a Microshift node to RHACM and manage workloads running on it using either subscriptions or ArgoCD application sets just like any other Openshift cluster, so you can easily view and manage workloads on your Microshift clusters without having to get your hands dirty doing manual deployments.
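To make the kubeconfig part concrete, here's a minimal sketch of how I'd pull the local admin kubeconfig into place once Microshift is up. The source path is the one the Microshift docs point to; the `install_kubeconfig` helper name is just something I made up for illustration, and the `oc` client is assumed to be installed already.

```shell
# install_kubeconfig SRC DEST: copy a kubeconfig into place and make it
# readable only by its owner. (Hypothetical helper, not part of Microshift.)
install_kubeconfig() {
    mkdir -p "$(dirname "$2")"
    cp "$1" "$2"
    chmod 600 "$2"
}

# Where Microshift writes the local admin kubeconfig, per the product docs.
src=/var/lib/microshift/resources/kubeadmin/kubeconfig
dest="$HOME/.kube/config"

if [ -r "$src" ]; then
    install_kubeconfig "$src" "$dest"
    oc get pods -A    # kubectl get pods -A works the same way
else
    echo "cannot read $src; run this as root on the Microshift host"
fi
```

From there it's the usual `oc apply -f something.yaml` workflow you'd use on any other cluster.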

Putting it all together, this is the general adoption pattern I envision for most customers looking to leverage the power of AI across their entire enterprise. Models will likely be built and trained in the cloud. Why? Because training an AI model is a very resource intensive workload, but those resources will only be needed for as long as it takes to train the model - typically a few weeks to a few months. Unless there is an ongoing need to train models often, it's probably more cost effective for customers to consume those resources on demand than to build out large AI farms on-prem (although that's certainly not outside the realm of possibility).

Then once the model has been trained you're going to want to actually run that model as close to where the data is coming from as possible - either in the datacenter, or possibly at the near edge simply due to latency. The "near edge" might be something like a compact or even single node cluster that sits on or near the factory floor with just enough power to run the AI model, which is far less resource intensive than actually training the model. This might be a handful of servers in an MDF closet for example. Oftentimes, these may be airgapped environments and may not have stringent requirements for high availability or five nines of uptime. If a forklift runs over something, or there's a natural disaster like a flood, production on the factory floor will stop anyway.

Finally, we come to the "edge", on the factory floor where we are capturing the data that will be fed to the AI model. This is where Microshift comes into play. Microshift gives you the ability to run Kubernetes workloads pretty much anywhere. Taking the Raspberry Pi for example, we're talking about a single board computer the size of a credit card that runs on USB power. You might have an application running on Microshift on the factory floor that gathers sensor data in real time and streams it to a more powerful Openshift cluster to be processed.

Or the tl;dr version -

Model gets trained in the cloud.

Trained model is run on Openshift at the near edge.

Data is fed from Microshift at the edge and processed by the AI model at the near edge.

Apologies for the long preamble, I just wanted to illustrate the "why". Now let's get into the "how".

Installing RHEL on a Raspberry Pi 4

It's no secret that the Raspberry Pi is basically the go-to device for all things edge, IoT or just plain tinkering. They're small, relatively inexpensive and pack plenty of features that make them a very attractive option for developers, tinkerers and hardware hackers. I'd wager that just about any project that needs a platform to start developing for any one of those use cases is going to start out on a Raspberry Pi. In fact, I'd even go so far as to say that the Raspberry Pi is likely where a fair number of people are first introduced to Linux.

With that being said, it's kind of baffling to me that for years now, Red Hat has pretty much pretended like the Raspberry Pi didn't exist, which really is a shame because while we neglected to acknowledge the RPi, Debian and its derivatives claimed the throne in the ARM based SBC market. As a result, Red Hat based distros have lagged pretty far behind on the Raspberry Pi. In fact, it wasn't until the release of Fedora 37 that we supported the Raspberry Pi and only then on the RPi3 and up, giving Debian a near decade head start. As for RHEL? Yeah, you could pretty much forget about that. There have been projects to port CentOS 7 to the RPi starting with model 4B going back to 2020, but up until fairly recently installing actual RHEL has been impossible.

However, thanks to the Raspberry Pi 4 UEFI Firmware Images project, it is now possible to boot generic UEFI based Linux distros (compiled for the appropriate CPU architecture, 64-bit ARMv8 with hardware floating point), which has opened the door for a much wider array of operating systems, RHEL included - with a few caveats. First and foremost, using the UEFI method, hardware support for some components on the Raspberry Pi is not included in Linux until kernel 5.7 or later. Read the notes on the Raspberry Pi 4 UEFI Firmware Images GitHub project for further details. What that means as of right now is that only RHEL 9 for ARM, which ships with Linux kernel 5.14.x, is supported via this method. RHEL 8 might boot, but won't have ethernet support, making it relatively useless for IoT/edge.
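If you want to check an already running system against that kernel 5.7 cutoff, something along these lines should do it. This is just a sketch: the `version_ge` helper is my own invention, and it leans on `sort -V` from GNU coreutils, which RHEL ships.

```shell
# version_ge A B: succeeds when version A is greater than or equal to B.
# Sorts the two versions and checks that B comes out first.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

kernel="$(uname -r)"          # on RHEL 9 this looks something like 5.14.0-<release>.aarch64
kernel_base="${kernel%%-*}"   # drop everything after the first dash

if version_ge "$kernel_base" 5.7; then
    echo "kernel $kernel_base is new enough for the UEFI boot method"
else
    echo "kernel $kernel_base predates 5.7; expect missing hardware support"
fi
```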

Prerequisites

What you'll need:

A Raspberry Pi 4 - I chose this starter kit that comes with everything you need including a case, power supply, cooling fan, heat sinks and an SD card. For the purpose of running RHEL Device Edge, you'll need at least the 4GB model, but I went with the 8GB.

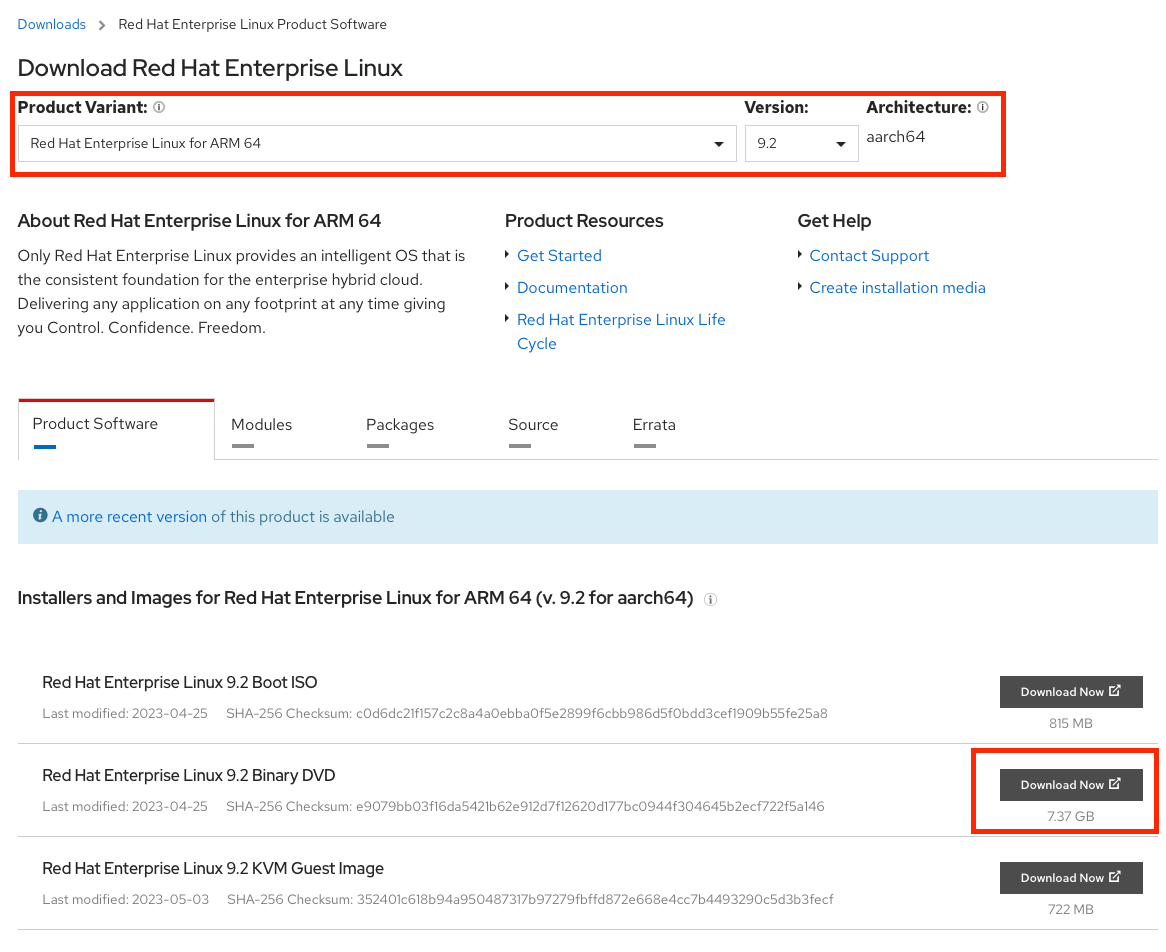

RHEL 9 for ARM - at least at the time I tested this, RHEL 9.3/Microshift 4.14 had a bug that prevented the microshift systemd service from starting properly on the node. This has probably been fixed by now, but in my case I ended up just rolling back to RHEL 9.2/Microshift 4.13 for the time being. Make sure you select 'Red Hat Enterprise Linux for ARM 64 - 9.2 - aarch64' and download the Binary DVD image. I ran into issues that caused the install to hang while downloading packages when I tried using the Boot ISO.

An 8GB USB drive - any thumb drive should do, but anecdotally, in my experience USB 2.0 devices, although slower, seem to boot more reliably on the Raspberry Pi.

RPi UEFI Firmware - My first attempt was to use the latest UEFI firmware, version 1.35, but it had some kind of bug that prevented the RHEL installer from booting. Rolling back to version 1.34 fixed that problem.

Procedure

1. Prepare your SD card for UEFI boot

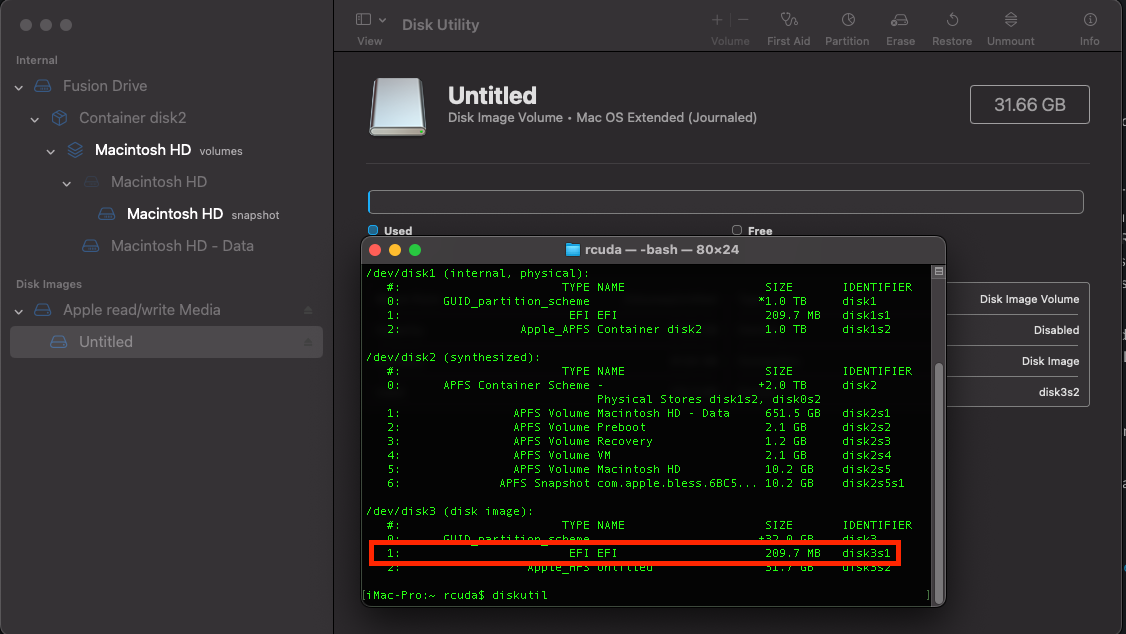

The first thing you're going to need to do is prepare your Raspberry Pi to boot into UEFI firmware. To do so, you need to format your SD card with a GPT partition table, with the first partition being at least 100MB and formatted as FAT32. If you're working on a Mac, just use Disk Utility to erase your SD card, selecting 'Mac OS Extended (Journaled)' (aka JHFS+) as the filesystem type and GUID Partition Map as the scheme. Disk Utility will only display the single data partition, but if you use diskutil in the terminal, you'll see that your SD card does in fact have a hidden 200MB EFI partition.

On most recent versions of macOS, you have to be root to mount EFI partitions. From the terminal do the following:

$ sudo su -

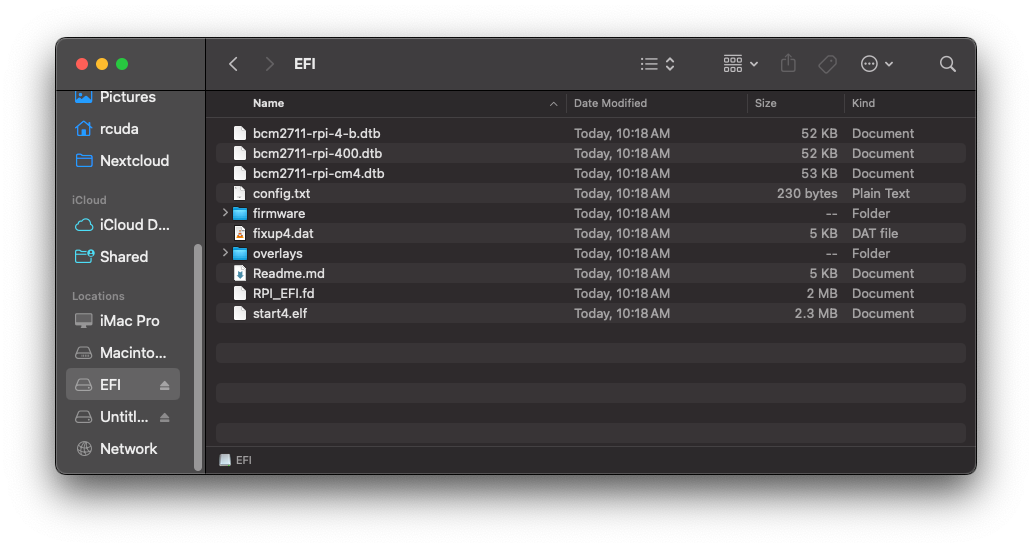

# diskutil mount /dev/disk3s1

then copy the contents of the RPi4_UEFI_Firmware_v1.34.zip file into the root of the sdcard EFI partition, e.g.:

cp -vR ./RPi4_UEFI_Firmware_v1.34/* /Volumes/EFI/

so that the root of the EFI partition on your sdcard looks like this:

Eject the sdcard and pop it into your Raspberry Pi.

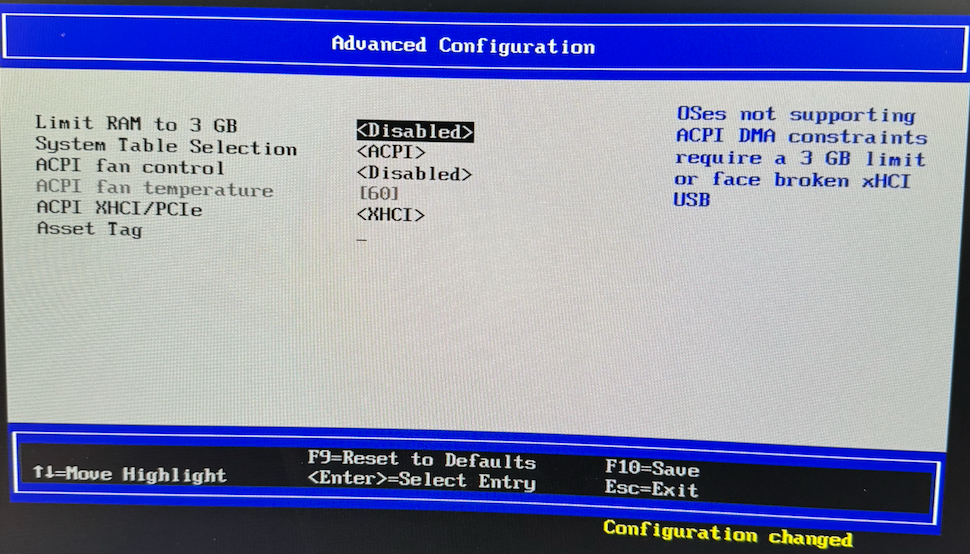
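A quick sanity check like the one below, run before ejecting the card, can save you a trip back to your laptop. Treat it as a sketch: the list of file names is my assumption about what the firmware zip contains, so compare it against the archive you actually downloaded. `check_uefi_files` and the `EFI_DIR` variable are names I made up.

```shell
# check_uefi_files DIR: report whether DIR looks like an unpacked copy of
# the RPi4 UEFI firmware. The file list below is an assumption; adjust it
# to match the contents of your zip.
check_uefi_files() {
    missing=0
    for f in RPI_EFI.fd start4.elf fixup4.dat config.txt; do
        if [ ! -f "$1/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}

# /Volumes/EFI is where macOS mounted the partition in the steps above.
efi_dir="${EFI_DIR:-/Volumes/EFI}"
if check_uefi_files "$efi_dir"; then
    echo "$efi_dir looks ready"
else
    echo "$efi_dir is missing firmware files (or is not mounted)"
fi
```

The same function works later on against /mnt/sysroot/boot/efi when you reload the firmware from the installer.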

2. Configure your Raspberry Pi to use more than 3 GB of RAM

There is a bug in the Broadcom SoC related to DMA that the OS has to account for in order to boot with more than 3 GB of RAM. To work around this, the UEFI firmware limits the RPi4 to only 3 GB of RAM by default, even on models that have more memory. You can read further on this here.

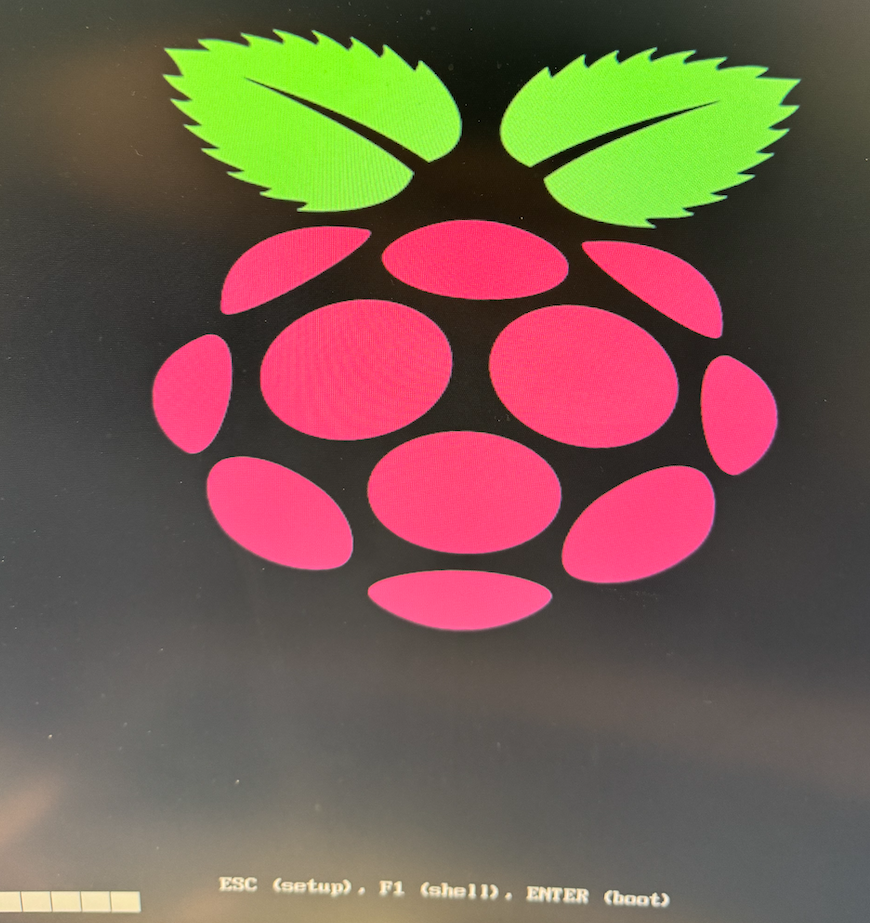

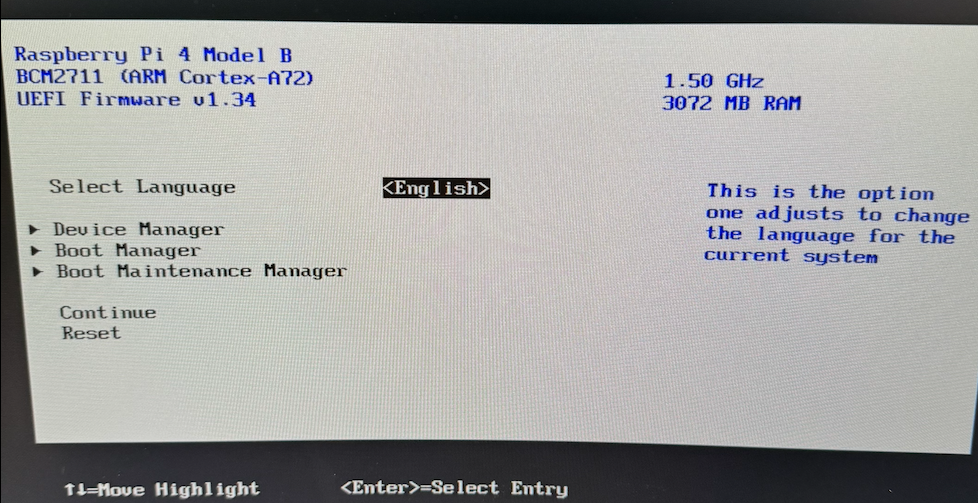

step 1) boot your RPi4 (with keyboard, mouse and video connected, sdcard installed).

step 2) At the UEFI boot screen, press esc.

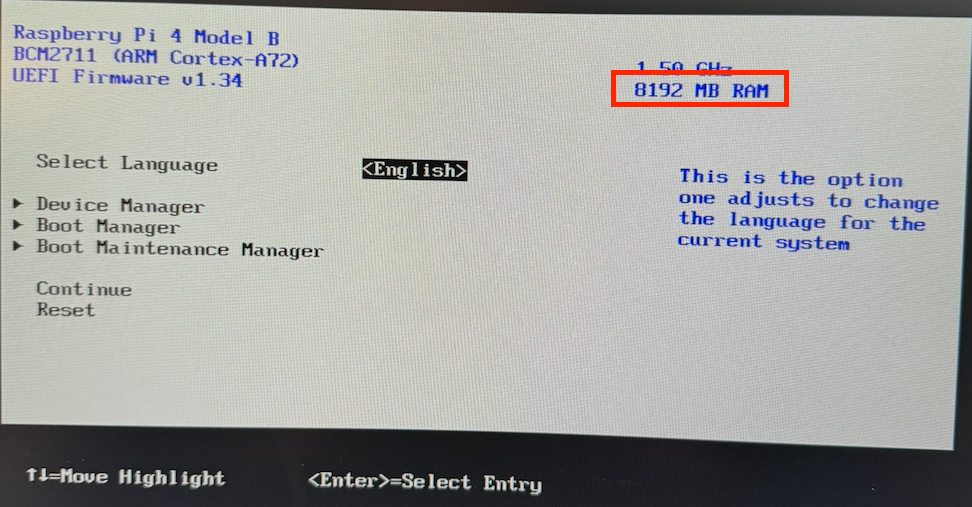

step 3) in the UEFI firmware menu (looks like a standard BIOS) select 'Device Manager'. Note that your Raspberry Pi is only reporting 3072 MB RAM

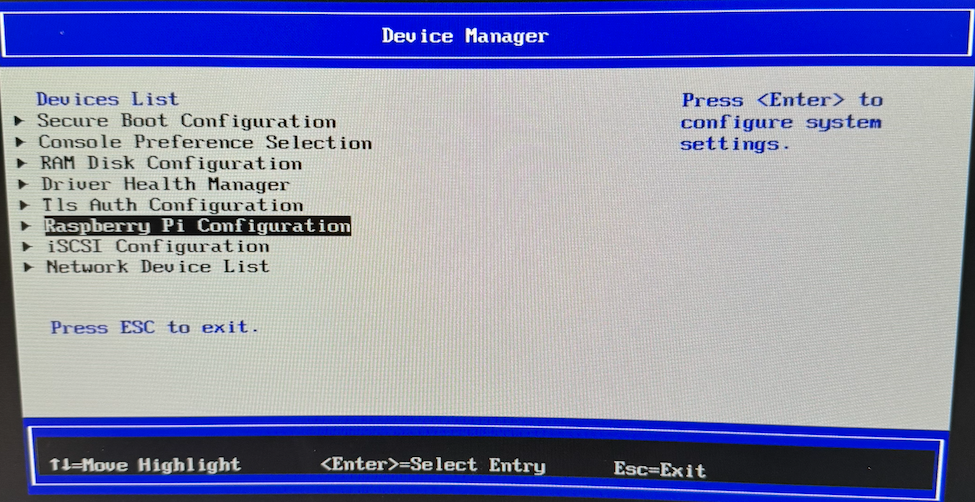

step 4) in the Device Manager menu, select Raspberry Pi Configuration

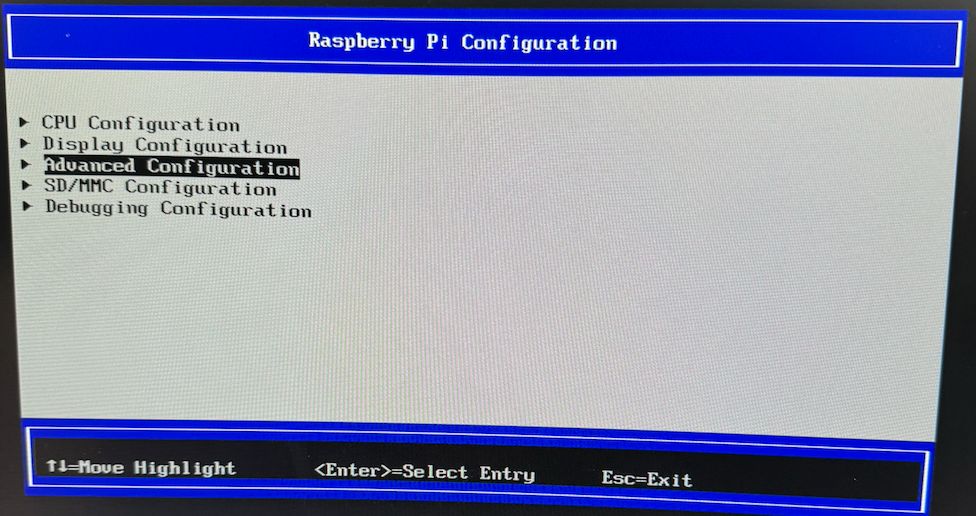

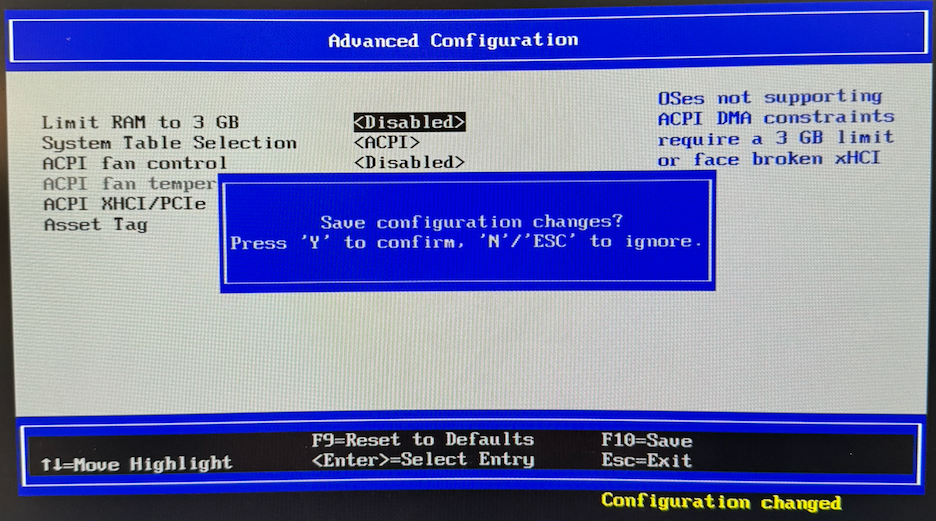

step 5) in the Raspberry Pi Configuration menu, select Advanced Configuration

step 6) in the Advanced Configuration menu, select Limit RAM to 3 GB and set it to Disabled

step 7) hit F10 to save the configuration and hit Y to save the changes

step 8) restart the Raspberry Pi. If you boot back into the UEFI firmware, you should see that it is reporting the full 8GB of RAM now
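Once RHEL is installed (that's the next section), you can confirm the same thing from the OS side. Here's a rough sketch that reads MemTotal from /proc/meminfo; `mem_total_mib` is a hypothetical helper name, and the 3072 MiB threshold simply mirrors what the firmware menu reported above.

```shell
# mem_total_mib [FILE]: print MemTotal from a /proc/meminfo-style file,
# converted from kB to MiB. Defaults to the live /proc/meminfo.
mem_total_mib() {
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

total="$(mem_total_mib 2>/dev/null || echo 0)"
if [ "${total:-0}" -gt 3072 ]; then
    echo "RAM limit lifted: ${total} MiB visible"
else
    echo "only ${total:-0} MiB visible; the 3 GB limit may still be enabled"
fi
```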

3. Install RHEL 9

From here on, installing RHEL 9 is pretty much a straightforward RHEL install but there are a couple of things you need to be aware of:

First, since the end goal here is to run Microshift, it's important to note that Microshift requires - at a minimum - for /var to be on a logical volume. Considering the small size of the sdcard, you can just put your root partition on an LV.

Second, when you install RHEL it will reformat the sdcard and wipe out the UEFI firmware you previously loaded onto the sdcard, so you will need to reload the firmware onto the EFI partition after the install is completed. You can either do that manually post install from another device, or you can do it while you're still booted into anaconda.

Procedure:

Step 1) plug your RHEL install thumb drive into one of the USB ports on your Raspberry Pi and turn it on.

Step 2) at the EFI boot screen, hit esc to access the UEFI firmware menu

Step 3) from the UEFI firmware menu, select Boot Manager

Step 4) from the Boot Manager menu, select your RHEL thumb drive

Step 5) you may or may not need to do this, but on my RPi4 I had to select Boot in low graphics mode to get the Anaconda UI to load

Step 6) once booted into the RHEL installer, set up your system as you normally would - set a system name, set up networking (I'd recommend DHCP, but it's not 100% necessary), set the time zone, etc. As previously mentioned, when you format the sdcard, just make sure you use LVM partitioning. Given the small size of the sdcard, letting the installer automatically create the partitions for you should be fine. By default, it should create a GPT disk layout with 3 partitions:

1. 600MB standard (EFI) partition formatted as FAT32, mounted at /boot/efi

2. 1GB standard Linux partition formatted as ext4 or xfs, mounted at /boot

3. the rest of the disk will be a single PV which belongs to a VG with a single LV for the root partition, formatted as ext4 or xfs

Select 'minimal install' as the system target and proceed with the installation as normal. Considering you're booting from a slow USB stick on a low power ARM device and writing to an even slower sdcard, don't expect the install to be quick. On my Raspberry Pi, the install took well over an hour.

Step 7) once you've completed the install you need to reload the UEFI firmware in order to boot your new RHEL system. Before rebooting your Raspberry Pi, hit alt+f2 to drop from the GUI to a console. From the console do the following:

cd /tmp

wget https://github.com/pftf/RPi4/releases/download/v1.34/RPi4_UEFI_Firmware_v1.34.zip

unzip RPi4_UEFI_Firmware_v1.34.zip -d RPi4_UEFI_Firmware_v1.34

cp -vR /tmp/RPi4_UEFI_Firmware_v1.34/* /mnt/sysroot/boot/efi/

Or, like I said before, you can pop the sdcard back into another computer and reload the UEFI firmware like you did the first time.

And that's it, you're done. Feel free to reboot your new RHEL 9 powered Raspberry Pi. Your mileage may vary, and you may have to manually add GRUB to the UEFI boot menu on first boot. If your Raspberry Pi does not automatically boot into RHEL 9, hit esc at the EFI boot menu and then select UEFI shell. From within the UEFI shell do the following:

fs0:

bcfg boot add 0 FS0:\EFI\redhat\grubaa64.efi

reset

After that, you should be able to boot normally without intervention.
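Before moving on to Microshift, it's worth confirming that the root filesystem (and therefore /var) really did land on a logical volume. Here's a small sketch that parses `lsblk` output; `has_lvm_root` is a made-up helper that only looks at the TYPE and MOUNTPOINT columns.

```shell
# has_lvm_root: read "TYPE MOUNTPOINT" pairs on stdin (the shape produced
# by `lsblk -rn -o TYPE,MOUNTPOINT`) and succeed if "/" is on an LVM volume.
has_lvm_root() {
    awk '$1 == "lvm" && $2 == "/" { found = 1 } END { exit !found }'
}

if command -v lsblk >/dev/null 2>&1; then
    if lsblk -rn -o TYPE,MOUNTPOINT | has_lvm_root; then
        echo "root filesystem is on a logical volume"
    else
        echo "root filesystem is NOT on a logical volume"
    fi
fi
```

If you created a separate /var LV instead, swap the "/" in the awk expression for "/var".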

4. Install Microshift

Installing Microshift on RHEL is actually really easy. The install instructions are very well documented here, but in broad strokes you just need a copy of your pull secret saved at /etc/crio/openshift-pull-secret with 600 perms and root:root ownership, and then you need to install the microshift RPM. Here are the quick and dirty instructions to do that:

# enable repo and install microshift

sudo subscription-manager repos \
    --enable rhocp-4.13-for-rhel-9-$(uname -m)-rpms \
    --enable fast-datapath-for-rhel-9-$(uname -m)-rpms

sudo dnf install -y microshift

# install your pull secret

sudo cp $HOME/openshift-pull-secret /etc/crio/openshift-pull-secret

sudo chown root:root /etc/crio/openshift-pull-secret

sudo chmod 600 /etc/crio/openshift-pull-secret

# configure firewalld

sudo firewall-cmd --permanent --zone=trusted --add-source=10.42.0.0/16

sudo firewall-cmd --permanent --zone=trusted --add-source=169.254.169.1

sudo firewall-cmd --reload

# enable microshift

sudo systemctl enable --now microshift

And that's about all there is to it. If needed, before starting the microshift service for the first time, you can customize your microshift node configuration by editing the file /etc/microshift/config.yaml. Just make sure that if you change things like the IP ranges, you update your firewall rules to match. See here for more details.

And you're done! Enjoy using Microshift.
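If the service doesn't come up, the pull secret is the first thing I'd look at. Here's a hedged sketch of a post-install check; `perms_of` is a hypothetical helper, and it uses the GNU `stat -c` syntax that RHEL provides.

```shell
# perms_of FILE: print "MODE OWNER:GROUP", e.g. "600 root:root".
# Uses GNU stat format specifiers (%a mode, %U owner, %G group).
perms_of() {
    stat -c '%a %U:%G' "$1"
}

secret=/etc/crio/openshift-pull-secret
if [ -e "$secret" ]; then
    if [ "$(perms_of "$secret")" = "600 root:root" ]; then
        echo "pull secret permissions look right"
    else
        echo "pull secret is $(perms_of "$secret"), expected 600 root:root"
    fi
    # Prints active, inactive, failed, etc. without aborting the script.
    systemctl is-active microshift || true
fi
```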

A couple things to note - Microshift lacks a lot of the functionality of Openshift, and you won't be able to do things like create custom CRDs to override security settings, so make sure you're using unprivileged containers, etc. But other than that, Microshift is just a really small extension/subset of the Openshift platform. You can even manage it with ACM. Technically you could install the ACS agents on it too, but given the resource constraints on an RPi I doubt the pods would even be able to spin up.

Next Steps:

The next logical step from here would be to capture your current RHEL install using image builder (it's a plugin for cockpit) to build a true RHEL for Edge (aka; ostree) image, see here for more details. But that's a project for another day.