Putting VMs on the same VLAN as your OpenShift Cluster

Lately I've been trying to simplify a lot of things in my lab to reduce the administrative burden and save myself time and mental bandwidth, especially when it comes to networking. Coming from a virtualization background, I was used to having tons of VLANs for everything: a management network, a live migration network, at least two VLANs for storage traffic for multipathing, multiple networks for VM traffic, a separate network for out-of-band management, etc. etc. etc...

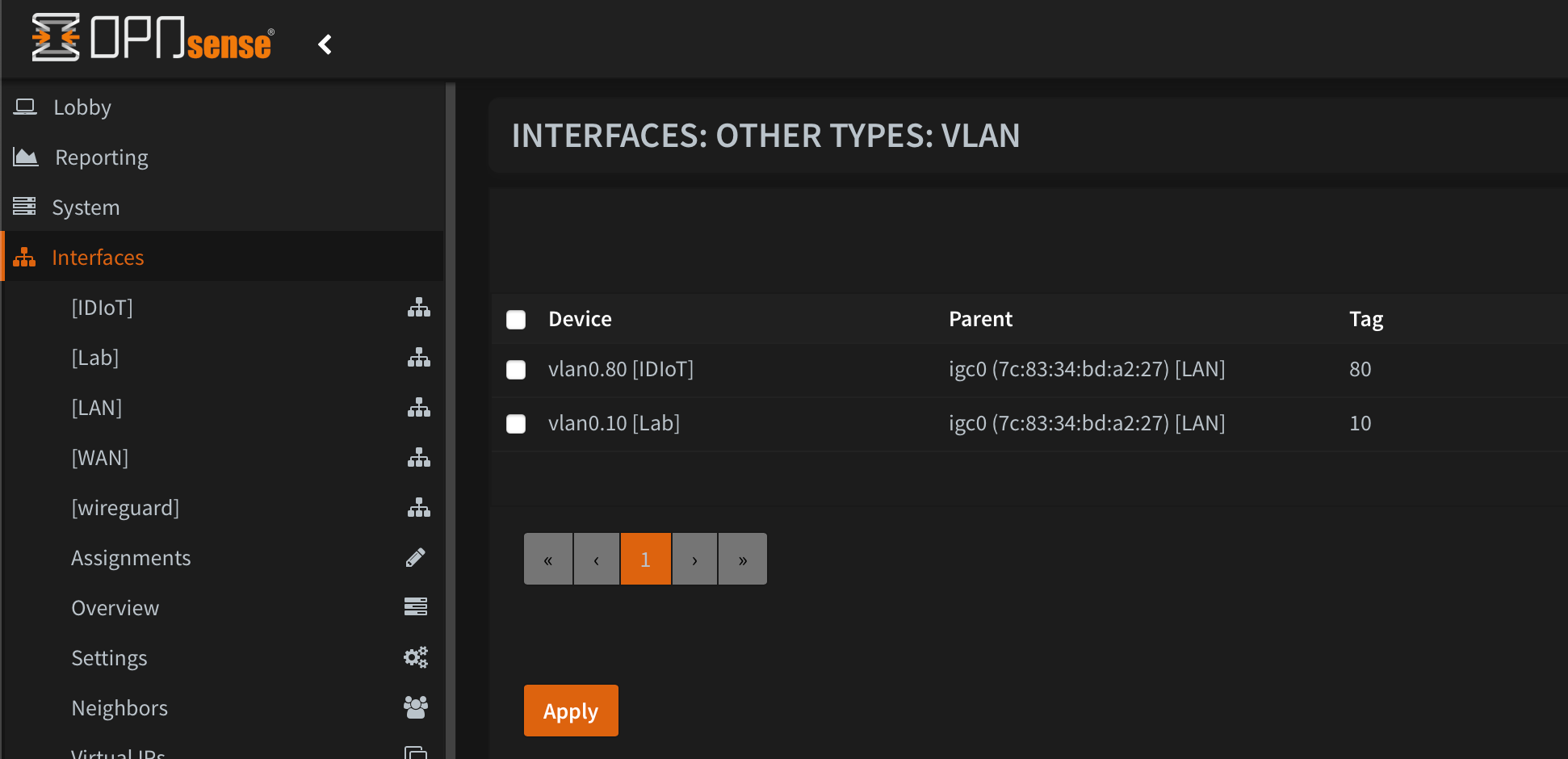

These days, I like to keep things as simple as possible. My home network has just three VLANs: one for my LAN, one for my lab, and one that's firewalled away from the rest of the network for all the IoT/smart devices/streaming boxes that I don't want snooping on me.

However, this created a problem for my OpenShift cluster: how do I put VMs on the lab VLAN? There are basically two approaches, each with its own pros and cons. By default, when you deploy OpenShift Virtualization, the only networking option for your VMs is the "Pod Network", which is exactly what it sounds like. It places your VMs on the same network as your pods, and you interact with your VMs the same way you do with pods: by exposing services.

Services can be one of a few types:

- ClusterIP - typically used for internal traffic within your cluster. It can also be exposed externally as an HTTP/S service via a route or ingress, but in my opinion that isn't very useful for VMs. Why host a web server on a VM when you could just run it in a pod?

- NodePort - exposes a TCP or UDP service on a high port (30000-32767 by default, assigned at random unless you pick one) on every node in the cluster. This can be useful for things like SSH or RDP, but having to keep track of non-standard port numbers makes it cumbersome.

- LoadBalancer - exposes a TCP or UDP service on its own external IP address and port. This is a lot closer to how a typical VM administrator thinks about reaching VMs, but it still has some drawbacks due to the nature of Kubernetes networking.
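To make the LoadBalancer option concrete, here's a minimal sketch of a Service that exposes SSH on a VM. It assumes the VM's template carries the label `app: my-vm`. KubeVirt propagates labels from the VM's `spec.template.metadata.labels` onto the virt-launcher pod, which is what the selector actually matches. The names `my-vm`, `my-vm-ssh`, and `my-vms` are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-vm-ssh          # hypothetical Service name
  namespace: my-vms        # hypothetical namespace the VM lives in
spec:
  type: LoadBalancer       # NodePort would instead publish a high port on every node
  selector:
    app: my-vm             # hypothetical label set in the VM's spec.template.metadata.labels
  ports:
  - name: ssh
    protocol: TCP
    port: 22               # port on the external IP
    targetPort: 22         # port sshd listens on inside the VM
```

Note that the VM is still on the pod network here. Only port 22 is forwarded to it.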

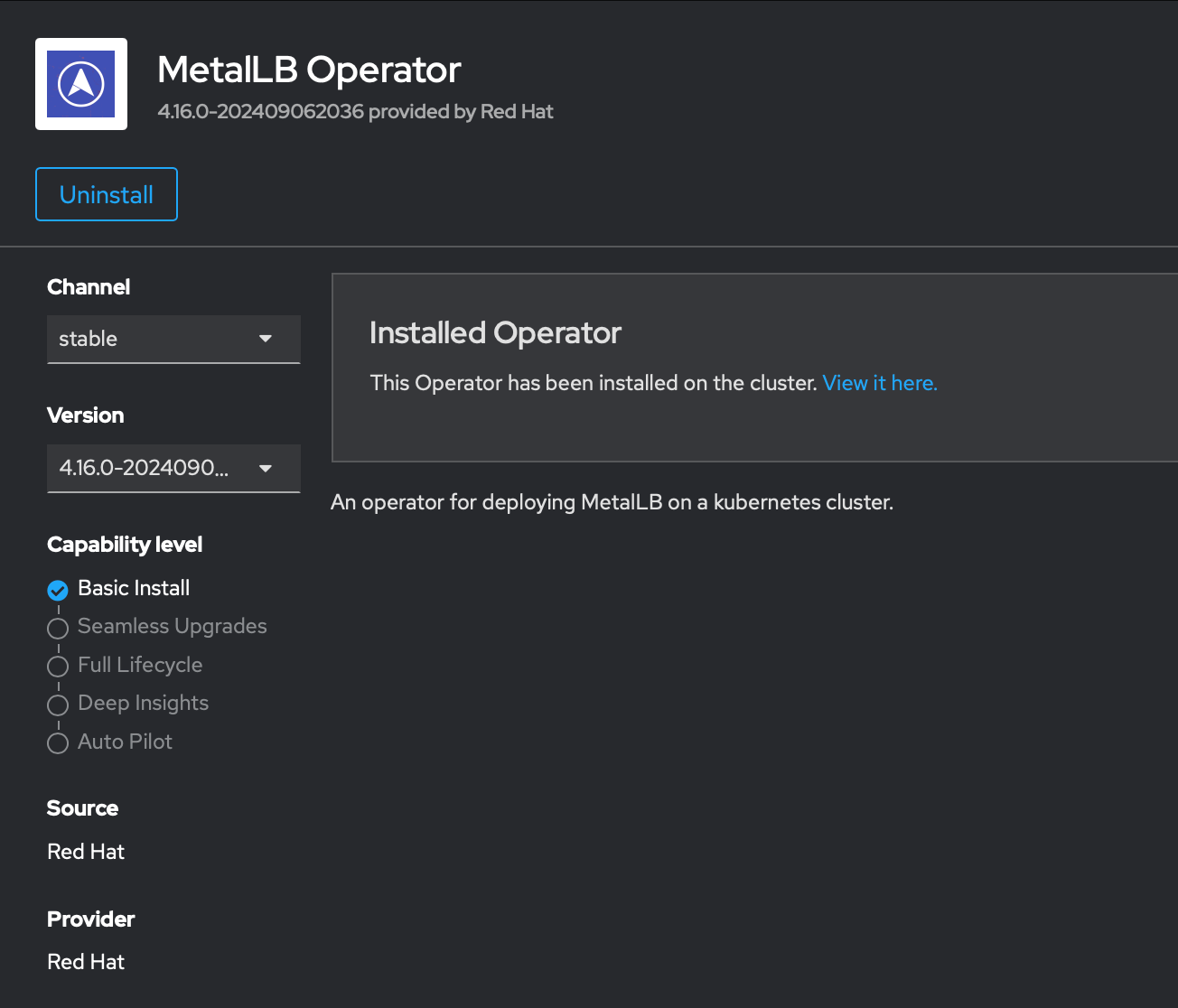

With regard to LoadBalancer services, these are not supported out of the box for on-prem deployments. In the cloud, the provider's API hands out external IP addresses on demand from a pool (which is absolutely NOT free). In a non-cloud OpenShift cluster, if you want to use LoadBalancer services, you have two options. Option A is to manually set the external IP address to one of the IP addresses of the nodes or VIPs of your cluster. That is not a great idea since, for instance, port 22/TCP (SSH) is already in use on all of the nodes in your cluster. Option B is to use the MetalLB Operator, which provides a cloud-like API that hands out addresses from a pool you define as the external IPs of your LoadBalancer services.
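For option B, a sketch of the MetalLB resources might look like the following. It assumes the operator is installed in the `metallb-system` namespace and uses layer 2 mode, in which one node answers ARP for each assigned address. The pool name `lab-pool` and the 192.168.50.200-192.168.50.220 range are hypothetical placeholders for spare addresses on your lab VLAN.

```yaml
# Instance that tells the MetalLB Operator to deploy MetalLB itself
apiVersion: metallb.io/v1beta1
kind: MetalLB
metadata:
  name: metallb
  namespace: metallb-system
---
# Pool of addresses MetalLB may hand out to LoadBalancer services
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: lab-pool                      # hypothetical pool name
  namespace: metallb-system
spec:
  addresses:
  - 192.168.50.200-192.168.50.220     # hypothetical free range on the lab VLAN
---
# Announce addresses from the pool via ARP (layer 2 mode)
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: lab-l2                        # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
  - lab-pool
```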

That being said, even with a load balancer "on your node network", the VM itself still isn't actually on that network. A given port is simply NATed out from the pod network, which may be adequate depending on your use case. However, plenty of workloads simply do not work with this kind of NAT in place, not least things like Active Directory and IdM. For services that need forward and reverse DNS resolution, where hostnames and other DNS records must resolve to the actual IP address of the server, even LoadBalancer services will not help you. For that, you need to bridge your VMs onto the actual network.

If your OpenShift nodes happen to have more than one NIC, you can simply trunk the same VLAN into the second NIC and bridge your VMs off of that. However, if your nodes only have a single NIC (like mine), things get a little more complicated: you cannot attach VMs to the 'br-ex' interface directly, and you can't create another bridge on the same VLAN as your nodes. So what is one to do if you want a "flat" network?
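For the two-NIC case, a sketch of a NodeNetworkConfigurationPolicy that builds a Linux bridge on the second NIC might look like this. It relies on the Kubernetes NMState Operator and assumes that the second NIC is named `ens4` on every node and receives the lab VLAN untagged. The bridge name `br1` and the policy name are hypothetical. A NetworkAttachmentDefinition pointing at `br1` would then let VMs attach to it.

```yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br1-ens4-policy      # hypothetical policy name
spec:
  desiredState:
    interfaces:
    - name: br1              # hypothetical bridge name
      type: linux-bridge
      state: up
      ipv4:
        enabled: false       # the node itself gets no address on this bridge
      bridge:
        options:
          stp:
            enabled: false
        port:
        - name: ens4         # assumed name of the second NIC
```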

In the past, your only two options for VM networking on OpenShift were pod networking or attaching to a Linux bridge. Thankfully, recent versions of OpenShift give us a bit more flexibility. I don't claim to understand all the intricacies of Kubernetes networking (because I really don't...), but there are two newer options for VMs, namely "layer 2 overlay" and "localnet". As you might imagine, localnet is exactly what I'm after: putting VMs on my local network.

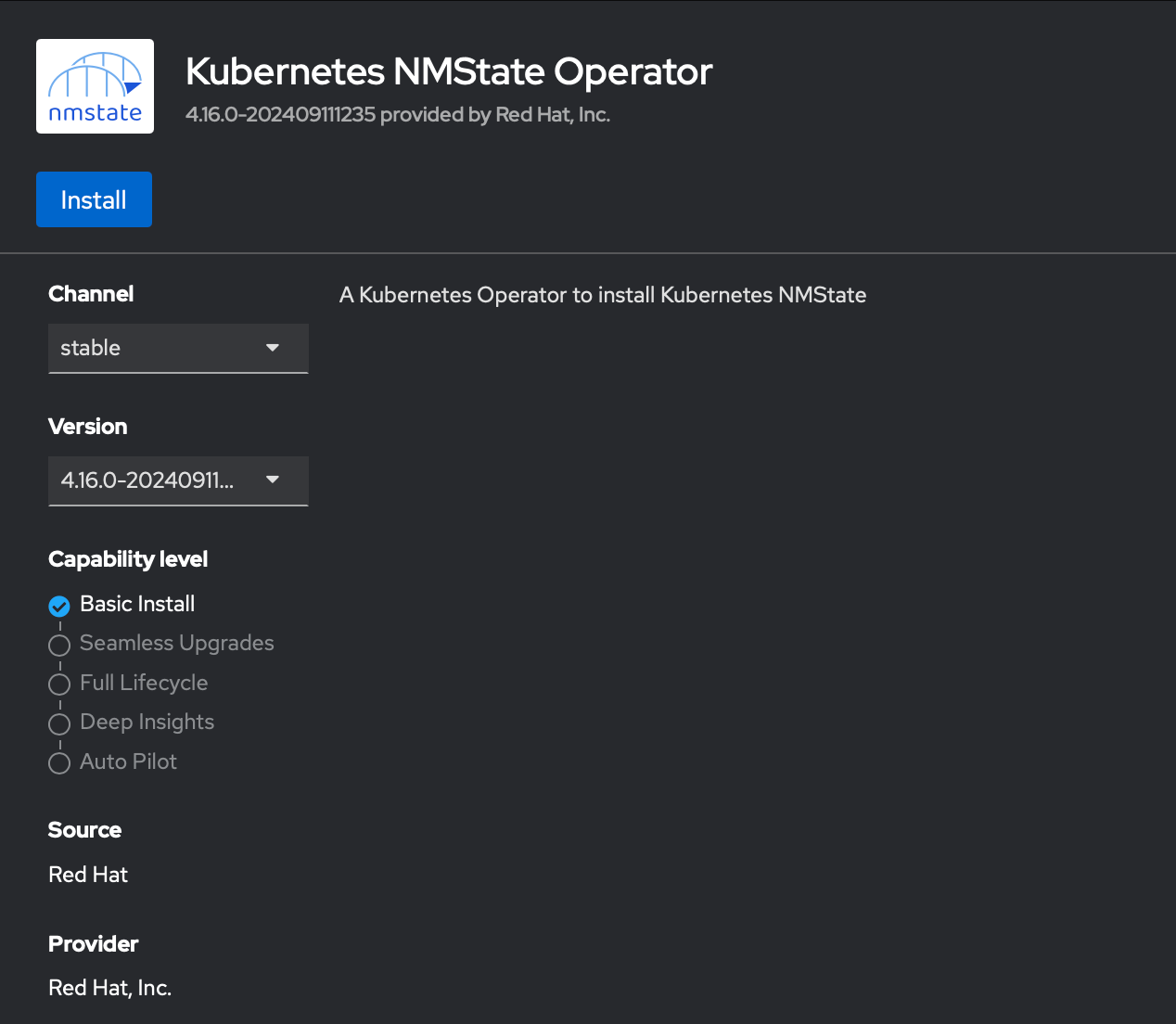

To do so, you'll first need to install and configure the Kubernetes NMState Operator. The install is very straightforward: you install the operator and then create an NMState instance. Once you do that, it adds a few new custom resource definitions, including NodeNetworkConfigurationPolicy ('nncp'). The other piece we need, the NetworkAttachmentDefinition ('net-attach-def'), comes from Multus, which OpenShift already ships with.
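If you prefer the CLI to the OperatorHub console, the install plus the NMState instance can be sketched as the standard Operator Lifecycle Manager objects below. The `stable` channel and the `redhat-operators` catalog source are assumptions, so check what your cluster's OperatorHub actually lists before applying.

```yaml
# Namespace the operator runs in
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-nmstate
---
# OperatorGroup scoping the operator to its own namespace
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: openshift-nmstate
  namespace: openshift-nmstate
spec:
  targetNamespaces:
  - openshift-nmstate
---
# Subscription that installs the operator from the catalog
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: kubernetes-nmstate-operator
  namespace: openshift-nmstate
spec:
  channel: stable                    # assumed channel
  installPlanApproval: Automatic
  name: kubernetes-nmstate-operator
  source: redhat-operators           # assumed catalog source
  sourceNamespace: openshift-marketplace
---
# The NMState instance; creating it deploys the handlers that apply NNCPs
apiVersion: nmstate.io/v1
kind: NMState
metadata:
  name: nmstate
```

Apply the Subscription first and wait for the operator to finish installing. Only then create the NMState instance, since its CRD comes from the operator.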

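Here are the two manifests I used. The first is a NodeNetworkConfigurationPolicy that maps a localnet network named 'machine-net' onto the existing 'br-ex' bridge. The second is a NetworkAttachmentDefinition that exposes that localnet network to VMs: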

---

apiVersion: nmstate.io/v1

kind: NodeNetworkConfigurationPolicy

metadata:

name: ovs-bridge-mapping-machine-net

spec:

desiredState:

ovn:

bridge-mappings:

- localnet: machine-net

bridge: br-ex

state: present

---

apiVersion: k8s.cni.cncf.io/v1

kind: NetworkAttachmentDefinition

metadata:

annotations:

description: Attach to machine network segment

name: machine-net

namespace: default

spec:

config: |-

{

"cniVersion": "0.3.1",

"name": "machine-net",

"type": "ovn-k8s-cni-overlay",

"topology": "localnet",

"netAttachDefName": "default/machine-net",

"ipam": {}

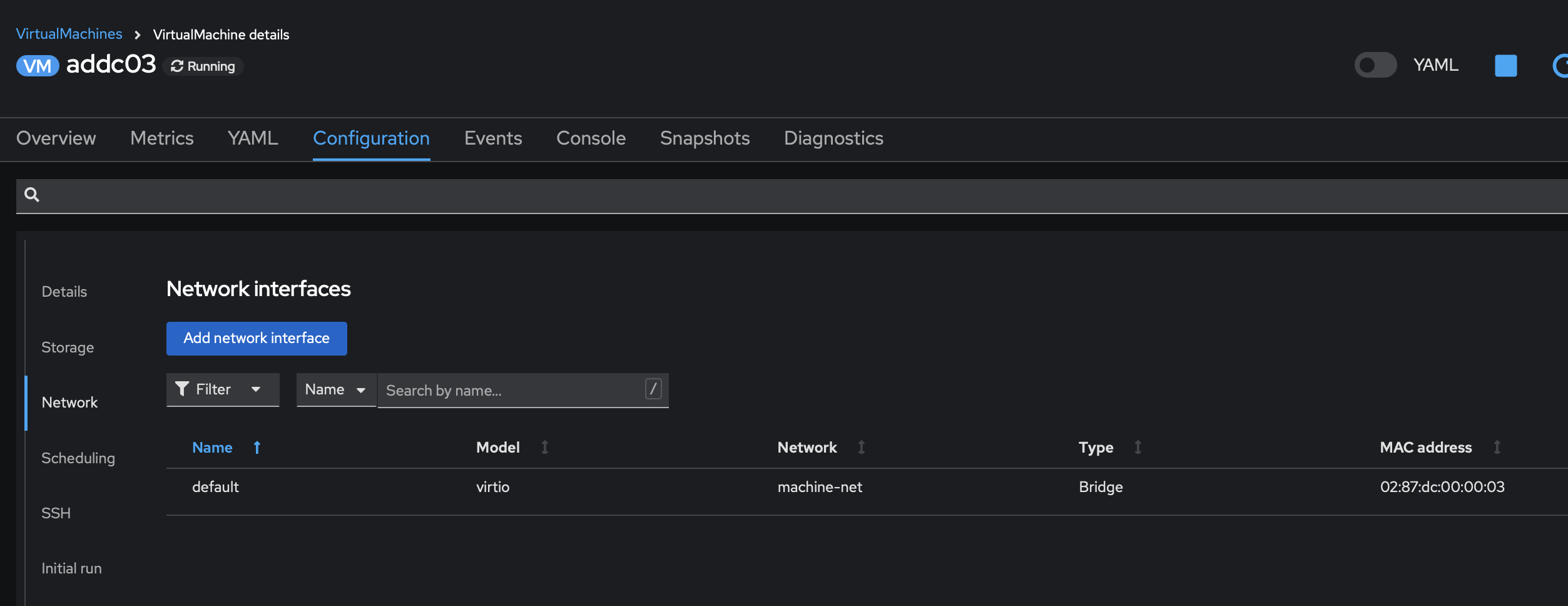

}Then you can attach a VM to your local network by setting it's NIC to use the 'machine-net' bridge

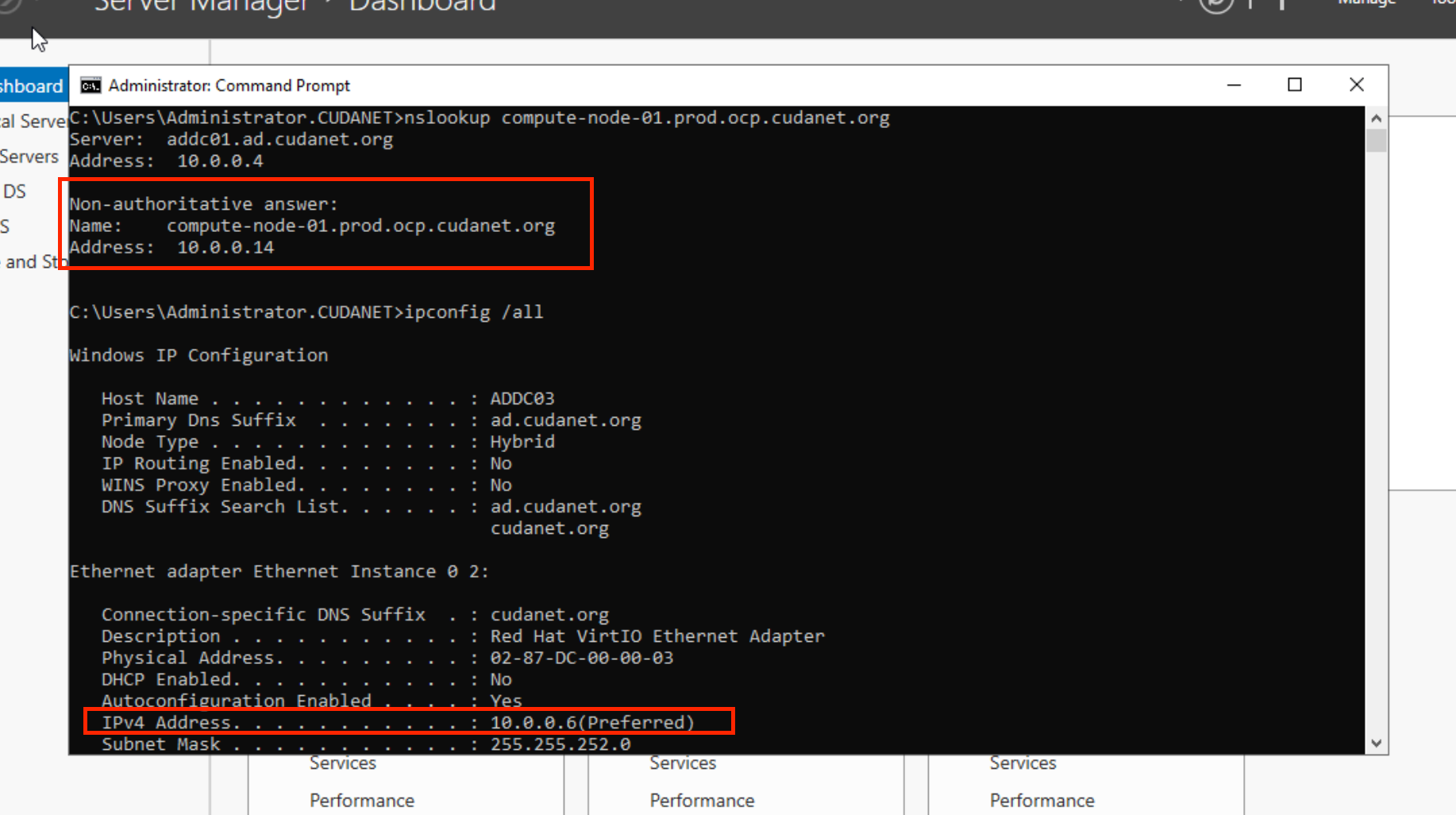

As we can see, my VM has an IP address on the same subnet as my OpenShift nodes.
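If you'd rather define the VM in YAML than in the console, the same NIC attachment looks roughly like the fragment below. The VM name `my-vm` and the interface name are hypothetical, and the disks, memory, and other fields a complete VirtualMachine needs are omitted. For a localnet network the interface uses the `bridge` binding, and `networkName` references the NetworkAttachmentDefinition as `namespace/name`.

```yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: my-vm                             # hypothetical VM name
spec:
  template:
    spec:
      domain:
        devices:
          interfaces:
          - name: machine-net             # must match the network name below
            bridge: {}                    # bridge binding, used for localnet networks
      networks:
      - name: machine-net
        multus:
          networkName: default/machine-net  # the NetworkAttachmentDefinition above
```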