Openshift, virt-who and Satellite

There are a lot of benefits to running your VMs and containers on a single platform, but from an economic buyer's standpoint, one of the most compelling reasons to invest in Openshift Virtualization is that it comes with entitlements for unlimited RHEL guests.

The way this works is very similar to how RHEL for Virtual Datacenters subs work. Essentially, the hypervisor (or in the case of Openshift, the compute node) is entitled to run unlimited RHEL guests. Now, there are actually a couple of different ways to subscribe those systems on Openshift. You could just create an activation key for your Openshift Bare Metal Node subs and use that to register your RHEL guests directly to RHN, where they can be viewed and managed on access.redhat.com or, coming soon, console.redhat.com.

However, a lot of customers leverage Satellite for managing their on-prem footprint and entitle their VMs using VDC (Virtual Data Center) subs. This requires you to configure a service called virt-who, which handles entitlements and reports guest VM status to Satellite. This is especially useful for those who have air-gapped environments. Setting up virt-who for traditional hypervisors is pretty straightforward and well documented. Virt-who does have a mechanism for supporting Openshift Virtualization, but this is a brave new world, and configuring virt-who to report your bare metal Openshift compute nodes has a few more gotchas along the way that aren't entirely obvious, even to a veteran Satellite administrator.

Preparing your cluster for virt-who

Although the concepts of setting up virt-who on Openshift aren't terribly different from other hypervisors, the steps you take to get there are quite different, which honestly is to be expected. In broad strokes, to configure virt-who you create a service account with limited rights to perform specific tasks (specifically, reading hypervisor and VM status and not much else). In order for virt-who to continue working as expected, that service account should have credentials that do not expire.

Create a service account

On your OCP cluster perform the following:

oc new-project virt-who

oc create serviceaccount virt-who

oc create clusterrole lsnodes --verb=list --resource=nodes

oc create clusterrole lsvmis --verb=list --resource=vmis

oc adm policy add-cluster-role-to-user lsnodes system:serviceaccount:virt-who:virt-who

oc adm policy add-cluster-role-to-user lsvmis system:serviceaccount:virt-who:virt-who

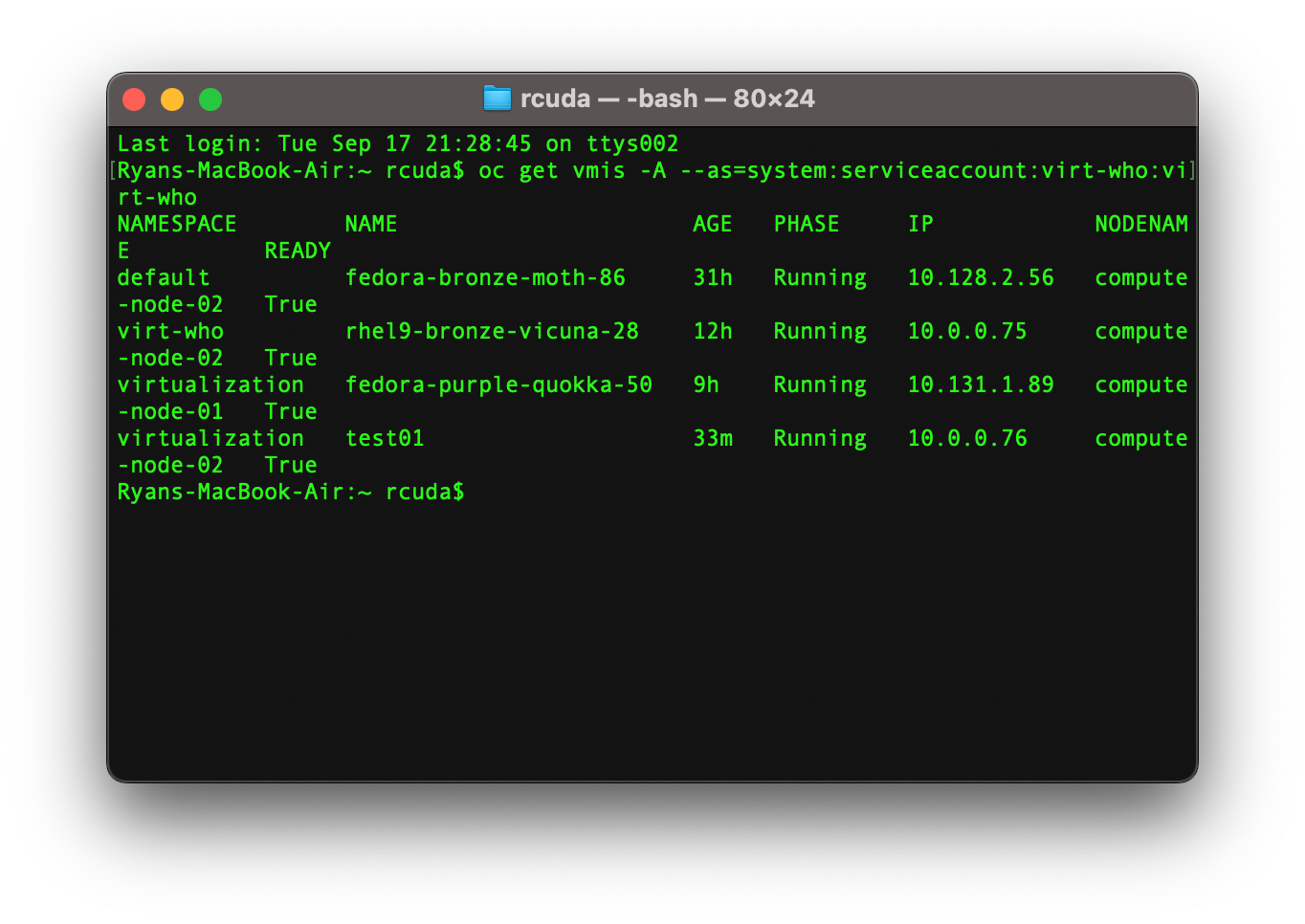

Then verify that the service account you created can list all running VMs in your cluster:

oc get vmi -A --as=system:serviceaccount:virt-who:virt-who
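If you'd rather get a plain yes/no answer than a table of VMs, `oc auth can-i` can check each permission through the same impersonation. This is just a minimal sketch: the function name `check_virt_who_rbac` is something I made up for illustration, and it assumes the project and service account names used above.

```shell
# check_virt_who_rbac: hypothetical helper, not part of oc or virt-who.
# Prints what the virt-who service account is (and is not) allowed to do.
check_virt_who_rbac() {
  sa="system:serviceaccount:virt-who:virt-who"
  # Nodes are cluster-scoped, so no namespace flag is needed.
  echo "list nodes:  $(oc auth can-i list nodes --as="$sa")"
  # VMIs are namespaced, so ask about every namespace.
  echo "list vmis:   $(oc auth can-i list vmis --all-namespaces --as="$sa")"
  # A destructive verb should come back "no" if the account is scoped tightly.
  echo "delete vmis: $(oc auth can-i delete vmis --all-namespaces --as="$sa")"
}
```

Run `check_virt_who_rbac` while logged in as a cluster admin. If the roles were bound as shown above, I'd expect the two list checks to say yes and the delete check to say no.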

Generate a non-expiring token

Next, we'll need to create a non-expiring token for the service account to authenticate to the Kubernetes API.

cat << EOF > virt-who-token.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: virt-who-token
  annotations:
    kubernetes.io/service-account.name: virt-who
type: kubernetes.io/service-account-token
EOF

oc apply -f virt-who-token.yaml -n virt-who

Then extract your service account token from the secret you just created, e.g.:

oc get secret virt-who-token -n virt-who -o jsonpath='{.data.token}' | base64 -d

This will output a very long string. Copy this token to be used in the next step.
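If you want to convince yourself that the token really doesn't expire, you can decode its payload and look for an expiry claim. This is a minimal sketch, assuming the token is a standard three-part JWT and that your `base64` accepts `-d`. `jwt_payload` is a name I made up for illustration. As far as I know, a token generated from a Secret like the one above carries no `exp` claim, whereas the short-lived tokens do.

```shell
# jwt_payload TOKEN: hypothetical helper that prints the decoded JSON payload of a JWT.
jwt_payload() {
  # The payload is the second dot-separated field; convert base64url to plain base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing padding, so restore it before decoding.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}
```

Paste your token in as the argument, e.g. `jwt_payload "<your token>"`, and check that the JSON it prints has no `exp` field.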

Create a kubeconfig file

touch kubeconfig

export KUBECONFIG=kubeconfig

oc login https://api.prod.ocp.cudanet.org:6443 --token=<your token>

Then, with your service-account-specific kubeconfig generated, verify that you can list VMs and nodes:

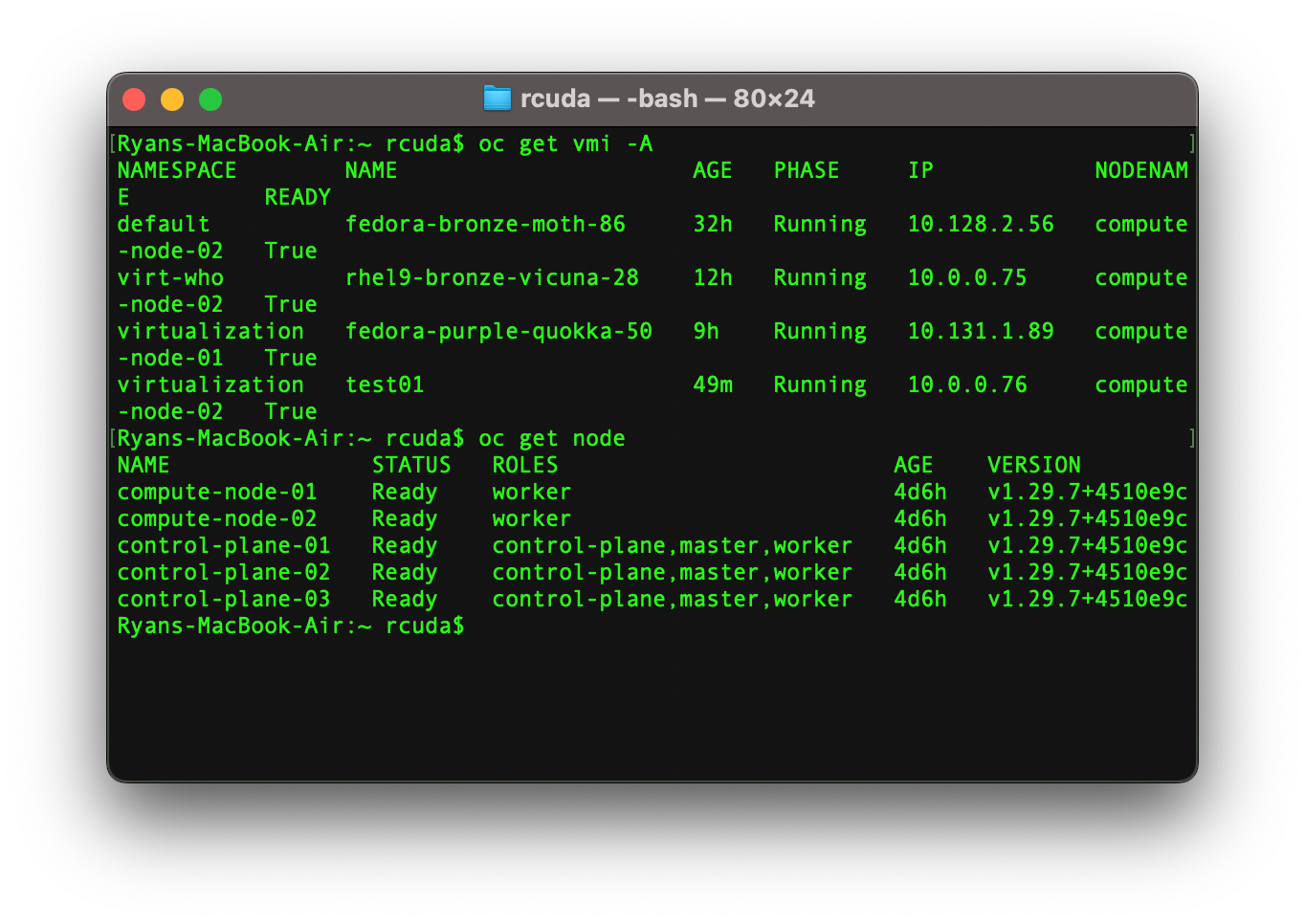

oc get vmi -A

oc get node

Your kubeconfig should look something like this:

apiVersion: v1
clusters:
- cluster:
    server: https://api.prod.ocp.cudanet.org:6443
    certificate-authority: /opt/cacert.pem
  name: api-prod-ocp-cudanet-org:6443
contexts:
- context:
    cluster: api-prod-ocp-cudanet-org:6443
    namespace: openshift-virtualization-os-images
    user: system:serviceaccount:virt-who:virt-who/api-prod-ocp-cudanet-org:6443
  name: openshift-virtualization-os-images/api-prod-ocp-cudanet-org:6443/system:serviceaccount:virt-who:virt-who
- context:
    cluster: api-prod-ocp-cudanet-org:6443
    namespace: virt-who
    user: system:serviceaccount:virt-who:virt-who/api-prod-ocp-cudanet-org:6443
  name: virt-who/api-prod-ocp-cudanet-org:6443/system:serviceaccount:virt-who:virt-who
current-context: openshift-virtualization-os-images/api-prod-ocp-cudanet-org:6443/system:serviceaccount:virt-who:virt-who
kind: Config
preferences: {}
users:
- name: system:serviceaccount:virt-who:virt-who/api-prod-ocp-cudanet-org:6443
  user:
    token: <your-token>

We've verified that our kubeconfig works, but we're still not done. In order for Satellite to be able to authenticate to your Kubernetes API, it's also going to need your API root CA and any intermediate CAs in your certificate chain.

Extract your certificate chain

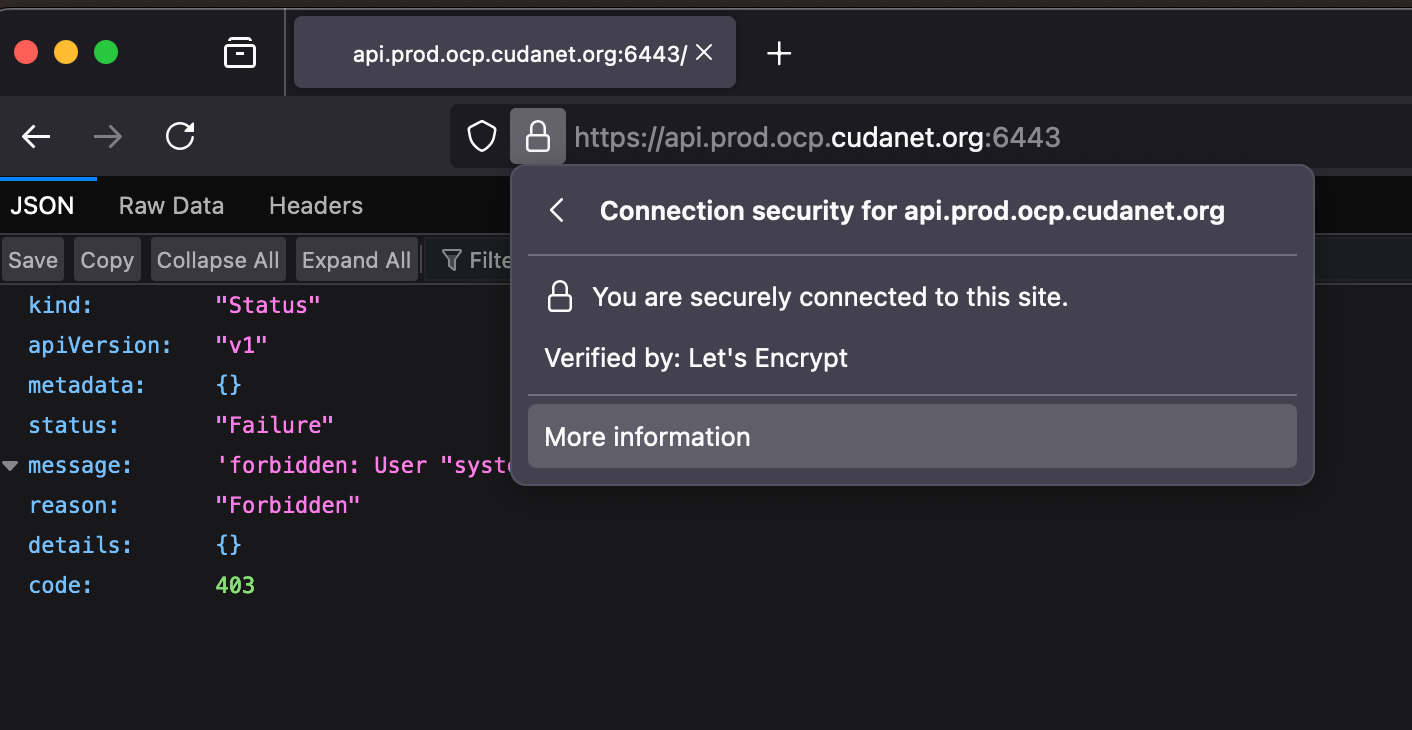

Personally, I use the cert-manager operator with Letsencrypt + Cloudflare DNS to manage my ingress and API certificates on Openshift, but regardless of whether you're using a publicly trusted certificate authority like Letsencrypt, your own internal CA like RHIDM, or even just the self-signed certificate that Openshift ships with out of the box, there are plenty of ways to get your certificate chain. However, in my opinion the easiest way to extract your SSL chain is using Firefox.

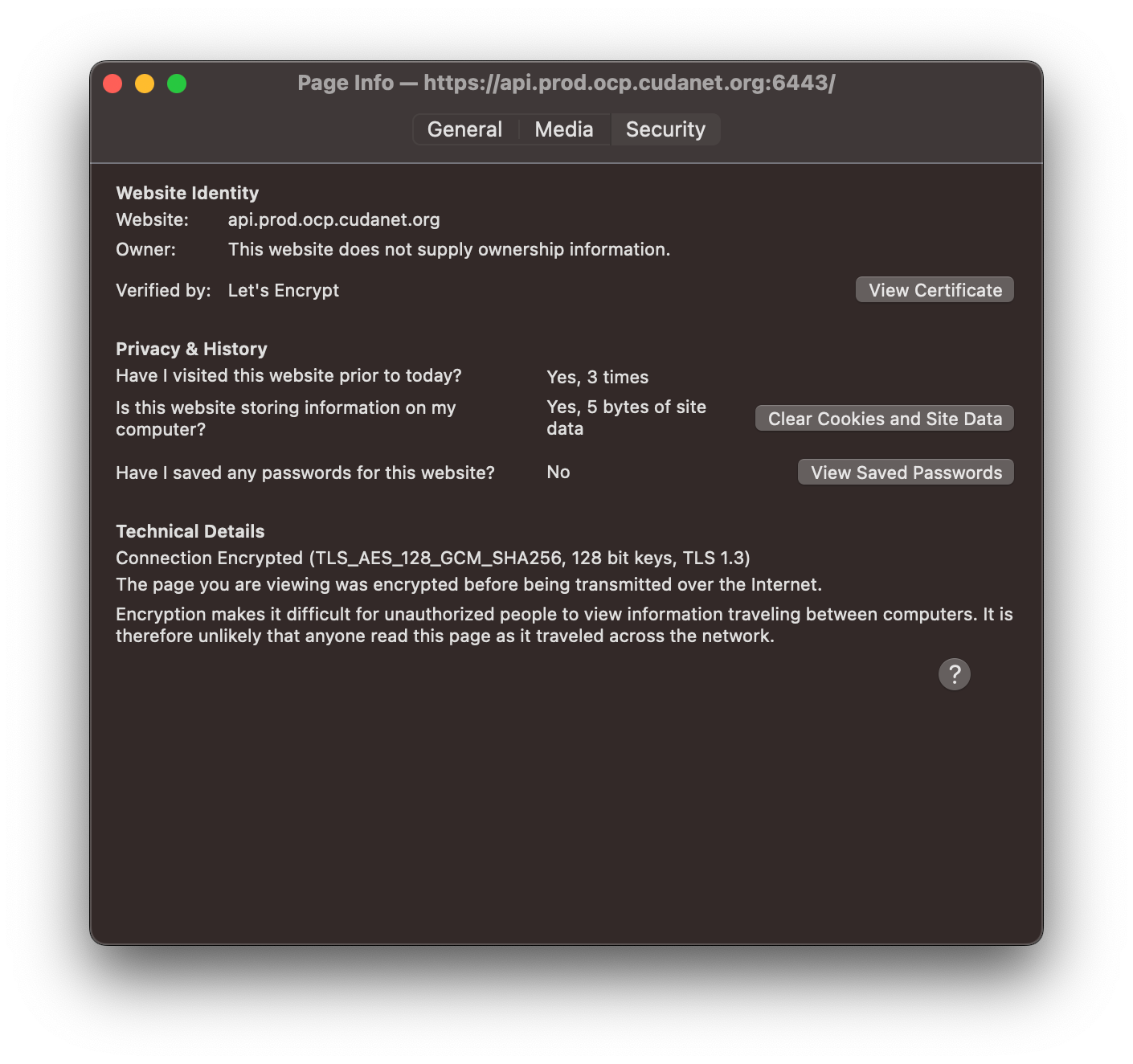

Open Firefox and navigate to your API URL, e.g. https://api.prod.ocp.cudanet.org:6443

Click on the lock icon and click on 'More information'

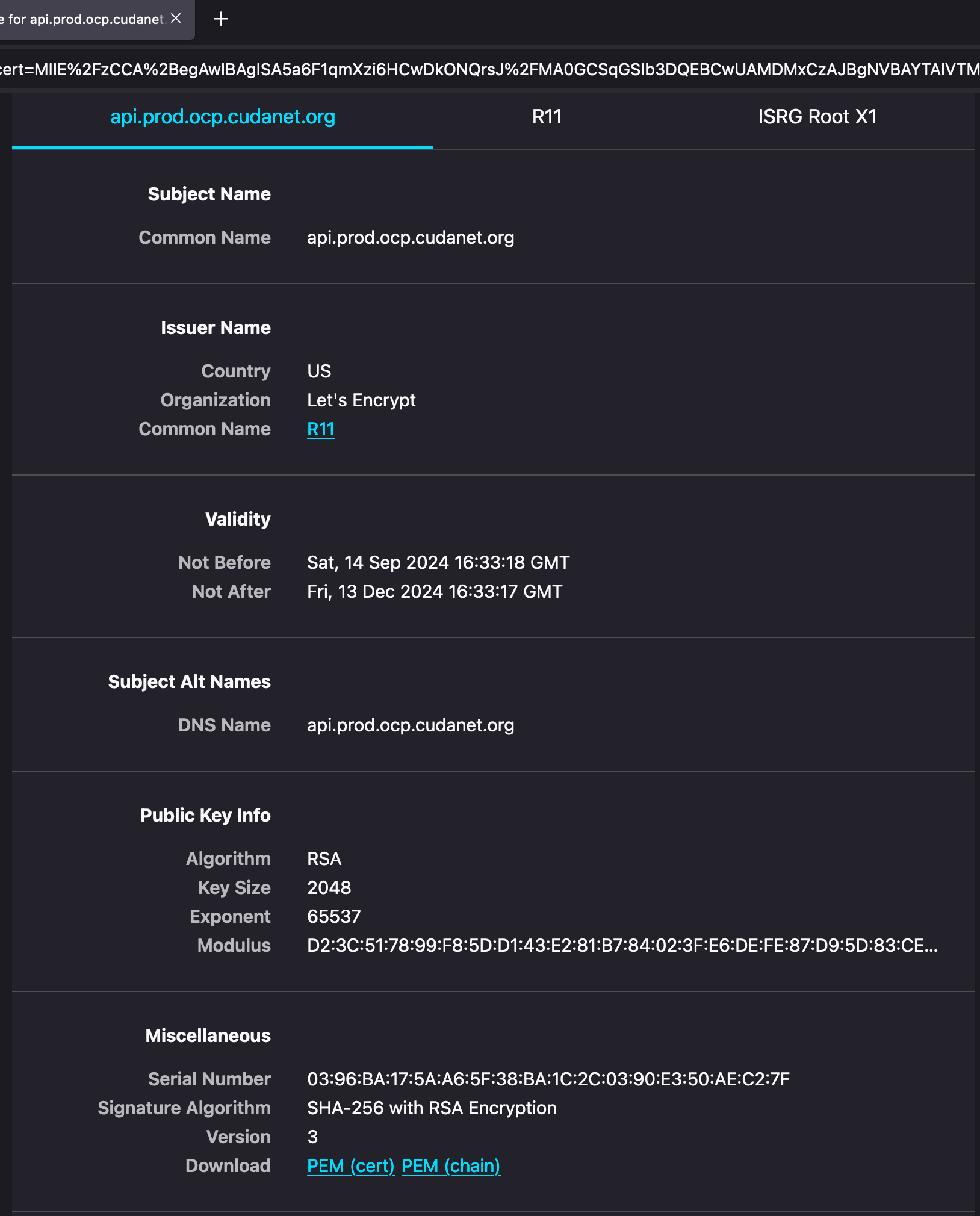

Click on 'View Certificate'

Scroll down and click on 'PEM (chain)' to download your full certificate chain.

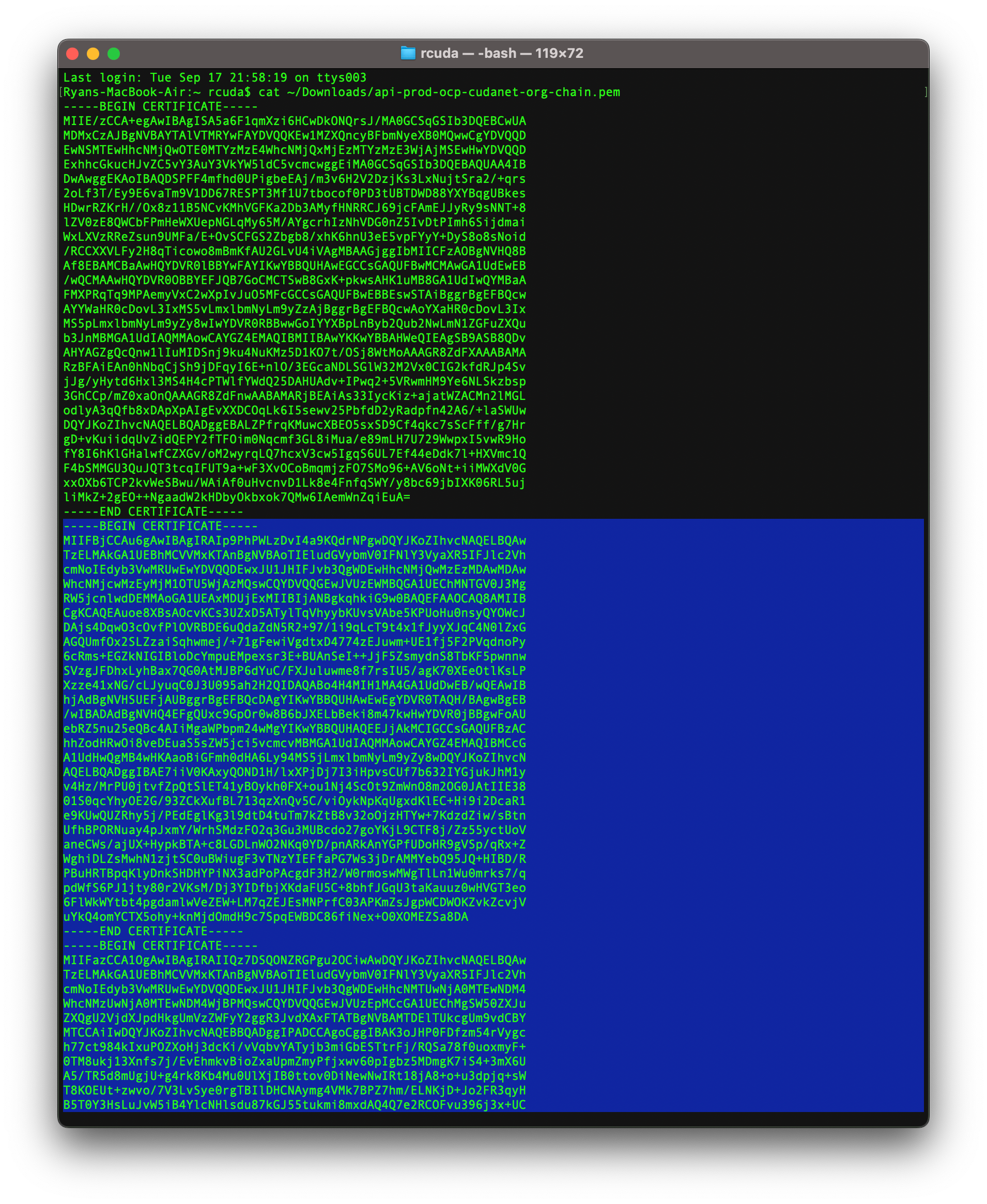

Then open your certificate with a text editor. The layout of your certificate chain from top to bottom should be API cert > Intermediate CA > Root CA.

Copy the intermediate and root CAs only (not including the API cert) and save them to a new file, e.g. cacert.pem
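If you'd rather not hand-edit the file, the same trimming can be scripted. This is a minimal sketch, assuming the downloaded chain is ordered leaf first, as described above, and contains only PEM blocks. `strip_leaf_cert` and the input file name `chain.pem` are names I made up for illustration.

```shell
# strip_leaf_cert CHAIN_FILE: hypothetical helper that prints every certificate
# in the chain except the first one (the API/leaf certificate).
strip_leaf_cert() {
  # Count BEGIN markers; only print lines once we are past the first certificate.
  awk '/-----BEGIN CERTIFICATE-----/ { n++ } n > 1' "$1"
}
```

For example, `strip_leaf_cert chain.pem > cacert.pem` should leave you with just the intermediate and root CAs. It's still worth opening the result to confirm the order matches what you expect.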

Copy the files to your Satellite server

It's certainly not required that you run virt-who on your Satellite server. You can of course run virt-who on a separate system that is configured to connect to your Satellite server but... why? That's just one more server you have to manage. Personally, I prefer to just run virt-who on Satellite.

Copy your generated kubeconfig file and your cacert.pem to your Satellite server, e.g.:

scp -r kubeconfig cacert.pem root@satl01:~/

You can store them in any arbitrary location, e.g. /root/; however, I chose to store them in /opt/. Just be mindful of their absolute paths.

Now, edit your kubeconfig file and add a line with the absolute path to the cacert.pem file, e.g.:

apiVersion: v1
clusters:
- cluster:
    server: https://api.prod.ocp.cudanet.org:6443
    certificate-authority: /opt/cacert.pem
  name: api-prod-ocp-cudanet-org:6443
contexts:
...

Generate a virt-who config

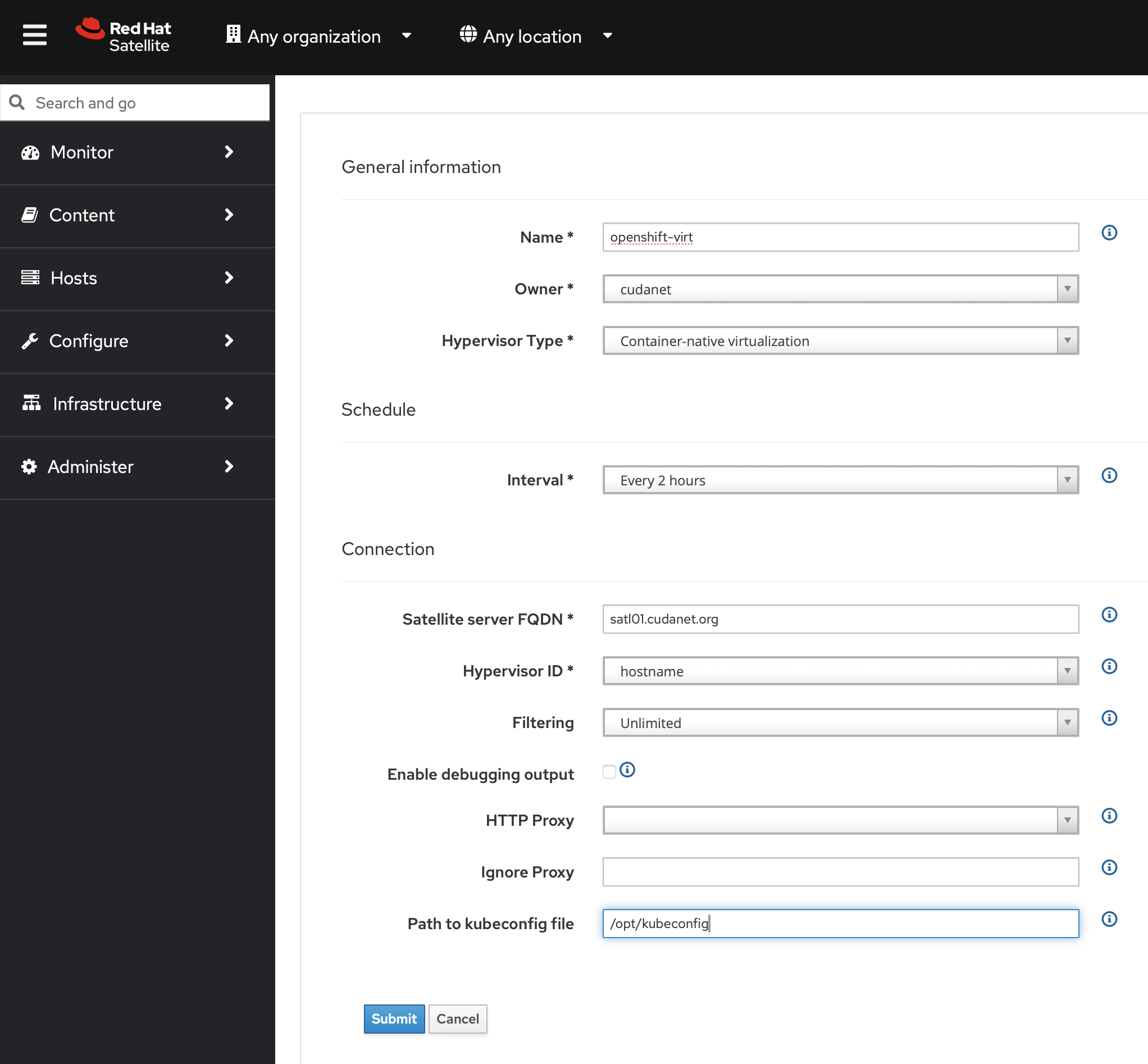

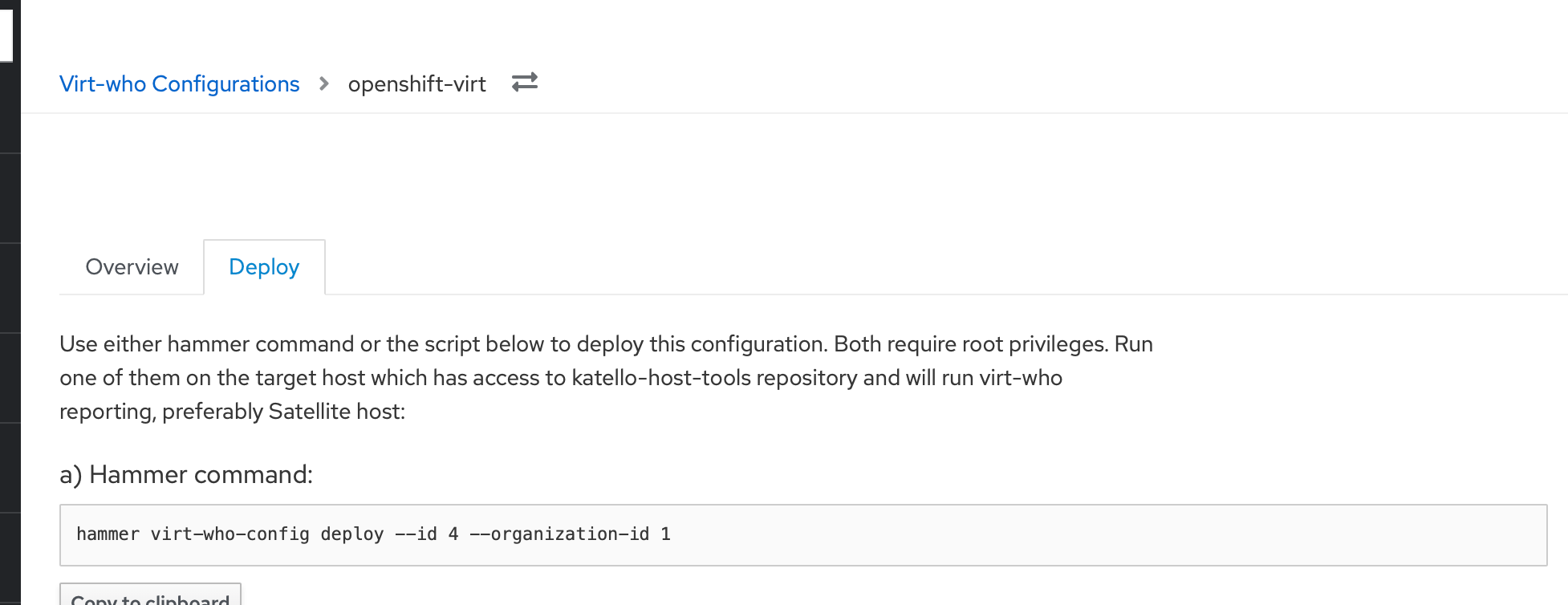

I prefer to use the web UI to create a virt-who config and apply it using hammer, since that properly configures your Satellite server, makes sure the appropriate packages are installed, and generates a systemd unit for you. On your Satellite server, navigate to Infrastructure > Virt-Who Configs and create a new configuration like this.

Make sure to set the hypervisor type to 'container-native virtualization' (a.k.a. Openshift Virt) and specify the absolute path to your kubeconfig, e.g. /opt/kubeconfig. Click submit.

Then copy the hammer command that it outputs on the next screen and run it as root on your Satellite server.

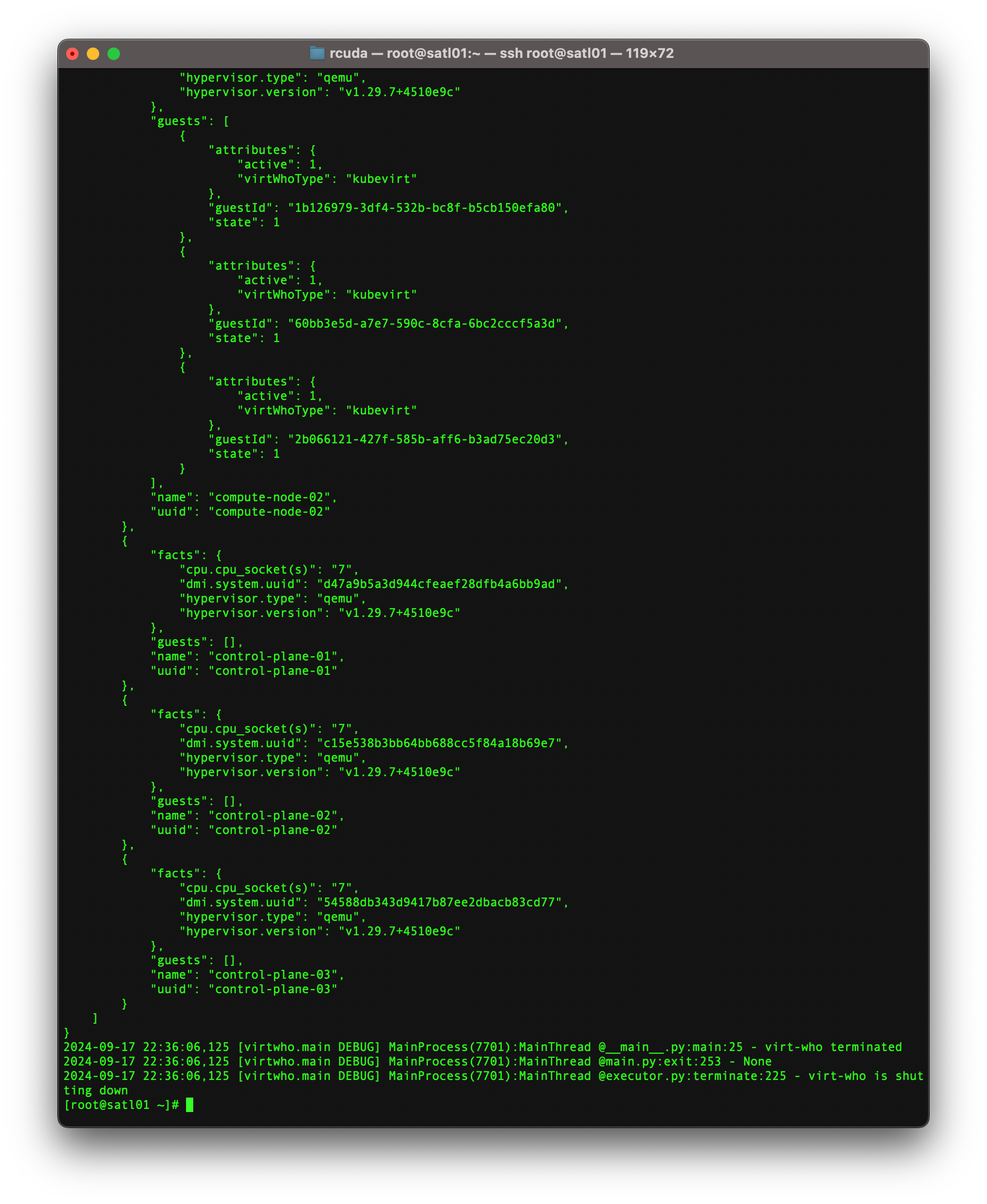

After it runs, you can check the output of virt-who --print and see if it is reporting your compute nodes, like this:

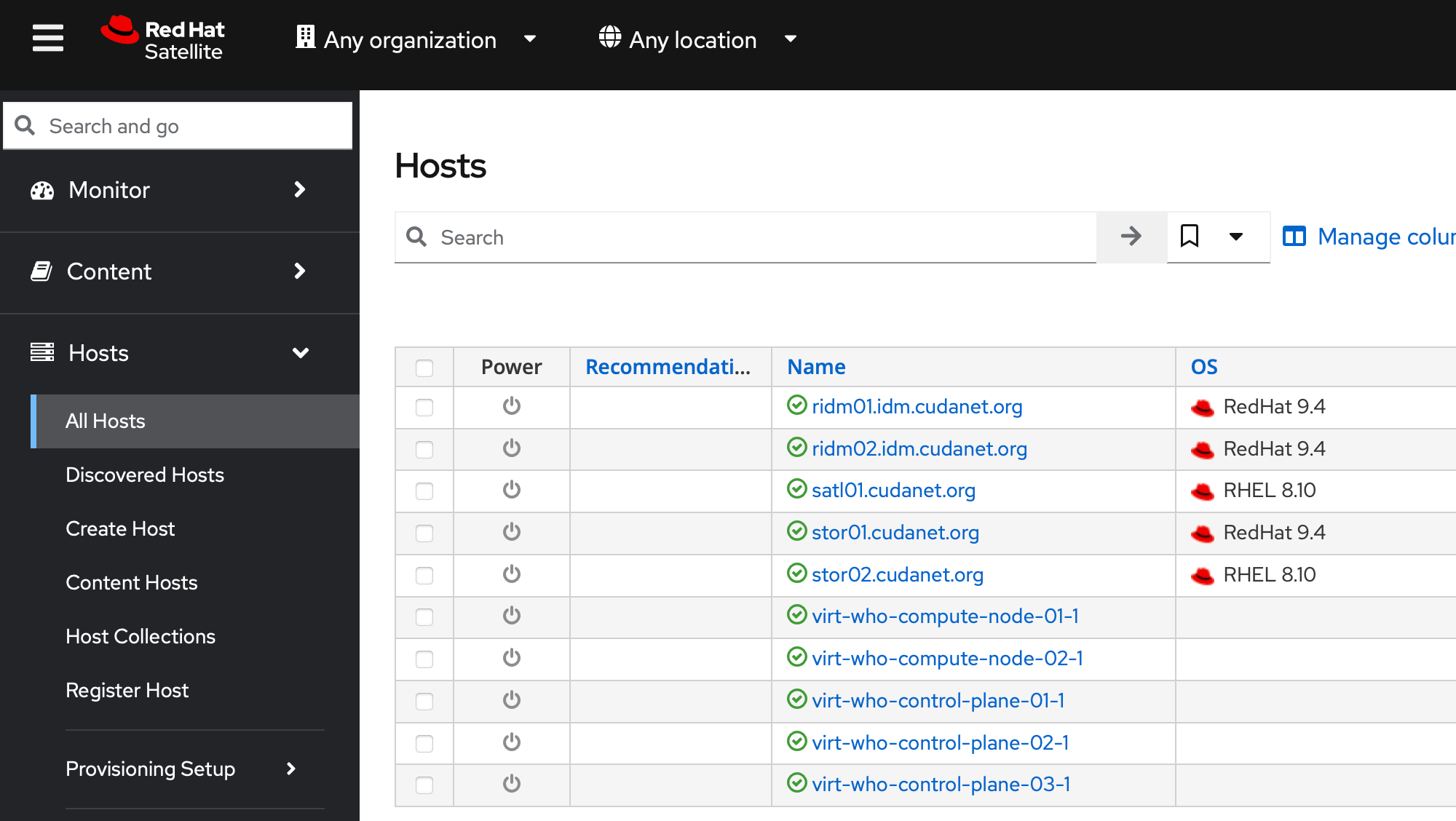
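One gotcha here: in my experience virt-who refuses to start a second copy while the systemd service is already running, so a manual check usually means stopping the service first. Here's a minimal sketch of that, assuming the service unit is named virt-who as deployed by the hammer command above. `print_virt_who_mapping` is a name I made up for illustration.

```shell
# print_virt_who_mapping: hypothetical helper that runs a single foreground report.
print_virt_who_mapping() {
  # Assumption: the running service would block a second virt-who process.
  systemctl stop virt-who
  # --one-shot exits after one pass; --print writes the host/guest mapping
  # to standard output instead of reporting it to Satellite.
  virt-who --one-shot --print
  status=$?
  # Bring the service back regardless of how the manual run went.
  systemctl start virt-who
  return "$status"
}
```

Run it as root on the Satellite server. If the mapping comes back empty, /var/log/rhsm/rhsm.log is the first place I'd look.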

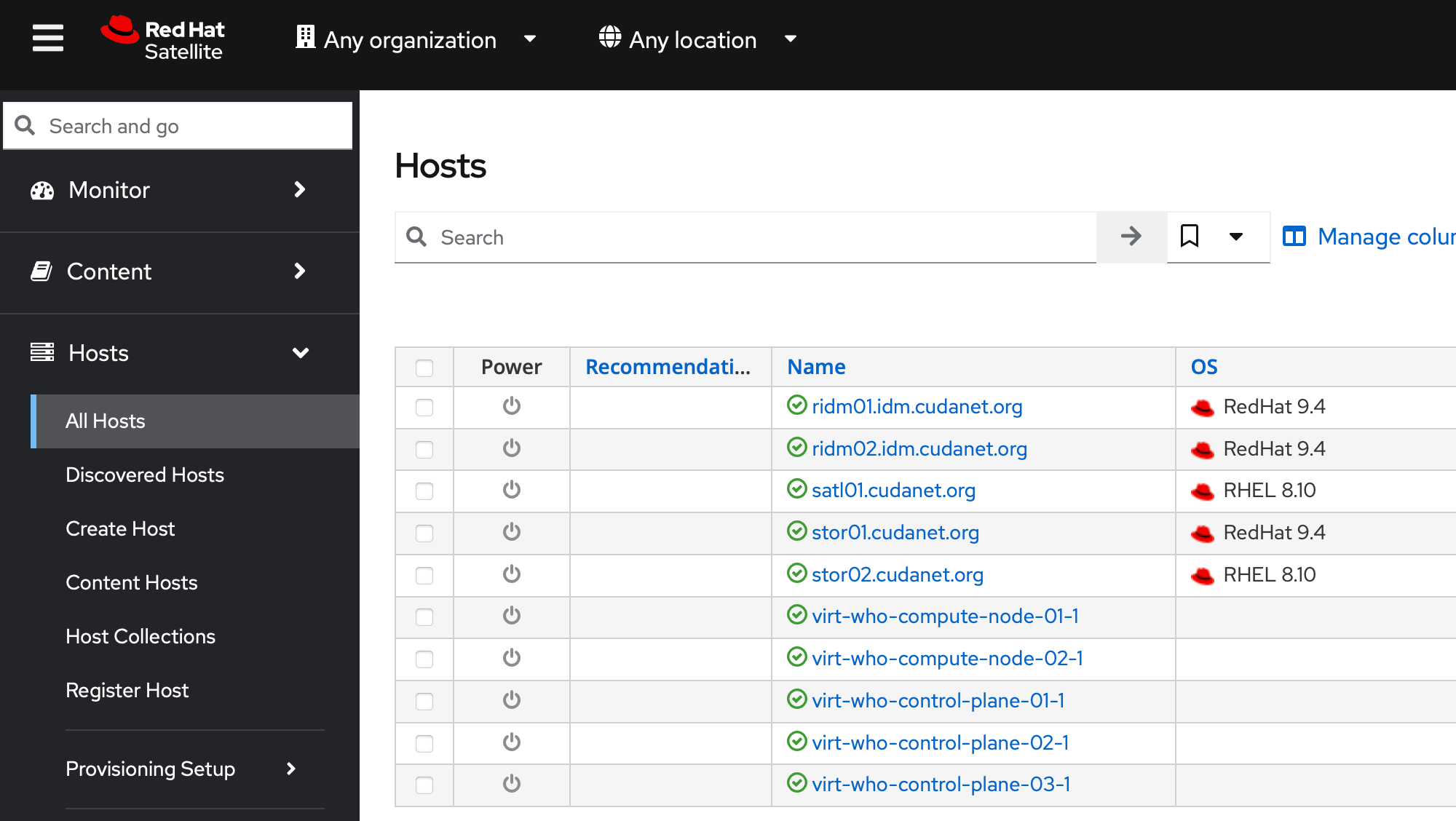

And now if you check the Hosts tab, you should be able to see your compute nodes populated as hypervisors in your inventory.

Now you should be able to subscribe RHEL VM guests on Openshift using an activation key from your Satellite server.
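For completeness, registering a guest from inside the VM looks roughly like this. This is a minimal sketch: the function name `register_guest`, the organization label and the activation key name are all placeholders I made up. It also assumes the guest already trusts your Satellite's CA (for example, by installing the consumer CA package that Satellite publishes).

```shell
# register_guest ORG KEY: hypothetical wrapper around subscription-manager.
# ORG is your Satellite organization label, KEY is the activation key name.
register_guest() {
  subscription-manager register --org="$1" --activationkey="$2"
}
```

For example, `register_guest MyOrg rhel-guests` run as root on the guest, where both values are stand-ins for your own.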