Openshift, The Hard Way

Here are some sample files to help you get started:

git clone https://gitea.cudanet.org/CUDANET/openshift-the-hard-way.git

Introduction:

Bare metal is a hard requirement for deploying Openshift Virtualization. Sure, you could technically enable nested virtualization on any generic OCP cluster, but performance ranges from mediocre to nigh-unusable in most cases and negates many of the benefits of leveraging Openshift as your hypervisor.

Deploying OpenShift on bare metal environments is a task that ranges from relatively straightforward to mind bogglingly complex, and never without some degree of trial and error.

These days, there are a lot of options that make bare metal Openshift deployments easier.

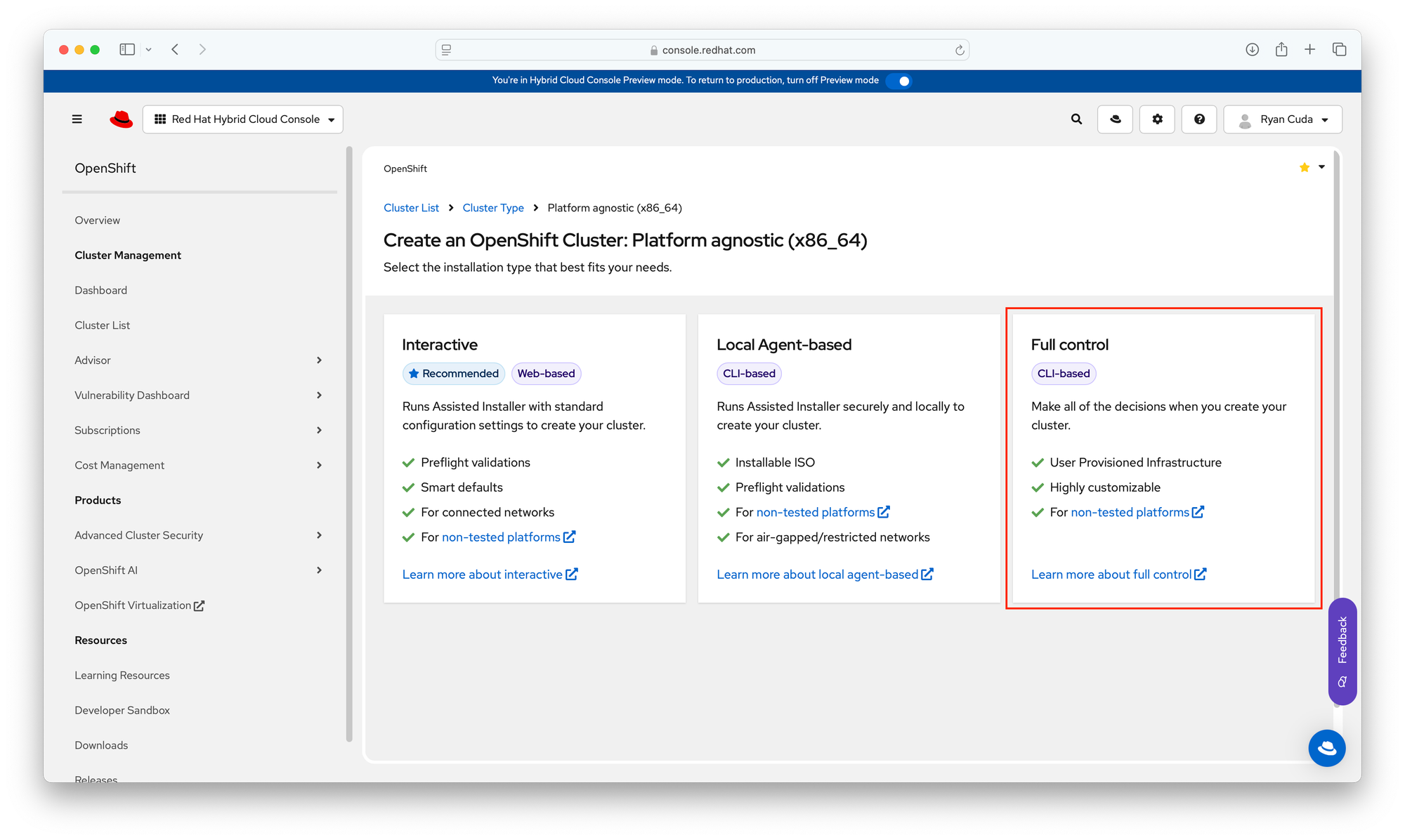

Ranging from easiest to hardest the options these days are:

- Assisted Installer

- Agent Based Installer

- Bare Metal IPI

- Bare Metal UPI

I could write a dozen articles about the differences between each deployment method and their pros, cons and target use cases. However, when all else fails and you have no other choice, UPI (user provisioned infrastructure) is the only deployment method guaranteed to work in any environment. Which is true in my case. My OCP nodes - a cluster of 6 Lenovo M710Q Tiny - although small and mighty, lack any kind of out-of-band management. They don't even have Intel vPro. I would have had to have sprung for the M910Q for that privilege. So in my case, UPI actually ended up being the path of least resistance to getting Openshift deployed, despite the laundry list of prerequisites.

When things are working as expected, a redeployment is as easy as generating new ignition configs, wiping boot disks on my nodes and rebooting them. A fresh cluster comes up ~30 minutes later. However, getting to that level of automation took a lot of work.

What You Need:

Before diving into the configuration steps, ensure you have the following prerequisites:

- Bare Metal Servers: The hardware for your OpenShift cluster nodes, which will be PXE booted. You'll also need a temporary bootstrap node which can be a VM.

- Openshift Boot Artifacts: These consist of the live root filesystem, live kernel, live initramfs and ignition files.

- PXE Server (TFTP and DHCP): A server that will serve the necessary boot images to your nodes. In my case I run all my network services on OPNsense and have MAC specific boot configs for each node.

- DNS & DHCP Configuration: Properly set up to ensure your nodes can discover the OpenShift API and machine-config service to install the required packages.

- Load Balancing: You can of course use whichever load balancer you wish, but HAProxy is the standard for Openshift.

- Red Hat OpenShift Installer: The installer binary to generate the necessary ignition configs and initial authentication to the OpenShift installation.

Step 1: Preparing the PXE Server

The first step is setting up a PXE server that will be responsible for serving the boot images to your bare metal servers. The PXE server needs to provide both DHCP and TFTP services to your CoreOS nodes. In my lab, all of the network services are provided by OPNSense.

1. Install Necessary Packages on the PXE Server:

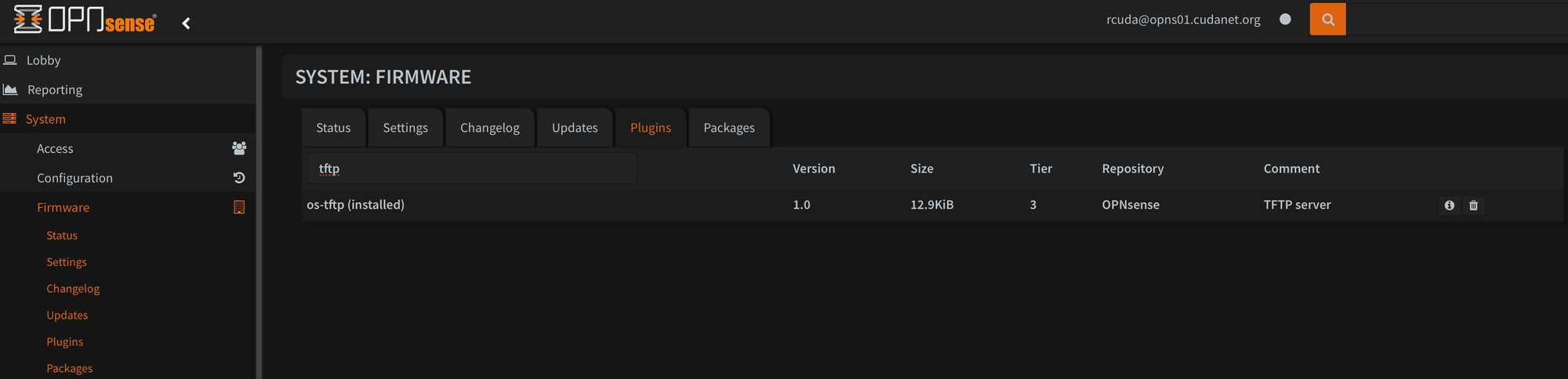

To set up the PXE boot service you need to install tftp. On OPNsense, navigate to System > Firmware > Plugins and install the os-tftp package

By default, tftp does not ship with any bootable artifacts or supporting libraries so you'll need to grab those from any Red Hat Linux distribution, eg; RHEL, CentOS or Fedora.

sudo dnf -y install syslinux-tftpboot

Then copy the files to OPNsense. You'll need to create the tftp root directory first. On your OPNsense server:

mkdir -p /usr/local/tftp

Then on your Linux server, copy the files over:

scp -r /tftpboot/* [email protected]:/usr/local/tftp/

Then specifically for UEFI, you'll also need to copy over your grubx64.efi file, which is not included in the tftp package. I migrated from BIOS to UEFI a while ago, not that it seems to have any effect on the cluster mind you.

scp /boot/efi/EFI/redhat/grubx64.efi [email protected]:/usr/local/tftp/BOOTX64.EFI

No idea if this file is actually case sensitive or not, but this is how I got it to work on my OPNsense server.

Where you place your boot artifacts that will be loaded via tftp is relative to the tftp root. Personally I put them in /usr/local/tftp/images/prod/. As long as the path you specify in your grub.cfg files is relative to the tftproot path (/usr/local/tftp/) you're good, which we'll get into a little bit later.

2. Configure DHCP for PXE Boot:

Configure the ISC DHCPv4 service to serve DHCP and TFTP services. You will need to adjust the DHCP configuration to match your network setup.

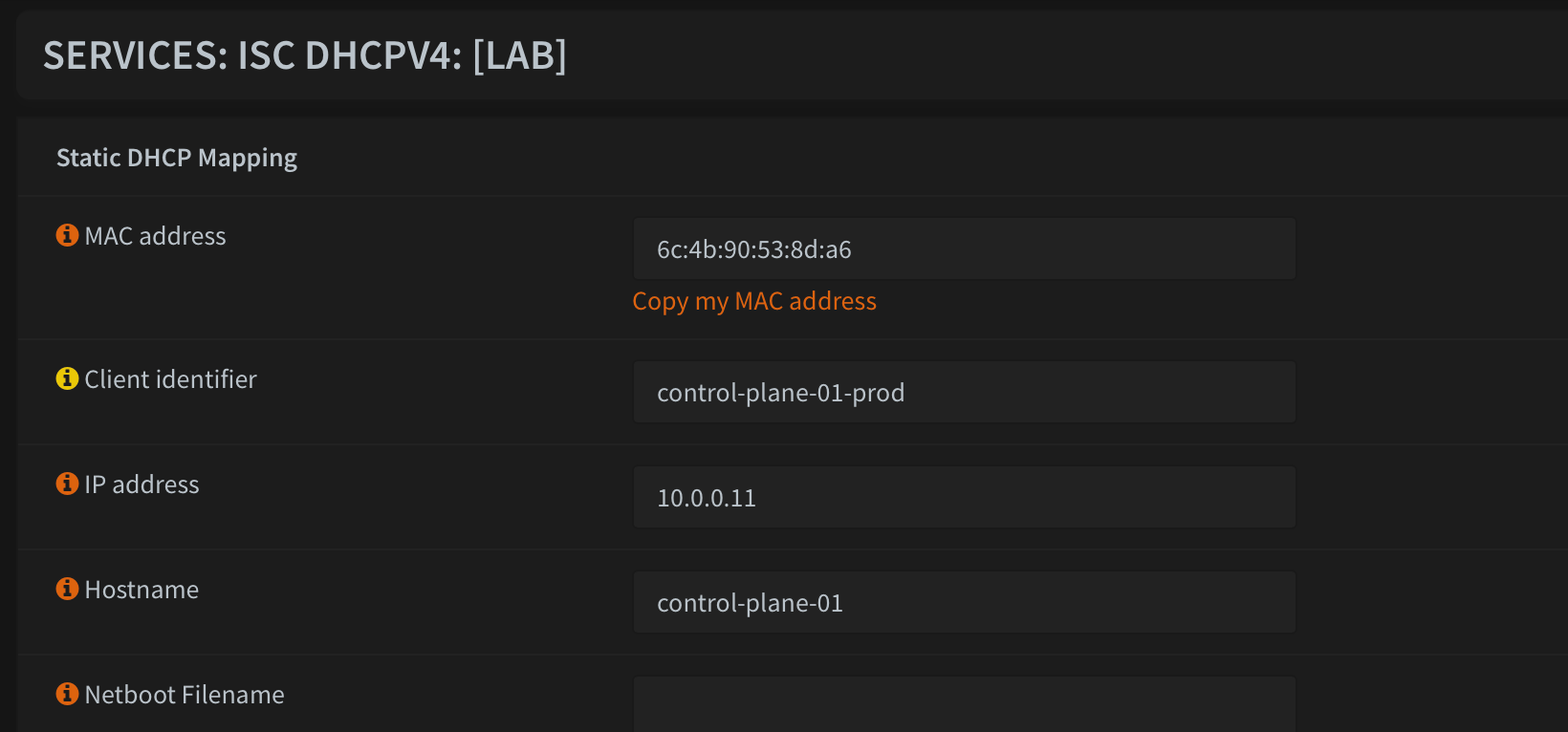

In my lab, each node has a MAC specific DHCP reservation. On OPNSense, there are a couple of methods to accomplish this, but I just use the default 'ISC DHCPv4'. It works fine and gets the job done.

Create a DHCP reservation for each of your nodes using their MAC address.

This will ensure that they get the correct IP address and can grab the right ignition config on boot. On OPNsense, UEFI clients can get a MAC specific boot config if there is a file named like

/usr/local/tftp/grub.cfg-01-6c-4b-90-a8-cf-3c

The naming convention is grub.cfg-01 followed by the MAC address of the DHCP client separated by dashes (not colons).
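As a rough sketch of that naming rule, the helper below turns a MAC address into the matching file name. `grub_cfg_name` is a name I made up for illustration, not something GRUB or OPNsense ships, and the lowercasing simply mirrors the file names used in this article.

```shell
# Hypothetical helper: build the per-host GRUB config name from a MAC address.
# The MAC is lowercased and its colons are swapped for dashes, then prefixed
# with "grub.cfg-01-" as described above.
grub_cfg_name() {
    mac=$(printf '%s' "$1" | tr 'A-Z' 'a-z' | tr ':' '-')
    printf 'grub.cfg-01-%s\n' "$mac"
}

# Example: print the file name for one node.
grub_cfg_name 6C:4B:90:A8:CF:3C
```

From the tftp root you could then link a node to its profile with something like `ln -s control-plane "$(grub_cfg_name 6C:4B:90:A8:CF:3C)"`.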

Here is an example grub.cfg for a UEFI-booted RHCOS node. Typically, what I do is symlink the specific node configs to the respective profile, eg; bootstrap, control-plane or compute-node. Just make sure you set the ignition file path to the correct version for the node, and make sure the root disk is set to the correct name. For example, on my bare metal Openshift nodes, that would be /dev/nvme0n1. However, my bootstrap node which is a VM gets /dev/vda as the root device.

Worth noting; TFTP will provide some of the smaller boot artifacts, however the larger files like the live root file system and ignition config must be served by another method. You can use http, ftp or nfs. I have found that using HTTPS is by far the easiest, but you will need to have proper SSL certs in place or else you'll run into issues. Thankfully, OPNsense has an ACME plugin which will generate and auto-rotate your router's SSL certificate for you.

set default="1"

function load_video {

insmod efi_gop

insmod efi_uga

insmod video_bochs

insmod video_cirrus

insmod all_video

}

load_video

set gfxpayload=keep

insmod gzio

insmod part_gpt

insmod ext2

set timeout=5

### END /etc/grub.d/00_header ###

### BEGIN /etc/grub.d/10_linux ###

menuentry 'Install OCP 4.18.1 Bootstrap Node' --class fedora --class gnu-linux --class gnu --class os {

linux images/prod/rhcos-418.94.202501221327-0-live-kernel-x86_64 coreos.inst.install_dev=/dev/vda coreos.live.rootfs_url=https://opns01.cudanet.org/pxeboot/prod/rhcos-418.94.202501221327-0-live-rootfs.x86_64.img coreos.inst.ignition_url=https://opns01.cudanet.org/pxeboot/prod/bootstrap.ign ignition.firstboot ignition.platform.id=metal

initrd images/prod/rhcos-418.94.202501221327-0-live-initramfs.x86_64.img

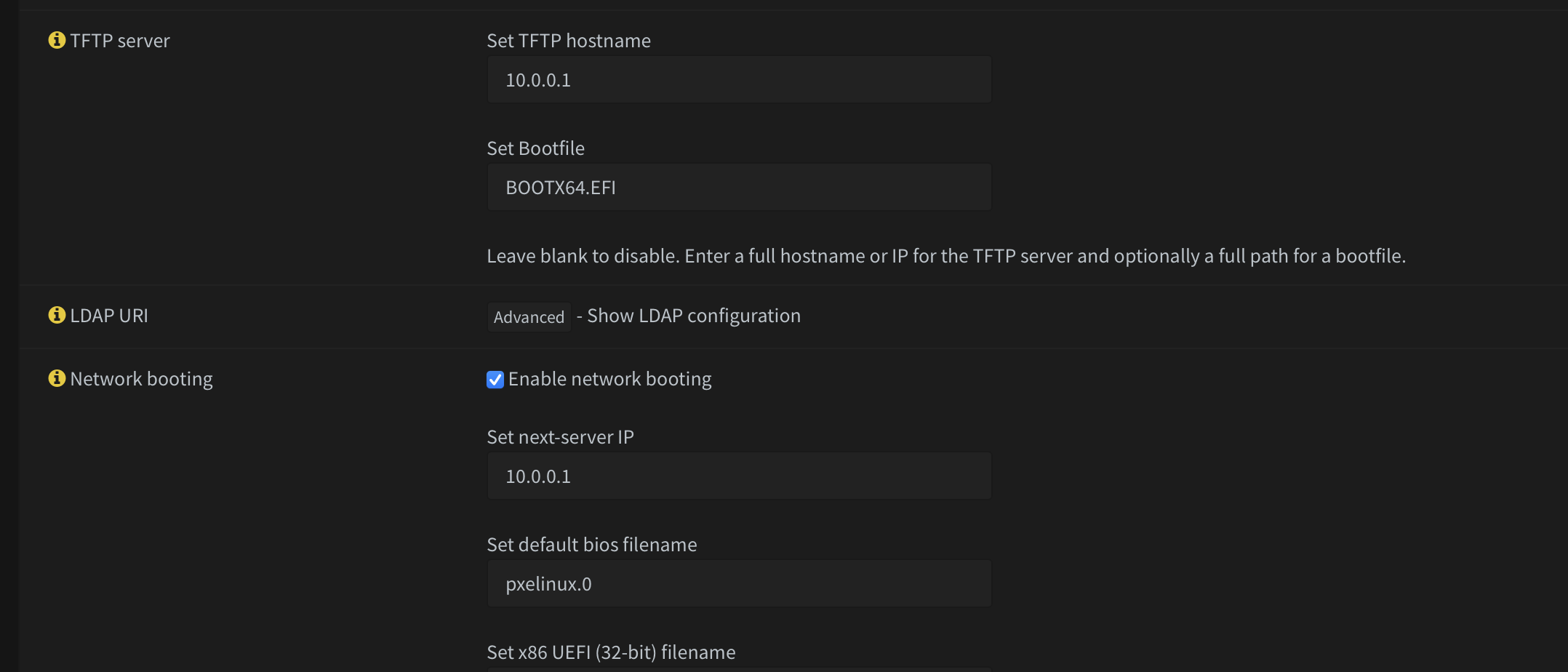

}In order to configure TFTP to serve the boot files needed to bootstrap your OCP cluster, you'll need to set the following configs:

You must set the TFTP hostname (or IP address). I use IP address just in case the DNS service is down. To boot a UEFI host, the file name must be set to BOOTX64.EFI.

Next server should be set to your TFTP server, in my case this is the OPNSense router. You must also check enable network booting.

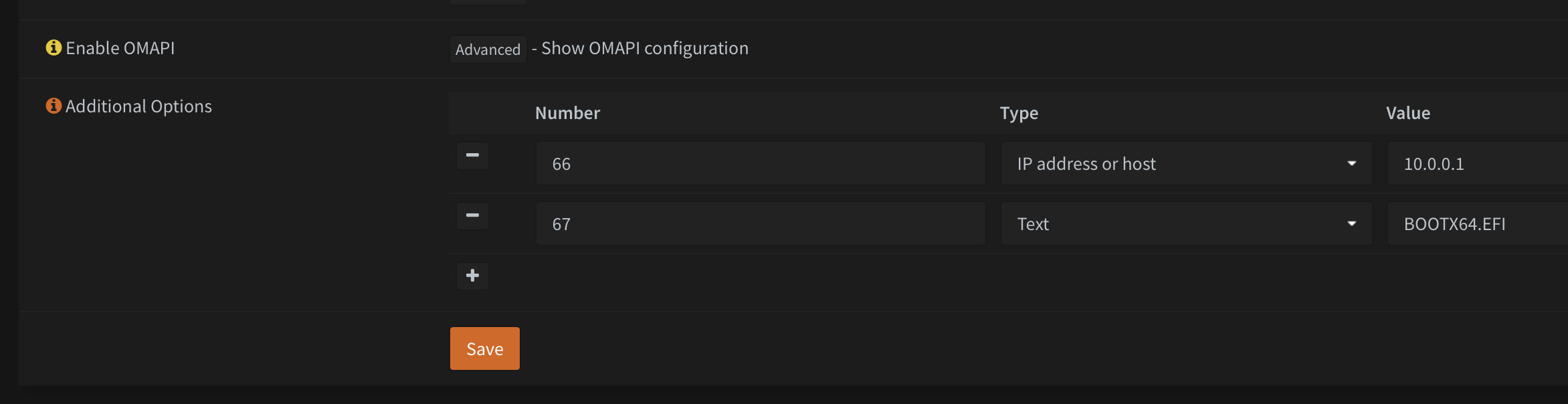

Some hardware may also require you to set DHCP options explicitly. It's kind of hit or miss, so you'll need to figure out what your specific hardware requires. The pertinent DHCP options are 66 (TFTP server name) and 67 (boot file name). If all else fails, setting both should work in most cases.

3. Configure DNS:

It's always DNS. Especially when it comes to deploying Openshift. In order to bootstrap an Openshift cluster, you will need to have all the proper DNS entries for each of your nodes. You'll also need a wildcard DNS entry for your ingress, an A record for your Kubernetes API and another one for the machine config service. In the past, SRV records were also required for etcd, but thankfully this requirement hasn't been needed since at least Openshift 4.14.

Personally, I use dnsmasq on OPNSense. I previously used unbound (the default) which works fine, however due to the extensive caching that unbound does to improve DNS performance, I ran into an issue with the Certificate Manager Operator on Openshift where DNS-01 challenges would fail, and HTTP-01 challenges were not an option because my wonderful ISP (Cox) blocks port 80. Switching to dnsmasq with caching disabled fixed that issue for me. The other option would be to configure Certificate Manager to override the default DNS and use something like Google's DNS.

You will need to create all of the necessary DNS records before attempting to deploy your Openshift cluster. Before you begin, I'd highly recommend deciding on a naming convention for your cluster and name your nodes and VIPs accordingly. For example, my cluster uses prod.ocp.cudanet.org. Worth noting - on OPNsense, neither unbound nor dnsmasq support creating wildcard records through the UI, so you'll need to create an advanced configuration in either case.

Unbound wildcard example:

# /usr/local/etc/unbound.opnsense.d/openshift.conf

server:

local-zone: "apps.prod.ocp.cudanet.org" redirect

local-data: "apps.prod.ocp.cudanet.org 86400 IN A 10.0.0.10"

DNSMasq wildcard example:

# /usr/local/etc/dnsmasq.conf.d/openshift.conf

address=/apps.prod.ocp.cudanet.org/10.0.0.10

In these examples, 10.0.0.10 is the VIP for my Ingress. When doing a UPI install you can actually use the same VIP for all three cluster services: ingress, kubernetes API and machine config service. However there are reasons why you may want these separated. By default on an IPI or Assisted Install cluster, the kubernetes API and machine config service run on a separate VIP from the ingress.

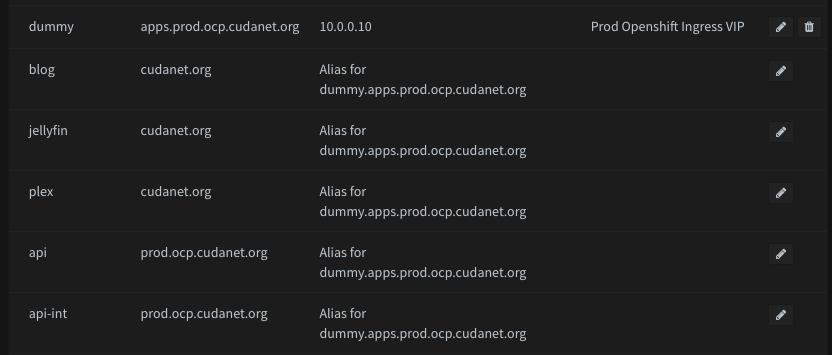

Once you've created your wildcard config, proceed to create the rest of the DNS entries through the web UI. To make things easier, I create a dummy A record pointing to my ingress, then create CNAME records (aliases) pointing to it for the services running on my cluster. That gives each service a "pretty name", so I can hit blog.cudanet.org instead of having to use the esoteric route name, eg; ghost-blog.apps.prod.ocp.cudanet.org

Also, because I only use a single virtual IP for my cluster, I create CNAMEs for api.prod.ocp.cudanet.org and api-int.prod.ocp.cudanet.org that point to the ingress VIP.

For the sake of completeness, here's a list of all DNS records required to bootstrap my cluster (your entries will vary based on domain names, IPs and number of nodes)

*.apps.prod.ocp.cudanet.org 10.0.0.10 - A Record

api.prod.ocp.cudanet.org 10.0.0.10 - CNAME

api-int.prod.ocp.cudanet.org 10.0.0.10 - CNAME

control-plane-01.prod.ocp.cudanet.org 10.0.0.11 - A Record

control-plane-02.prod.ocp.cudanet.org 10.0.0.12 - A Record

control-plane-03.prod.ocp.cudanet.org 10.0.0.13 - A Record

compute-node-01.prod.ocp.cudanet.org 10.0.0.14 - A Record

compute-node-02.prod.ocp.cudanet.org 10.0.0.15 - A Record

compute-node-03.prod.ocp.cudanet.org 10.0.0.16 - A Record

bootstrap.prod.ocp.cudanet.org 10.0.0.17 - A Record

I'd recommend doing a few digs to make sure all the appropriate entries have been created, but that should cover it for DNS.
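Checking the records one by one gets tedious, so here is a minimal sketch of a checker, assuming `dig` is installed on your workstation. `check_record` is a hypothetical helper name, and the hostnames and IPs in the usage lines are just the ones from my list.

```shell
# Hypothetical helper: compare the last line of `dig +short` (the final
# address after any CNAME hops) with the IP we expect.
check_record() {
    name="$1"; want="$2"
    got=$(dig +short "$name" A | tail -n 1)
    if [ "$got" = "$want" ]; then
        echo "OK   $name -> $got"
    else
        echo "FAIL $name -> ${got:-no answer} (expected $want)"
        return 1
    fi
}

# Usage (commented out so nothing queries DNS until you ask it to):
# check_record api.prod.ocp.cudanet.org 10.0.0.10
# check_record api-int.prod.ocp.cudanet.org 10.0.0.10
# check_record test.apps.prod.ocp.cudanet.org 10.0.0.10   # any name exercises the wildcard
# check_record control-plane-01.prod.ocp.cudanet.org 10.0.0.11
```

Run it against the resolver your nodes will actually use; a record that only resolves from your laptop's cached view won't help the cluster.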

Step 2: Configuring a Load Balancer

When it's not DNS, if you are running into failures bootstrapping your cluster the next place to look would be your load balancer, as this is one of the more complex pieces to configure and it's easy to make simple mistakes. As I previously mentioned, you can use any load balancer you want but HAProxy is considered standard for Openshift, so that is what I use.

An HAProxy config consists of two main things: a Frontend and a Backend. A Frontend is a service endpoint tied to a VIP, eg; the kubernetes API. A Backend is a pool of endpoints running the service to be load balanced for performance and high availability, eg; your control plane nodes.

1. Create a Virtual IP (VIP):

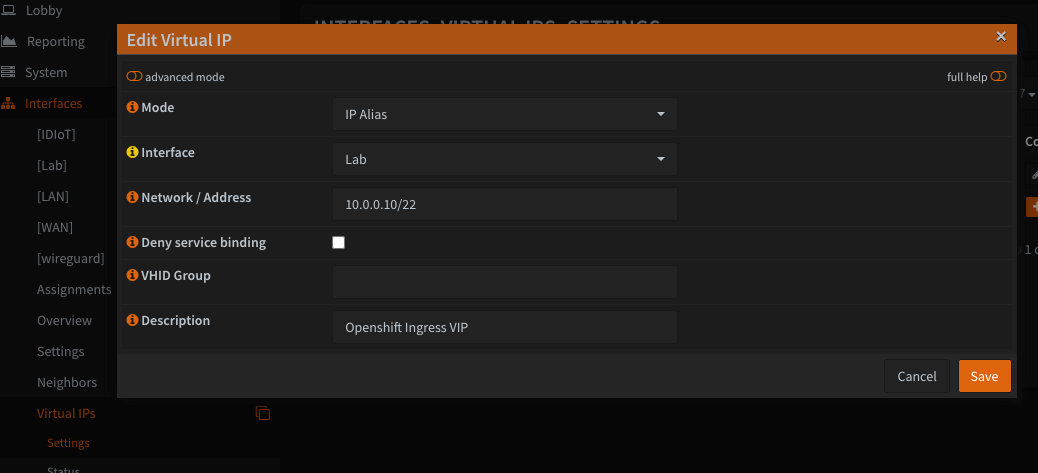

In the OPNSense Web UI, navigate to Interfaces > Virtual IPs > Settings and create a new IP Alias type VIP. Make sure to select the appropriate VLAN (Interface) for where your Openshift cluster will reside, in my case my Lab VLAN. Then click save.

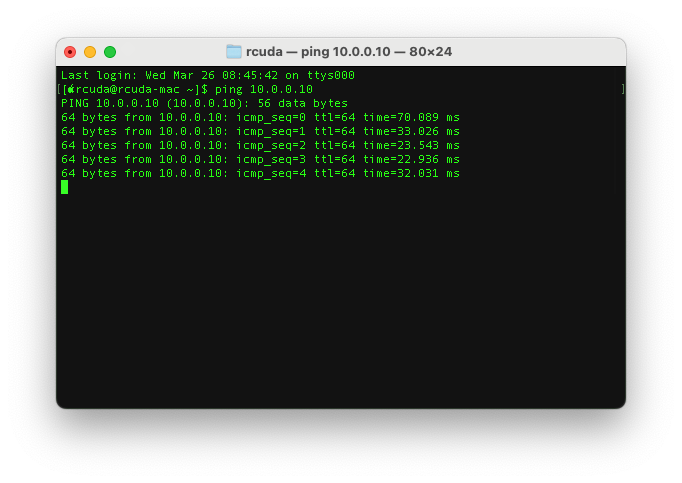

Make sure to do a ping check to ensure the VIP is up.

2. Install and Configure HAProxy:

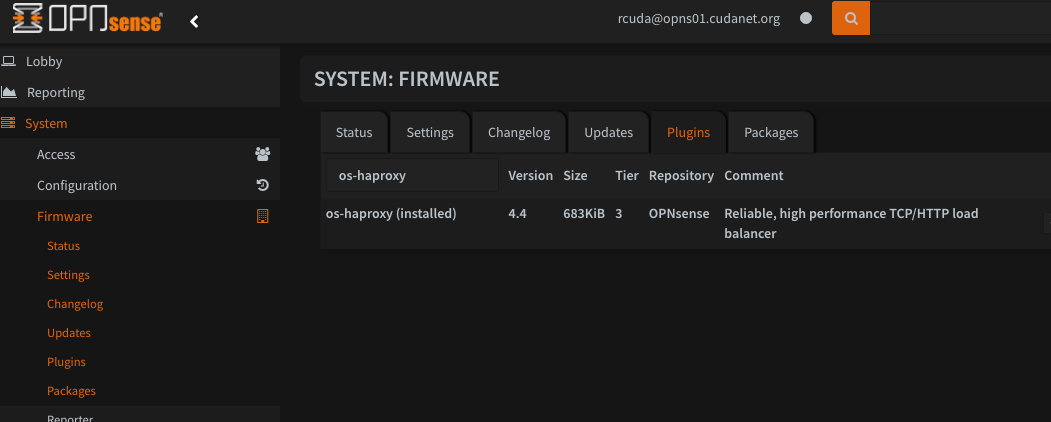

OPNSense does not come with a load balancer by default, but you can easily install haproxy as a package. Navigate to System > Firmware > Plugins and search for os-haproxy to install the package.

Once installed, navigate to Services > HAProxy. This next part is probably the most tedious part about setting up your UPI cluster. This will consist of configuring 4 things:

- Real Servers - these are the node service endpoints

- Backend Pools - these are groups of node service endpoints grouped by TCP service

- Public Services - these will be VIP endpoints that load balance a given service across a backend pool

- Health Monitors - these are health checks performed on node service endpoints to determine liveness

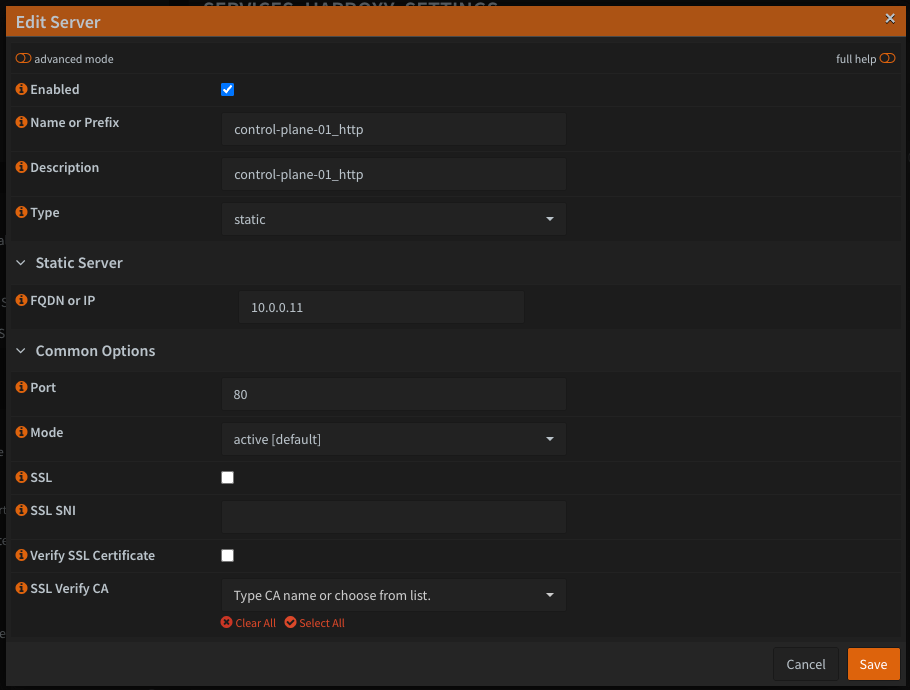

Let's start with the Real Servers. For whatever reason, the HAProxy plugin on OPNSense requires that you populate out all real servers before you can create backend pools. There are a couple of things you'll need to remember when creating these depending on your cluster's configuration. Control Plane nodes typically will run the kubernetes API and machine config services (TCP ports 6443 and 22623 respectively). If you are running schedulable control planes (I do) you will also need to configure real servers for HTTP/S (TCP ports 80 and 443) on your control plane nodes as well. The compute nodes only need TCP ports 80 and 443. Your Bootstrap node will only need the kubernetes API and Machine Config service. Just take your time, be thorough and make sure you don't miss anything. Here's my list of real servers

# kubernetes API

control-plane-01_api TCP/6443

control-plane-02_api TCP/6443

control-plane-03_api TCP/6443

bootstrap_api TCP/6443

# machine config

control-plane-01_api-int TCP/22623

control-plane-02_api-int TCP/22623

control-plane-03_api-int TCP/22623

bootstrap_api-int TCP/22623

# http

control-plane-01_http TCP/80

control-plane-02_http TCP/80

control-plane-03_http TCP/80

compute-node-01_http TCP/80

compute-node-02_http TCP/80

compute-node-03_http TCP/80

# https

control-plane-01_https TCP/443

control-plane-02_https TCP/443

control-plane-03_https TCP/443

compute-node-01_https TCP/443

compute-node-02_https TCP/443

compute-node-03_https TCP/443

For what it's worth, when configuring my real servers, I used their IP addresses so that load balancing should theoretically continue working even if the DNS service is down.
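If you'd rather not build that list by hand, the rules above are easy to turn into a checklist. This is only a sketch that prints names and ports; it does not touch HAProxy or OPNsense, and the node names are mine, so swap in your own.

```shell
# Sketch: print one "name TCP/port" line per real server, following the
# rules described above.
CONTROL_PLANE="control-plane-01 control-plane-02 control-plane-03"
COMPUTE="compute-node-01 compute-node-02 compute-node-03"

real_servers() {
    # API and machine config: control plane plus the temporary bootstrap node.
    for n in $CONTROL_PLANE bootstrap; do echo "${n}_api TCP/6443"; done
    for n in $CONTROL_PLANE bootstrap; do echo "${n}_api-int TCP/22623"; done
    # Ingress: compute nodes, plus control plane because mine is schedulable.
    for n in $CONTROL_PLANE $COMPUTE; do echo "${n}_http TCP/80"; done
    for n in $CONTROL_PLANE $COMPUTE; do echo "${n}_https TCP/443"; done
}

real_servers
```

If your control plane is not schedulable, drop `$CONTROL_PLANE` from the two ingress loops.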

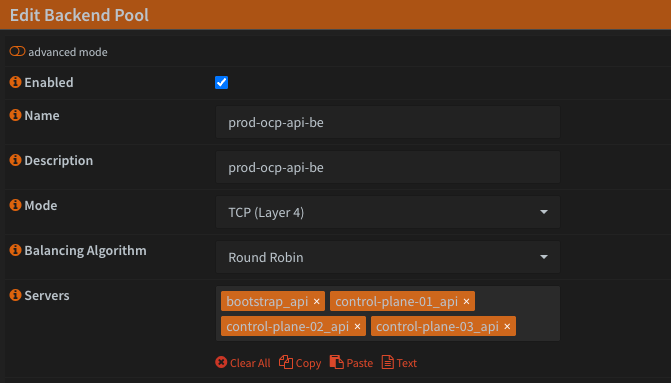

Now let's tackle the Backend Pools. This part is pretty straightforward. You'll need to create 4 backend pools; api, api-int, http and https. It will probably help to use a common naming convention, eg; prod-ocp-api-be.

Configure your backends as Layer 4 using Round Robin for the load balancing algorithm. Then just select your real servers from the pre-populated list.

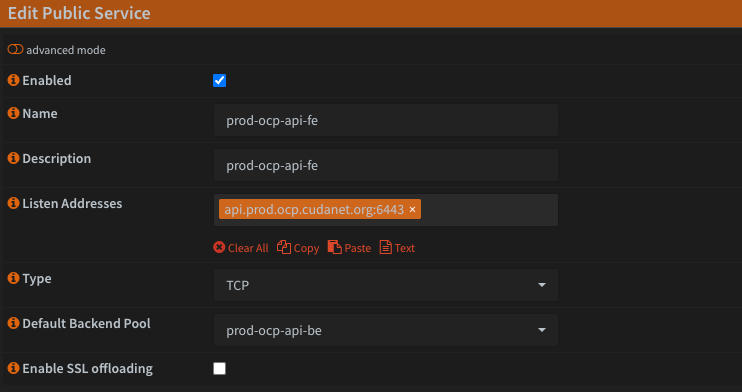

Then we can create our public services (frontends). You can use either FQDN or IP address with the port specified, eg; api.prod.ocp.cudanet.org:6443

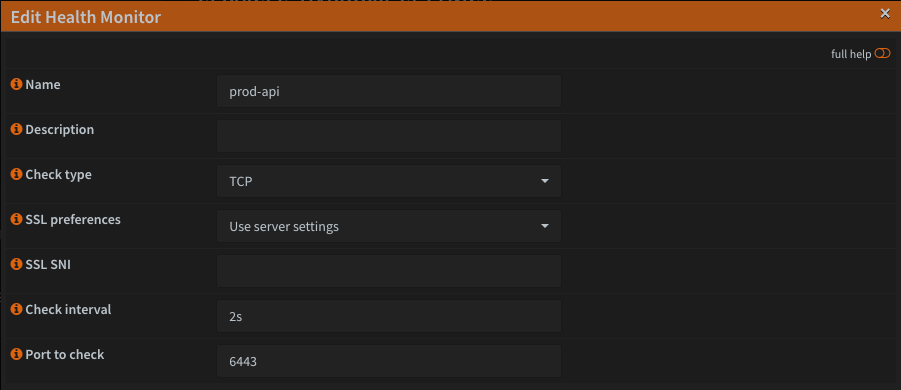

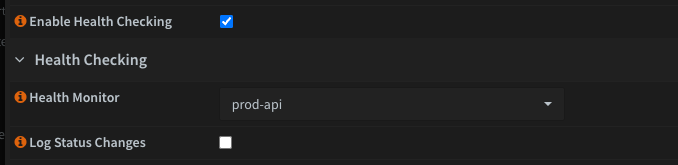

Next we'll create the health checks for each of the services. Set the check type to TCP and set the appropriate port for the service (6443, 22623, 80 and 443).

Then go back to your backend pools and set the appropriate health monitor for the pool

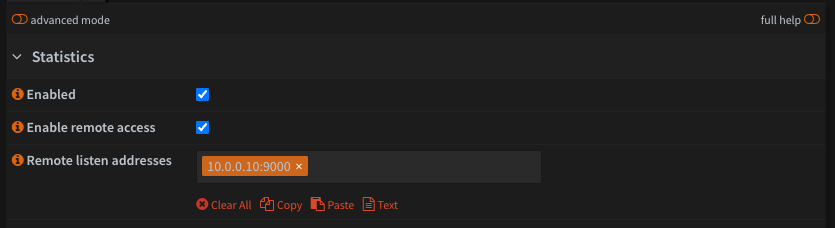

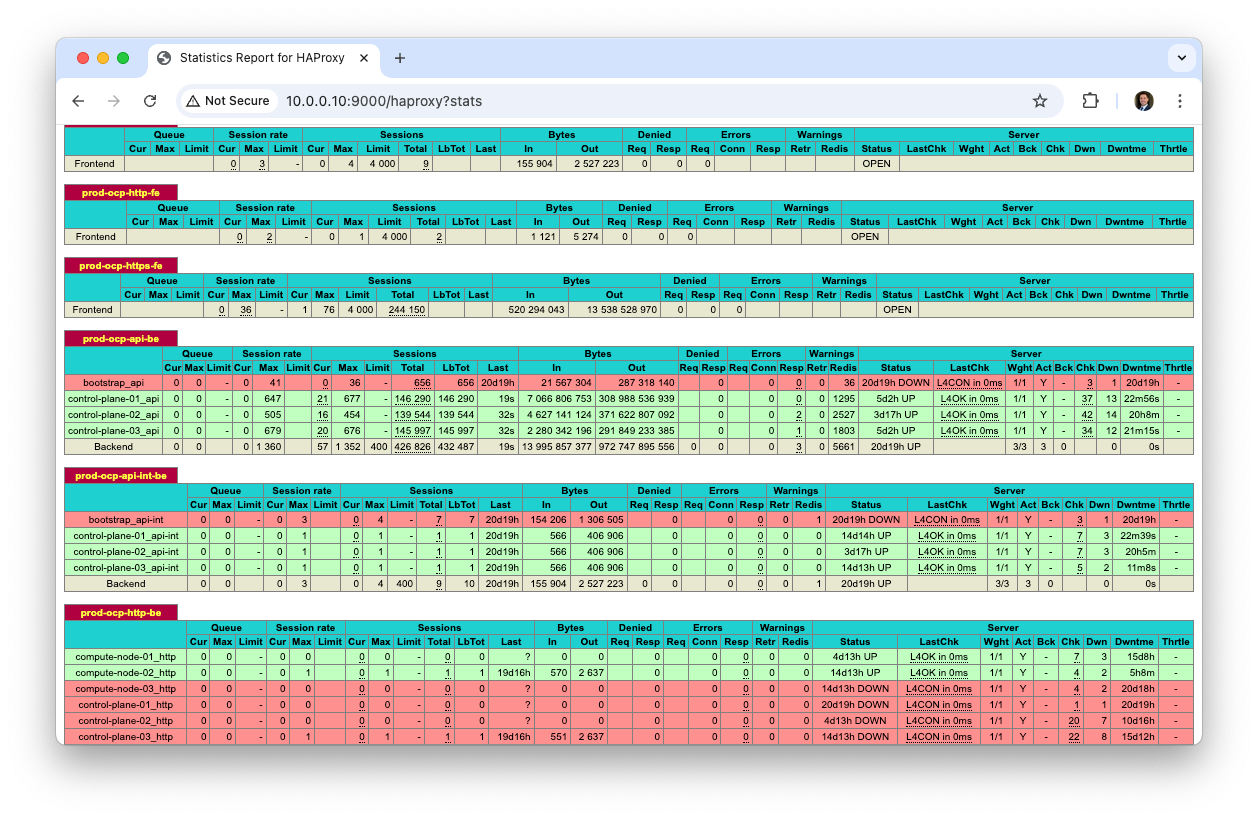

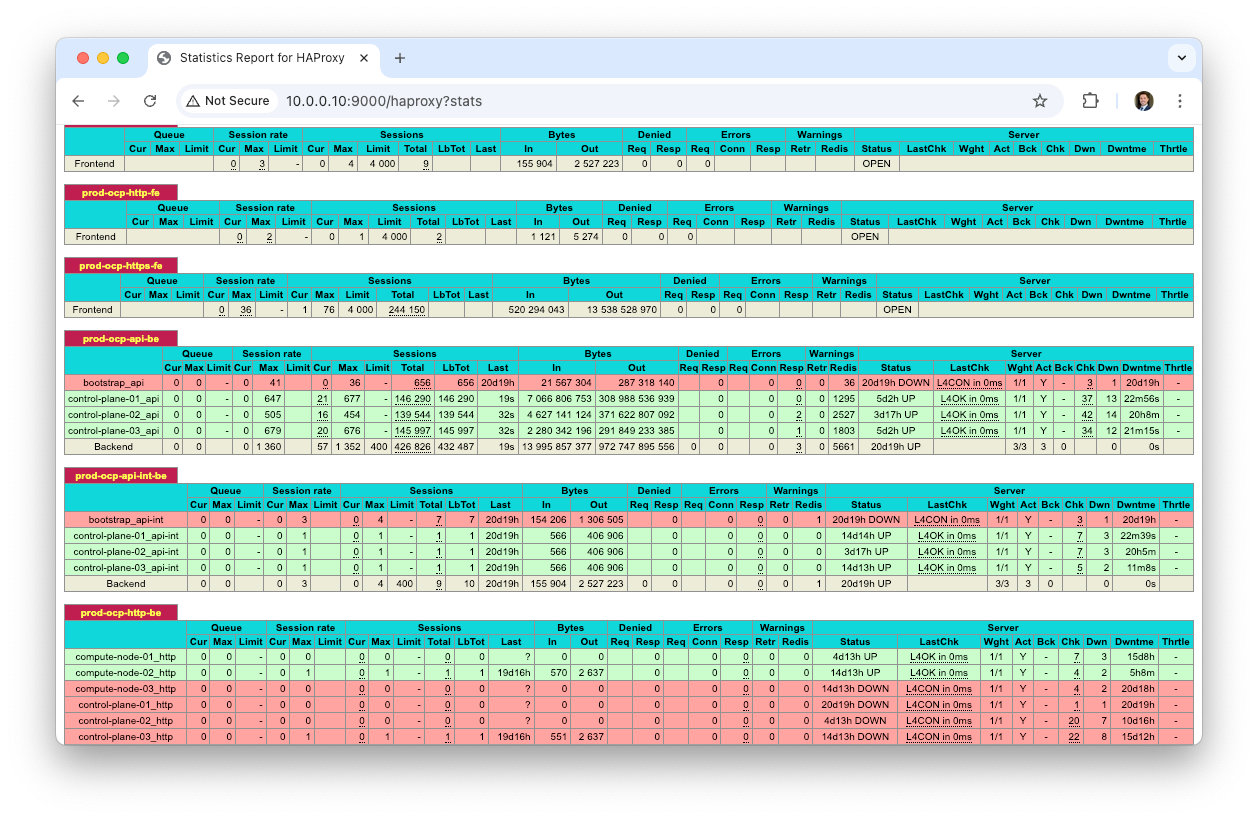

Once you're done with all of that, double check your work. Not required at all, but I'd highly recommend configuring the HAProxy stats page. It makes it a lot easier to see if your load balancer is configured correctly and that services are up. Navigate to Settings > Statistics and enable the service. You can set an arbitrary IP and port, but I set mine to my ingress VIP and port 9000.

Then you can view the HAProxy stats page at eg; http://10.0.0.10:9000/haproxy?stats
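The same stats endpoint can be read from a script. HAProxy returns the stats as CSV when you append `;csv` to the stats URL, and the filter below is a rough sketch that lists every server not reported as UP. `not_up` is a hypothetical helper name, and the URL in the usage line assumes the stats page is set up as described above.

```shell
# Sketch: list backend servers that HAProxy does not report as UP.
# Reads the stats CSV on stdin; in that format field 1 is the proxy name,
# field 2 the server name and field 18 the status.
not_up() {
    awk -F, '
        /^#/ { next }                                   # header row
        $2 == "FRONTEND" || $2 == "BACKEND" { next }    # aggregate rows
        $18 !~ /^UP/ { print $1 "/" $2 " " $18 }
    '
}

# Usage:
# curl -s 'http://10.0.0.10:9000/haproxy?stats;csv' | not_up
```

Servers without a health monitor show up here too (their status reads "no check"), which is a handy reminder to go back and attach one.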

Step 3: Generate RHCOS Boot Artifacts

This will be the last major step before we're ready to actually deploy your Openshift cluster. This consists of two steps - downloading the RHCOS PXE boot artifacts and generating your cluster specific ignition configs.

1. Download Red Hat CoreOS (RHCOS):

You can always grab the latest version of the oc and openshift-install binaries from console.redhat.com. If you need to download a specific version of Openshift, you can grab it from https://mirror.openshift.com/pub/openshift-v4/clients/ocp/

In order to download the appropriate boot artifacts for your architecture (x86_64) you can run a command like this:

# Pull the x86_64 live PXE artifact URLs (kernel, initramfs, rootfs) out of the stream metadata
RHCOS=$(openshift-install coreos print-stream-json | grep x86_64 | grep live | grep -v iso | awk -F " " '{print $NF}' | cut -d '"' -f 2)

for r in $RHCOS; do
    wget "$r"
done

This will download the kernel, initramfs and rootfs. The kernel and initramfs images get served via TFTP and should go into your PXE server's TFTP root directory, eg; on OPNsense I place these in /usr/local/tftp/images/prod/. The live rootfs and ignition files (which we'll generate in the next step) need to be served via HTTPS. I drop them into /usr/local/www/pxeboot/prod/ which makes them available at eg;

https://opns01.cudanet.org/pxeboot/prod/rhcos-418.94.202501221327-0-live-rootfs.x86_64.img
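Since it's easy to drop a file into the wrong directory, here is a small sketch that encodes the split described above. `artifact_dest` is a hypothetical helper and the two directories are the ones I use on OPNsense; adjust them to your layout.

```shell
# Sketch: decide where a downloaded file belongs on the PXE server.
artifact_dest() {
    case "$1" in
        *live-kernel*|*live-initramfs*) echo /usr/local/tftp/images/prod/ ;;   # served via TFTP
        *live-rootfs*|*.ign)            echo /usr/local/www/pxeboot/prod/ ;;   # served via HTTPS
        *) echo "unknown artifact: $1" >&2; return 1 ;;
    esac
}

# Usage (root@opns01 is my router):
# for f in rhcos-*-live-*; do scp "$f" "root@opns01:$(artifact_dest "$f")"; done
```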

2. Generate Ignition Configs:

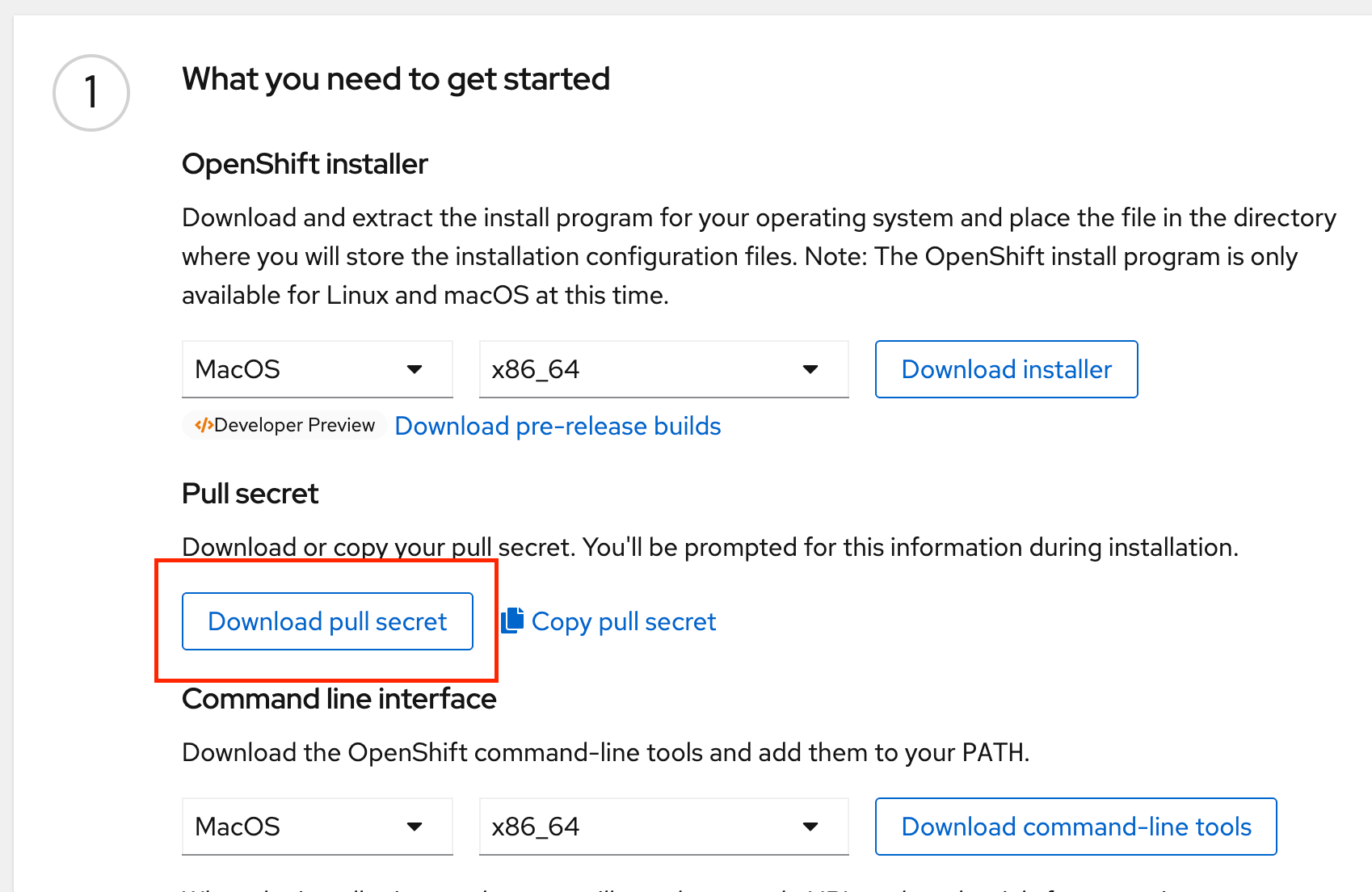

In order to generate your ignition configs, you'll need two things: the openshift-install binary, which you should have downloaded earlier, and an install-config.yaml. To write the install-config.yaml, you're also going to need your Openshift pull secret. Optionally (but highly recommended), add your workstation's public SSH key so that you can access your nodes.

In order to download your pull secret, navigate to https://console.redhat.com/openshift/install/platform-agnostic/user-provisioned

This is also where you'd go to download the latest versions of the oc and openshift-install binaries.

If you want to be able to ssh into your nodes, you'll have to add a public SSH key. If you haven't done so already, you can generate one with:

ssh-keygen -t rsa

Then create your install-config.yaml using this as a template.

apiVersion: v1
baseDomain: <your-domain-name>
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: <cluster-name>
networking:
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OVNKubernetes
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
fips: false
pullSecret: '<your-pull-secret>'
sshKey: '<your-ssh-public-key>'

In my case, <your-domain-name> is 'ocp.cudanet.org', <cluster-name> is 'prod'. Add your pull secret and public ssh key (~/.ssh/id_rsa.pub). Save a copy of your install-config.yaml somewhere safe because (for whatever reason) as soon as you run the next command it gets deleted.

From the same directory as your install-config.yaml, run the command openshift-install create ignition-configs. It will create several files for you; master.ign, worker.ign, bootstrap.ign, and the auth folder will contain your initial kubeconfig and kubeadmin password in order to access your cluster once it's up. At this point you'll need to copy your ignition files over to your PXE server, eg;

scp -r ./*.ign root@opns01:/usr/local/www/pxeboot/prod/
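Because the installer eats install-config.yaml, I find it easiest to keep the original somewhere safe and only ever run the installer against a copy. A minimal sketch of that habit follows; `gen_ignition` and the `INSTALLER` variable are hypothetical names of mine, while `--dir` is the installer's own flag for choosing the working directory.

```shell
# Hypothetical helper: keep install-config.yaml safe and generate ignition
# configs in a throwaway directory. INSTALLER is overridable for dry runs.
INSTALLER="${INSTALLER:-openshift-install}"

gen_ignition() {
    src="$1"        # path to your saved install-config.yaml
    workdir="$2"    # fresh directory for this deployment attempt
    mkdir -p "$workdir" || return 1
    # Work on a copy: the installer consumes the install-config.yaml in --dir.
    cp "$src" "$workdir/install-config.yaml" || return 1
    "$INSTALLER" create ignition-configs --dir "$workdir"
}

# Usage:
# gen_ignition ~/ocp/install-config.yaml ~/ocp/deploy-01
# scp ~/ocp/deploy-01/*.ign root@opns01:/usr/local/www/pxeboot/prod/
```

The auth folder with your kubeconfig and kubeadmin password ends up inside the working directory, so don't delete it once the cluster is up.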

Step 4: Configure PXE Boot (cont'd)

I bet you thought we were done configuring PXE booting, huh? Not even close!

Let's recap what all we've accomplished so far.

- created DHCP reservations for our nodes

- created all the necessary DNS entries for our cluster

- configured a PXE boot server

- configured a load balancer

- uploaded the RHCOS boot artifacts to the PXE server

Now we need to generate MAC specific boot configs to serve each of our nodes. What I like to do is to create the three necessary boot configs (control plane, compute node and bootstrap) and then create symlinks to them for the MAC addresses of the appropriate nodes. These files will reside in the tftp root of your PXE server, in my case /usr/local/tftp, eg;

grub.cfg-01-52-54-00-42-3b-fd -> bootstrap

grub.cfg-01-6c-4b-90-08-a6-ff -> control-plane

grub.cfg-01-6c-4b-90-53-8d-a6 -> control-plane

grub.cfg-01-6c-4b-90-a8-ce-cf -> control-plane

grub.cfg-01-6c-4b-90-0a-18-a6 -> compute-node

grub.cfg-01-6c-4b-90-32-2c-0a -> compute-node

grub.cfg-01-6c-4b-90-43-8c-03 -> compute-node

Bootstrap grub.cfg

set default="1"

function load_video {

insmod efi_gop

insmod efi_uga

insmod video_bochs

insmod video_cirrus

insmod all_video

}

load_video

set gfxpayload=keep

insmod gzio

insmod part_gpt

insmod ext2

set timeout=5

### END /etc/grub.d/00_header ###

### BEGIN /etc/grub.d/10_linux ###

menuentry 'Install OCP 4.18.1 Bootstrap Node' --class fedora --class gnu-linux --class gnu --class os {

linux images/prod/rhcos-418.94.202501221327-0-live-kernel-x86_64 coreos.inst.install_dev=/dev/vda coreos.live.rootfs_url=https://opns01.cudanet.org/pxeboot/prod/rhcos-418.94.202501221327-0-live-rootfs.x86_64.img coreos.inst.ignition_url=https://opns01.cudanet.org/pxeboot/prod/bootstrap.ign ignition.firstboot ignition.platform.id=metal

initrd images/prod/rhcos-418.94.202501221327-0-live-initramfs.x86_64.img

}

Keep in mind, the root device on a VM is going to be /dev/vda, whereas my bare metal nodes use /dev/nvme0n1.

Control Plane grub.cfg

set default="1"

function load_video {

insmod efi_gop

insmod efi_uga

insmod video_bochs

insmod video_cirrus

insmod all_video

}

load_video

set gfxpayload=keep

insmod gzio

insmod part_gpt

insmod ext2

set timeout=5

### END /etc/grub.d/00_header ###

### BEGIN /etc/grub.d/10_linux ###

menuentry 'Install OCP 4.18.1 Control Plane Node' --class fedora --class gnu-linux --class gnu --class os {

linux images/prod/rhcos-418.94.202501221327-0-live-kernel-x86_64 coreos.inst.install_dev=/dev/nvme0n1 coreos.live.rootfs_url=https://opns01.cudanet.org/pxeboot/prod/rhcos-418.94.202501221327-0-live-rootfs.x86_64.img coreos.inst.ignition_url=https://opns01.cudanet.org/pxeboot/prod/master.ign ignition.firstboot ignition.platform.id=metal

initrd images/prod/rhcos-418.94.202501221327-0-live-initramfs.x86_64.img

}

Compute Node grub.cfg

set default="1"

function load_video {

insmod efi_gop

insmod efi_uga

insmod video_bochs

insmod video_cirrus

insmod all_video

}

load_video

set gfxpayload=keep

insmod gzio

insmod part_gpt

insmod ext2

set timeout=5

### END /etc/grub.d/00_header ###

### BEGIN /etc/grub.d/10_linux ###

menuentry 'Install OCP 4.18.1 Compute Node' --class fedora --class gnu-linux --class gnu --class os {

linux images/prod/rhcos-418.94.202501221327-0-live-kernel-x86_64 coreos.inst.install_dev=/dev/nvme0n1 coreos.live.rootfs_url=https://opns01.cudanet.org/pxeboot/prod/rhcos-418.94.202501221327-0-live-rootfs.x86_64.img coreos.inst.ignition_url=https://opns01.cudanet.org/pxeboot/prod/worker.ign ignition.firstboot ignition.platform.id=metal

initrd images/prod/rhcos-418.94.202501221327-0-live-initramfs.x86_64.img

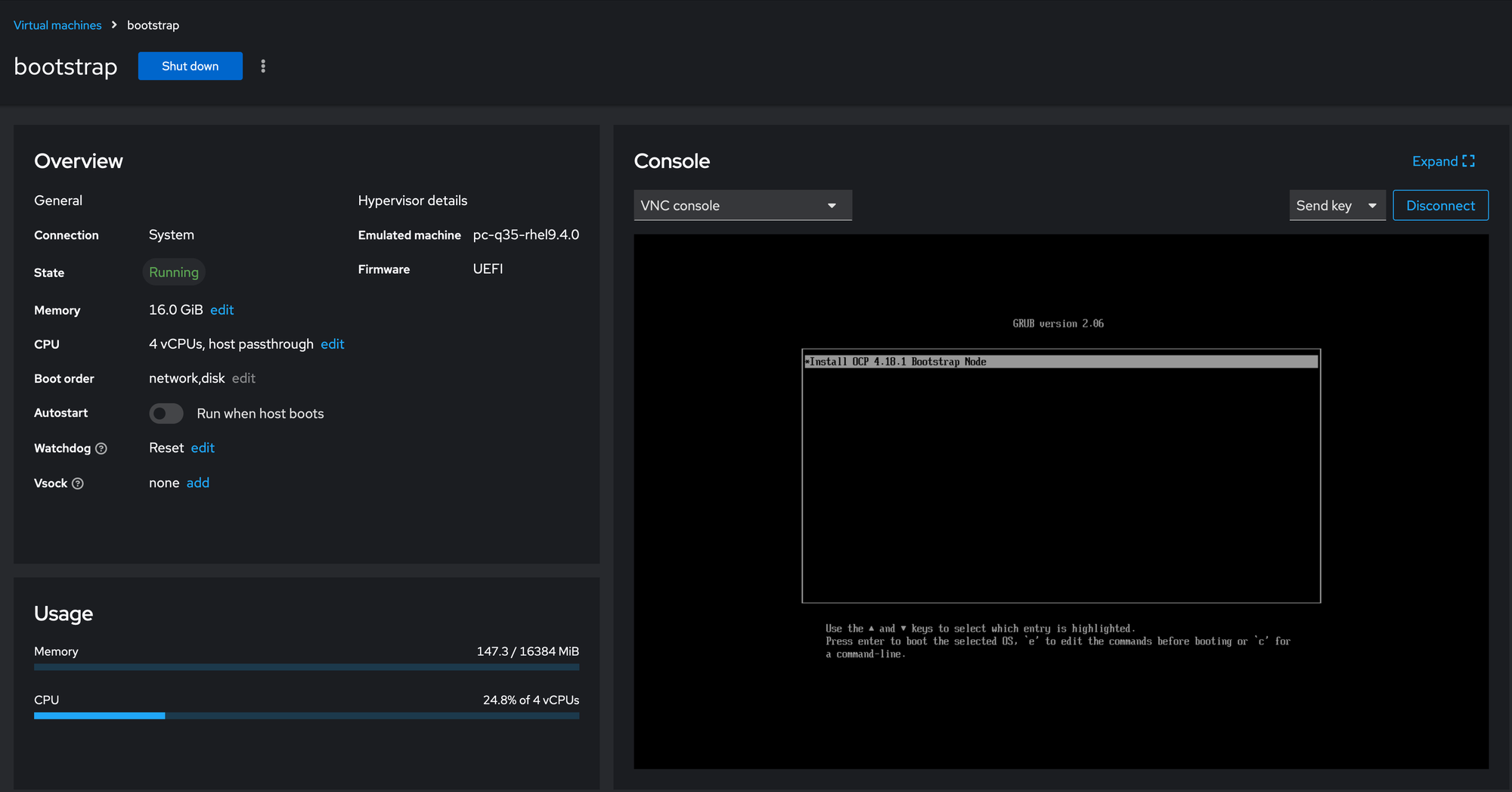

}Take your time, make sure the absolute and relative paths are correct and that your root device is set correctly. We're not going to be quite ready to bootstrap the cluster yet as we still need to configure our bare metal node BIOS settings, but it's probably a good idea at this time to fire up your bootstrap VM check that it can successfully PXE boot the RHCOS installer.

I use KVM on RHEL, but you can literally use any hypervisor, or even a physical device like a spare laptop, as long as you can put your bootstrap node on the appropriate VLAN. Just make sure to use the correct root device name if you use something different.

Now that we've verified that our PXE server works we are ready to configure our bare metal nodes for PXE booting.
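Looking back at the three grub.cfg files, they only differ in the menu title, the install device and the ignition file, so they can be rendered from one template. This is a sketch under that assumption: `render_grub_cfg`, `RHCOS_VER` and `BASE_URL` are names I picked for illustration, and the template body is copied from the files above.

```shell
# Sketch: render one grub.cfg per node role from a single template.
RHCOS_VER="418.94.202501221327-0"
BASE_URL="https://opns01.cudanet.org/pxeboot/prod"

render_grub_cfg() {
    title="$1"; install_dev="$2"; ignition="$3"
    cat <<EOF
set default="1"
function load_video {
  insmod efi_gop
  insmod efi_uga
  insmod video_bochs
  insmod video_cirrus
  insmod all_video
}
load_video
set gfxpayload=keep
insmod gzio
insmod part_gpt
insmod ext2
set timeout=5
menuentry 'Install OCP 4.18.1 ${title}' --class fedora --class gnu-linux --class gnu --class os {
  linux images/prod/rhcos-${RHCOS_VER}-live-kernel-x86_64 coreos.inst.install_dev=${install_dev} coreos.live.rootfs_url=${BASE_URL}/rhcos-${RHCOS_VER}-live-rootfs.x86_64.img coreos.inst.ignition_url=${BASE_URL}/${ignition} ignition.firstboot ignition.platform.id=metal
  initrd images/prod/rhcos-${RHCOS_VER}-live-initramfs.x86_64.img
}
EOF
}

# Usage:
# render_grub_cfg 'Bootstrap Node'     /dev/vda     bootstrap.ign > bootstrap
# render_grub_cfg 'Control Plane Node' /dev/nvme0n1 master.ign    > control-plane
# render_grub_cfg 'Compute Node'       /dev/nvme0n1 worker.ign    > compute-node
```

When a new RHCOS build comes out you only bump `RHCOS_VER` and re-render, instead of editing the same long kernel line in three places.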

Step 5: Configure the UEFI Boot Options on the Nodes

As I previously mentioned, my nodes lack any kind of out of band management, so the next part takes a little bit of a leap of faith, but if you have everything configured correctly your cluster will come up without a problem. For the initial configuration and troubleshooting, I eventually broke down and bought a portable monitor on Amazon and an HDMI KVM switch for this task to make it easier. In case you're wondering these are the ones I bought

https://www.amazon.com/dp/B088D8JG3L?ref_=ppx_hzsearch_conn_dt_b_fed_asin_title_1

https://www.amazon.com/dp/B08QCR62VL?ref_=ppx_hzsearch_conn_dt_b_fed_asin_title_1

Worth noting; on the KVM switch, the HDMI ports were too close to each other to use standard sized HDMI cords, so I ended up having to Dremel off the material and wrap them with electrical tape.

With that figured out we can configure our node BIOSes.

1. Update the BIOS:

There's nothing worse than troubleshooting dumb problems and inconsistent behavior on computers, so while it's not 100% necessary, I'd HIGHLY recommend updating all your nodes to the same BIOS version to preclude any weirdness.

In my case, I get to deal with an additional "fun" step on my nodes. I boot my nodes in UEFI, but the BIOS update images are only bootable in legacy mode. I won't cover exactly how to do this because everyone's hardware is going to be a little different. Just make sure to update the BIOS to the latest version and do a full settings reset (usually F9) to make sure all your nodes are configured identically.

2. Wipe your disks:

This step is the magic bullet that makes all of this work (or fail). Boot up a Fedora thumb drive and wipe all of your disks, eg; wipefs -af /dev/nvme0n1. Then shut down the node and pull the thumb drive out.

3. Configure your BIOS settings:

Boot into your BIOS, and make sure UEFI is enabled as the boot mode. Disable any legacy boot options (CSM) to ensure only UEFI booting is enabled.

Since these are bare metal nodes, you are planning on running Openshift Virtualization right? Make sure that VT-x (and optionally, VT-d if you plan on doing device passthrough) is enabled.

While you're at it, make 100% sure that the date and time are set correctly, because RHCOS gets its time and date initially from the BIOS, and if set incorrectly the SSL certificates will be expired/not valid yet.

4. Set boot priority:

The way I set my nodes up is to default boot from nvme, and then if there's no bootable OS on the nvme, it falls back to PXE boot. That way if I ever need to redeploy the nodes, I just wipe disks and reboot and PXE takes over from there. It's pretty slick once you get all the kinks worked out (which admittedly - WILL BE - a process of trial and error, especially if you're doing this completely headless like I did for a couple years before I finally broke down and got that monitor and KVM. I used to have to lug my nodes upstairs to plug them in at my desk in order to do the BIOS configuration. It got really old after a while, especially when I was still running a 10 node cluster).

Power off your nodes and sit tight, we're still not quite ready to deploy the cluster. I know...

Step 6: Deploying the OpenShift Cluster

Once your nodes are configured for UEFI PXE booting, the next step is to use the Red Hat OpenShift installer to deploy the cluster.

1. Generate new ignition configs:

Yeah, you read that right. Generate new ignition configs, and upload them to your PXE server, overwriting the old ones. The reason why this is a good idea is that the ignition configs contain certificates that expire 24 hours after the installer generates them, so a stale set can fail the bootstrap. It's always recommended to just generate new ignition configs immediately prior to deploying a cluster. Just trust me...
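If you can't remember when you last generated them, a quick age check saves a failed bootstrap. The sketch below lists ignition files older than a threshold you choose; `stale_ignition` is a hypothetical helper, and it relies on the `-maxdepth` and `-mmin` options found in GNU and BSD find.

```shell
# Sketch: list ignition files in a directory older than a given number of hours.
stale_ignition() {
    dir="$1"; max_hours="$2"
    find "$dir" -maxdepth 1 -name '*.ign' -mmin "+$((max_hours * 60))"
}

# Usage: anything printed here should be regenerated before you power on nodes.
# stale_ignition . 4
```

Keep in mind this looks at the file's modification time, so check the directory where the installer wrote the files rather than a copy whose timestamp was reset by the upload.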

2. NOW run the Openshift Installer:

From the same directory you just generated your fresh ignition configs from, run the command openshift-install wait-for bootstrap-complete. This isn't technically required (it doesn't actually do anything to the cluster but monitor the kube api), but it'll give you some good debugging information while your nodes come up and join the cluster.

3. Power on your nodes:

Assuming you've made it through the gauntlet to this point, your nodes should automatically PXE boot the RHCOS installer, grab their ignition configs and the cluster should come up in about a half hour or so... However, I've never once successfully bootstrapped a bare metal cluster on the first shot. Not. Once.

If the cluster does not come up on the first shot, start going through things logically.

- It's always DNS.

- when it's not DNS, it's your load balancer.

- Are you sure you made that DHCP reservation correctly?

- Are you sure that the time and date are correct? Once RHCOS connects to the internet, NTP should take over and time will be set to GMT, but I've seen things like a bad CMOS battery cause time issues.

- Check your grub.cfg files for typos.

- Wipe disks. Try, try again.

A really helpful tool while you're waiting for your cluster to come up is the HAProxy stats page. It will show you the status of your nodes as they come up and join the cluster.

Once your openshift-install wait-for bootstrap-complete command says it's done (usually takes about 20 minutes), the bootstrap node has successfully created an etcd cluster and handed it off to your control plane nodes.

There's still more to be done, but at this point as long as your kubernetes API is running on its own, you can power off your bootstrap node and continue with the rest of the installation.

You can now run the command openshift-install wait-for install-complete to monitor the process. I also find it helpful to do an export KUBECONFIG=auth/kubeconfig and run the command watch -n5 oc get co which will give you a real time report of the cluster operators as they are deployed and come online.

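On a UPI cluster, the pending certificate signing requests (CSRs) from your compute nodes typically have to be approved by hand before the nodes go Ready. Each node requests a client certificate first and a serving certificate after that, so expect more than one round. You can do something like this:

CSR=$(oc get csr --no-headers | awk '/Pending/ {print $1}')

for c in $CSR; do
    oc adm certificate approve "$c"
done

Lather, rinse and repeat, as needed.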
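The repeat part can be scripted as well. A minimal sketch, assuming you're happy to approve every pending request on a lab cluster without inspecting it; `approve_rounds` and the `OC` variable are hypothetical names of mine.

```shell
# Hypothetical helper: approve whatever is Pending, for a fixed number of rounds.
# OC is overridable so the loop can be dry-run against a stub.
OC="${OC:-oc}"

approve_rounds() {
    rounds="$1"; pause="$2"
    i=0
    while [ "$i" -lt "$rounds" ]; do
        # CONDITION is the last column of `oc get csr`.
        "$OC" get csr --no-headers 2>/dev/null |
            awk '$NF == "Pending" {print $1}' |
            while read -r name; do
                "$OC" adm certificate approve "$name" </dev/null
            done
        i=$((i + 1))
        if [ "$i" -lt "$rounds" ]; then sleep "$pause"; fi
    done
}

# Usage: six rounds, 30 seconds apart
# approve_rounds 6 30
```

On anything that isn't a home lab, look at who is requesting each certificate before approving it.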

Step 7: Post-Installation Steps

Once the cluster is up, you should be able to access it at eg; https://console-openshift-console.apps.prod.ocp.cudanet.org and log in as kubeadmin with the password set in auth/kubeadmin-password.

Some logical next steps would be to add one or more storage classes, as a UPI cluster will not have a storage class out of the box. You'll also probably want to set up an IdP (Identity provider, eg; LDAP) for managing users and groups - definitely not a great idea to access your cluster as kubeadmin, as this is basically logging in as a local administrator. While certainly not required, I always set up certificate-manager-operator and replace the self signed certs for the ingress and API with valid externally signed certs (I use cloudflare + letsencrypt, both are free). You're probably going to want to deploy MetalLB as well. Since this is a bare metal cluster, you're probably going to want to spin up the Openshift Virt operator once you have a storage class set up. In addition to OCP-V, you're likely going to want to install the nmstate-operator and set up one or more bridge interfaces for your VMs.

From here, the possibilities are endless and your cluster is yours to do with as you please. (Let's be honest, you're probably just going to run Plex on it... 😂)

Conclusion

I'm not going to lie, deploying a UPI OpenShift cluster on bare metal is a long, complicated process, but it's a great learning experience. I highly recommend that everyone who works with OCP experiences this pain at least a few times, if for no other reason than to appreciate just how much easier we've made things for our customers. That being said, there are always going to be edge use cases where UPI is the only option, not just on my janky home lab. For example, on unsupported hypervisors like Proxmox or Hyper-V, or in cases where a customer's IT policy bans the use of DHCP (yes, I use DHCP, but with static reservations, which is more or less the exact same thing as doing static IPs). In that case, PXE booting isn't even an option and you have to resort to booting RHCOS from an ISO and applying network and ignition configs manually.

Good luck!