Oh No! Someone Griefed my Minecraft Server!

OADP to the rescue!

A couple of days ago, my daughter texted me: "Hey dad, I think someone raided the Minecraft server." So I logged in and, sure enough, we'd been griefed. It just so happens that, following the GA release of OADP (OpenShift APIs for Data Protection) 1.3.1, I had decided to set up some backup schedules, specifically to test out the new DataMover capabilities (hint: OADP 1.3.1 now supports both restic and kopia backends, allowing for backup of both filesystem and block PVCs to an S3 bucket).

This turned out to be the perfect opportunity to test backup and restore functionality on OADP. First I went back and looked at the logs and determined when the server had been raided, then found the most recent backup from before that point and did a restore. Boom! Back in business.

Pretty awesome, right? Shoot, it's almost like I just responded to an actual hack and had a disaster recovery event and I handled it like a real enterprise admin. Well, for all intents and purposes, that is what happened. Granted, a Minecraft server doesn't contain any sensitive data, but from a data protection perspective, OADP did exactly what it was supposed to - provide the ability to back up and restore your data.

Intro to OADP

OADP, or OpenShift APIs for Data Protection, is an operator for OpenShift based on upstream Velero. Velero is a Kubernetes-native solution that is capable of backing up and restoring desired state as well as persistent data. It is used under the hood by several OpenShift operators, such as the Migration Toolkit for Containers, and it also works standalone for performing data protection operations on your OpenShift cluster. Out of the box, OADP will work for backing up desired state (deployments, services, routes, etc.) but relies on CSI snapshots to restore PVCs. However, that assumes that the snapshots still exist and haven't been deleted or corrupted. Generally speaking, the CSI snapshot is going to live where the PVC does, so that doesn't exactly give me a warm fuzzy feeling about the security of my data. What happens if the data on the PVC or snapshot is deleted or corrupted? That's where DataMover comes into play.

What is DataMover?

OADP has a feature flag called "DataMover", which is probably what most people are thinking of when they talk about data protection - my workload is snapshotted and the snapshot data egresses my cluster to be backed up somewhere else. Makes sense, right? If you want your data to actually be backed up, with a recoverable copy of it somewhere else, that's what DataMover is for. As of the release of OADP 1.3.1, DataMover supports both restic and kopia backends, covering both filesystem and block PVCs. You just have to point it at an S3 bucket somewhere and you're off to the races. By default, OADP kind of assumes that your S3 bucket is actually an Amazon S3 bucket, but pretty much any object storage will work: Azure blob storage is supported, both NooBaa and ceph-rgw on ODF work (although I don't like the idea of the S3 bucket that backs up my data living on the same storage array as the data itself, especially with hyperconverged Ceph), or in my case, MinIO on my TrueNAS server.

Installing OADP

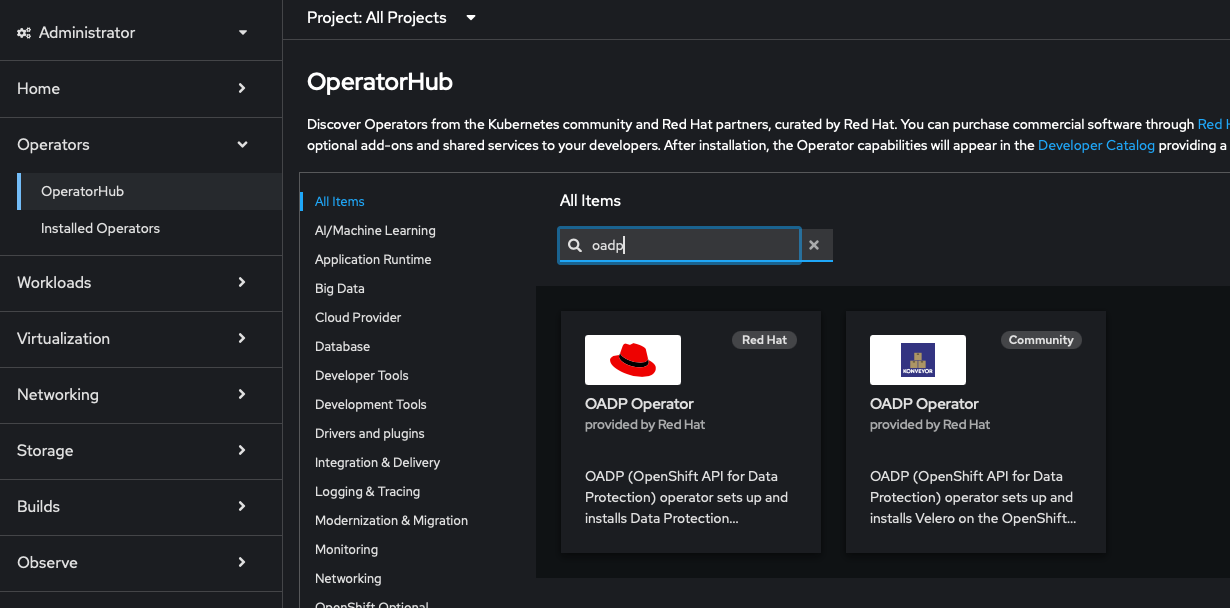

Installing OADP is very straightforward. From the OpenShift web console, navigate to Operators > OperatorHub and search for OADP.

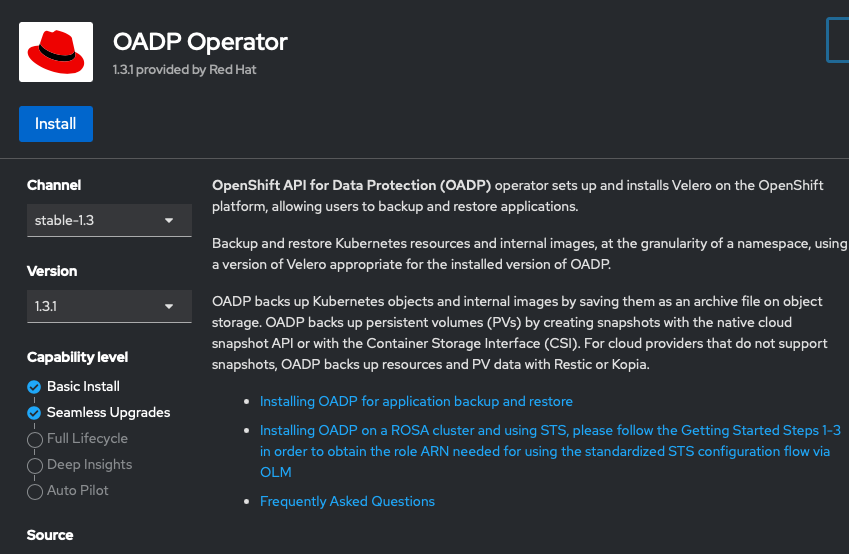

Make sure to select the latest stable version (1.3.1 at the time of writing) and click Install.

By default, OADP will install to the openshift-adp namespace, but it can be installed to multiple namespaces so that you can have individual instances configured differently. Also, several other operators make use of OADP under the hood. That's all there is to it.

Data Protection Application

Next you'll need to define a DataProtectionApplication. A DataProtectionApplication is a custom resource that defines where and how OADP can perform backups. In general, it needs to be configured with the access key and secret key for your S3 storage as well as the bucket you wish to use.

First, create a secret with your S3 credentials, e.g.:

    cat << EOF > credentials-velero
    [default]
    aws_access_key_id=YOURACCESSKEY
    aws_secret_access_key=YOURSECRETKEY
    EOF

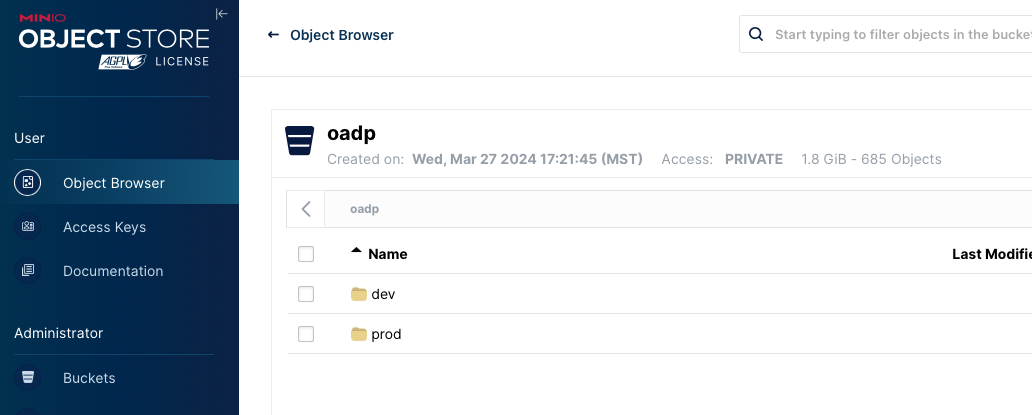

    oc create secret generic cloud-credentials -n openshift-adp --from-file cloud=credentials-velero

Next, you'll need to create a bucket on your S3 server for OADP. In my case it's called oadp (really creative, I know).

Next, you'll need to annotate the OADP namespace to enable DataMover:

    oc annotate --overwrite namespace/openshift-adp volsync.backube/privileged-movers='true'

Then you can create your DataProtectionApplication. In my experience, the form view does not expose a lot of the fields I needed to get it working, so I just define my DPA using YAML:

    apiVersion: oadp.openshift.io/v1alpha1
    kind: DataProtectionApplication
    metadata:
      name: dev-ocp
      namespace: openshift-adp
    spec:
      backupLocations:
        - velero:
            config:
              insecureSkipTLSVerify: 'true'
              profile: default
              region: us-east-1
              s3ForcePathStyle: 'true'
              s3Url: 'https://192.168.60.190:9000'
            credential:
              key: cloud
              name: cloud-credentials
            default: true
            objectStorage:
              bucket: oadp
              prefix: dev
            provider: aws
      configuration:
        nodeAgent:
          enable: true
          uploaderType: restic
        velero:
          defaultPlugins:
            - openshift
            - aws
            - kubevirt
            - csi
          defaultSnapshotMoveData: true
          defaultVolumesToFSBackup: true
          featureFlags:
            - EnableCSI
      snapshotLocations:
        - velero:
            config:
              profile: default
              region: us-east-1
            provider: aws

You can set the uploaderType to either restic or kopia. I use NFS as my default storage class so I set it to restic, but I believe kopia works with both filesystem and block PVCs. As I mentioned previously, I'm using MinIO on my TrueNAS server for S3, but OADP assumes that your S3 storage is actually Amazon S3, so we have to set a couple of dummy values in the YAML to work around that, e.g. provider: aws, region: us-east-1, etc.

Once you create the DataProtectionApplication, OADP will also create a couple of other CRs, such as a BackupStorageLocation and a VolumeSnapshotLocation. If you browse to your S3 server, you'll see that a folder named after the prefix you specified has been created in the bucket.
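If you'd rather confirm this from the CLI than from the S3 console, checking the phase of the BackupStorageLocation is a reasonable sanity check. This is a minimal sketch, assuming the generated location is named dev-ocp-1 (the name used as storageLocation in the schedule further down); bsl_phase is just a helper name made up for illustration.

```shell
# Print the phase of a BackupStorageLocation in the openshift-adp namespace.
# "Available" means Velero was able to reach the bucket.
# Assumption: the location is called dev-ocp-1 unless another name is passed.
bsl_phase() {
  bsl_name="${1:-dev-ocp-1}"
  oc -n openshift-adp get backupstoragelocation "$bsl_name" \
    -o jsonpath='{.status.phase}'
}

# Usage, against a live cluster:
#   bsl_phase dev-ocp-1
```

If it reports anything other than Available, the S3 URL, credentials, or TLS settings in the DPA are the first things I would double check.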

Run your first backup

In order to interact with your OADP instance via the CLI, you'll need to create an alias in your bash profile

    echo "alias velero='oc -n openshift-adp exec deployment/velero -c velero -it -- ./velero'" >> ~/.bash_profile
    source ~/.bash_profile

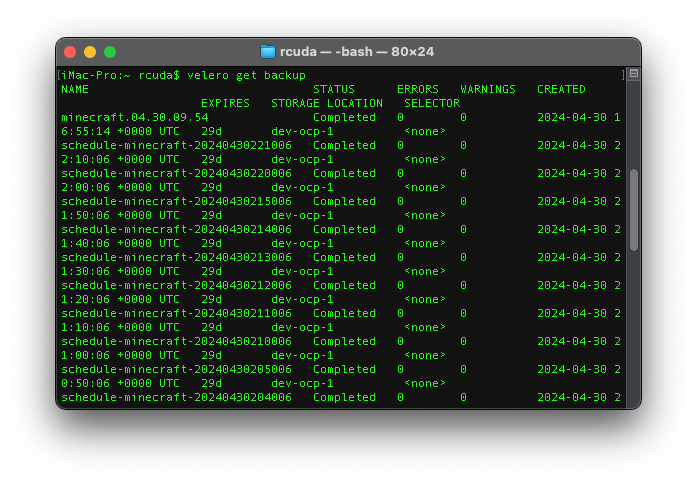

Then you can interact with OADP programmatically. It has a pretty logical syntax for a lot of things, e.g. velero get backup will list your backups, etc.
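One caveat with the alias: bash does not expand aliases in non-interactive scripts by default, so it only helps at an interactive prompt. A shell function is a small alternative that works in both places. This is a sketch of the same command wrapped in a function; dropping the -t flag is my own choice so that it also runs when there is no terminal attached.

```shell
# Same command as the alias, wrapped in a function so scripts can call it too.
# -i keeps stdin open; -t is omitted so this also works without a TTY.
velero() {
  oc -n openshift-adp exec deployment/velero -c velero -i -- ./velero "$@"
}

# Usage, against a live cluster:
#   velero get backup
```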

Now we can try running a first backup by doing something like this:

    NAMESPACE=minecraft
    velero backup create $NAMESPACE.$(date +'%m'.'%d'.'%H'.'%M') --include-namespaces $NAMESPACE --default-volumes-to-fs-backup

Then you can view the status of your backup like this:

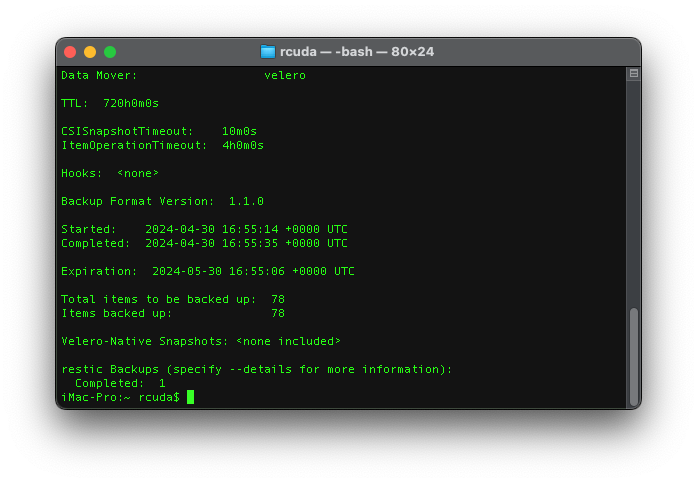

    velero backup describe minecraft.04.30.09.54
    # or
    velero backup logs minecraft.04.30.09.54

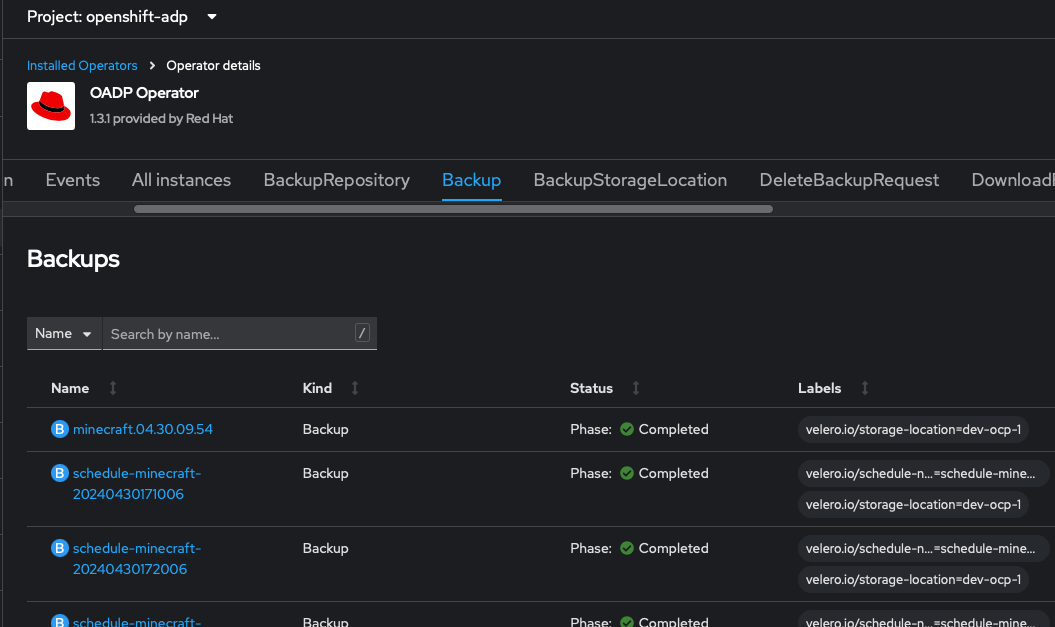

You can also see your backups in the OpenShift web console.
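If you want a script to block until a backup finishes, you can poll the phase of the Backup resource instead of re-running describe by hand. The following is a rough sketch rather than anything OADP ships: backup_phase and wait_for_backup are made-up helper names, and the default of 60 polls at 10 second intervals is an arbitrary assumption.

```shell
# Print the current phase of a Velero Backup resource.
backup_phase() {
  oc -n openshift-adp get backups.velero.io "$1" -o jsonpath='{.status.phase}'
}

# Poll until the backup reaches a terminal phase.
# $1 = backup name, $2 = max polls (assumed default 60), $3 = seconds between polls (assumed default 10)
wait_for_backup() {
  name="$1"
  tries="${2:-60}"
  interval="${3:-10}"
  while [ "$tries" -gt 0 ]; do
    phase="$(backup_phase "$name")"
    case "$phase" in
      Completed)
        echo "$phase"; return 0 ;;
      Failed|PartiallyFailed|FailedValidation)
        echo "$phase"; return 1 ;;
    esac
    tries=$((tries - 1))
    sleep "$interval"
  done
  echo "timed out waiting for $name" >&2
  return 1
}

# Usage, against a live cluster:
#   wait_for_backup minecraft.04.30.09.54
```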

Scheduling backups

Running a one-off backup is fine, but typically you're going to want to take backups at regular intervals, e.g. every night at midnight. Creating a schedule is probably easier to do via YAML than with the form view. Schedules are defined using cron format. Here's a very simple example of a backup schedule. It runs every night at midnight and backs up the minecraft namespace only.

    apiVersion: velero.io/v1
    kind: Schedule
    metadata:
      name: schedule-minecraft
      namespace: openshift-adp
    spec:
      schedule: '0 0 * * *'
      template:
        defaultVolumesToFsBackup: true
        includedNamespaces:
          - minecraft
        storageLocation: dev-ocp-1

Now, with the backup schedule set, new backups will have the naming convention schedule-minecraft-{timestamp}.
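If you end up scheduling more than one namespace, the same manifest can be templated from the shell. This is a sketch under a few assumptions: render_schedule is a hypothetical helper, the storage location is hard-coded to dev-ocp-1 as in the example, and the schedule name follows the schedule-{namespace} pattern used there.

```shell
# Cron fields, left to right: minute, hour, day of month, month, day of week.
# '0 0 * * *'  -> every day at 00:00
# '30 2 * * 0' -> Sundays at 02:30

# Print a Schedule manifest for one namespace.
# $1 = namespace, $2 = cron expression (defaults to nightly at midnight)
render_schedule() {
  ns="$1"
  cron="${2:-0 0 * * *}"
  cat <<EOF
apiVersion: velero.io/v1
kind: Schedule
metadata:
  name: schedule-$ns
  namespace: openshift-adp
spec:
  schedule: '$cron'
  template:
    defaultVolumesToFsBackup: true
    includedNamespaces:
      - $ns
    storageLocation: dev-ocp-1
EOF
}

# Usage, against a live cluster:
#   render_schedule minecraft '0 0 * * *' | oc apply -f -
```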

Performing a Restore

Restores are probably going to be performed few and far between, but as a data protection admin in a past life, believe me - you really want to test your backups on a regular basis. Backups are only worthwhile if you can actually restore them, and restoring them is the only way to test if your backups are good. Test. Restores. Regularly.

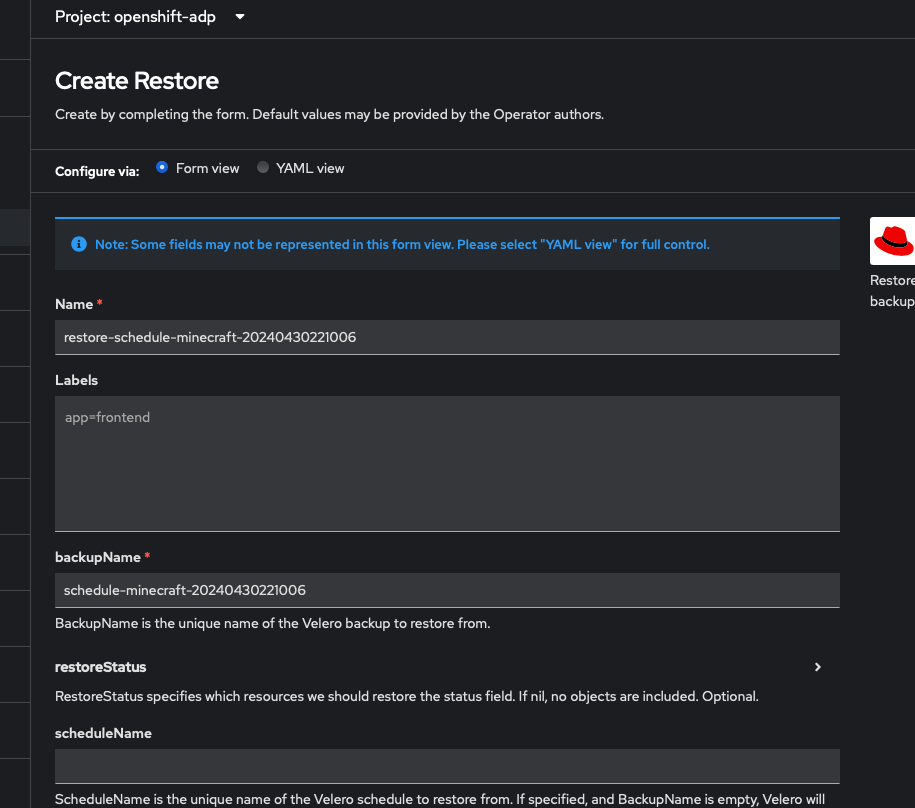

Performing a restore is pretty straightforward, and it's actually one of the few things that is easier to do with the form view. Figure out which backup you want to restore from, e.g. schedule-minecraft-20240430221006.
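Picking the backup is just a matter of finding the newest one taken before the incident. Since scheduled backups end in a 14-digit timestamp, that can be scripted. Here is a minimal sketch: latest_backup_before is a made-up helper, the cutoff must be in the same YYYYMMDDHHMMSS form, and it assumes the cutoff is expressed in the same timezone as the timestamps in the backup names.

```shell
# Read backup names on stdin and print the newest one whose timestamp
# suffix is strictly earlier than the cutoff.
# $1 = cutoff in YYYYMMDDHHMMSS form
latest_backup_before() {
  cutoff="$1"
  best=""
  best_ts=""
  while IFS= read -r name; do
    ts="${name##*-}"                 # text after the last '-'
    case "$ts" in
      ""|*[!0-9]*) continue ;;       # skip names without a numeric suffix
    esac
    [ "${#ts}" -eq 14 ] || continue  # skip anything that is not 14 digits
    if [ "$ts" -lt "$cutoff" ] && { [ -z "$best_ts" ] || [ "$ts" -gt "$best_ts" ]; }; then
      best="$name"
      best_ts="$ts"
    fi
  done
  if [ -n "$best" ]; then
    printf '%s\n' "$best"
  else
    return 1
  fi
}

# Usage, against a live cluster:
#   oc -n openshift-adp get backups.velero.io --no-headers \
#     -o custom-columns=NAME:.metadata.name | latest_backup_before 20240430120000
```

One-off backups named like minecraft.04.30.09.54 have no such suffix, so this sketch simply ignores them.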

Click on Create Restore and define the name and backupName.

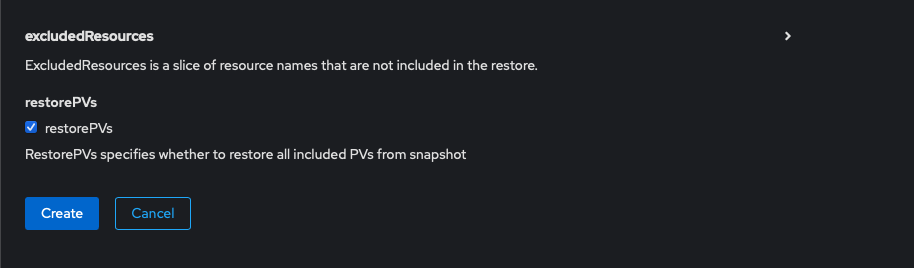

Then, since we need to restore the data on the PVC to a point before my Minecraft server got raided, we need to check the restorePVs box.

Then click Create. That's it. OADP will spin up a pod that copies the data back from your S3 bucket to a PVC in the appropriate namespace, which may take a while depending on the volume of data. This is a Minecraft server, so we're talking less than 100 MB. Once the restore is complete, you're done.
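For completeness, the form fields above map onto a Velero Restore resource, so the same restore can be expressed as YAML. This is a sketch, not what the console generates verbatim: render_restore is a hypothetical helper and the restore-{backup} naming is just my assumption for a readable name.

```shell
# Print a Restore manifest for a given backup, with PV restore enabled
# (the equivalent of ticking the restorePVs box in the form view).
# $1 = backup name
render_restore() {
  backup="$1"
  cat <<EOF
apiVersion: velero.io/v1
kind: Restore
metadata:
  name: restore-$backup
  namespace: openshift-adp
spec:
  backupName: $backup
  restorePVs: true
EOF
}

# Usage, against a live cluster:
#   render_restore schedule-minecraft-20240430221006 | oc apply -f -
```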

Before:

After: