More Fun with TrueNAS Scale and Democratic CSI

I recently migrated from TrueNAS Core to TrueNAS Scale. I'm not going to lie, getting to the point where I had all the bugs worked out has been a struggle. Even after I finally managed to get everything working, I was still running into some intermittent issues, particularly with the Democratic CSI drivers, which provide NFS and iSCSI storage for my OpenShift clusters.

When I migrated from TrueNAS Core to TrueNAS Scale, I was under the mistaken impression that you had to use the democratic-csi API storage drivers, which are still marked as 'experimental' (see here https://github.com/democratic-csi/democratic-csi). Given the inconsistent behavior I've witnessed from the API drivers with certain workloads, they live up to that status. In particular, I've had a lot of problems with OpenShift Virtualization, especially on VM creation. Certainly, my initial attempt at setting up democratic-csi on OpenShift using my existing configuration failed. It turns out that you can still use the stable SSH key based drivers, but a few things have changed between TrueNAS Core and TrueNAS Scale. Nothing particularly drastic, but my configuration needed a few adjustments to get them working.

First and foremost, on Debian-based TrueNAS Scale, there is no "wheel" group. The correct user and group to use is "root:root" or "0:0". Second, you can use a non-root user (admin) on TrueNAS Scale for additional security if you don't want to configure root SSH access, which is nice.

Configure SSH Access

Configuring SSH access on TrueNAS Scale is pretty different from how you configure SSH access on TrueNAS Core, and the process was far from intuitive.

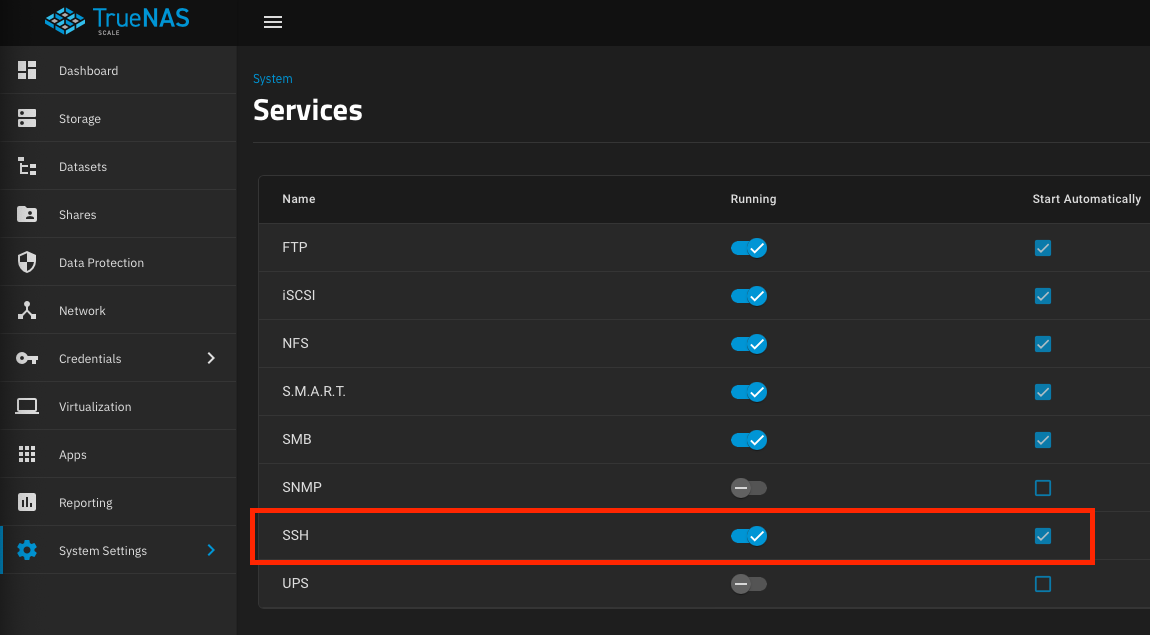

1. Enable the SSH service

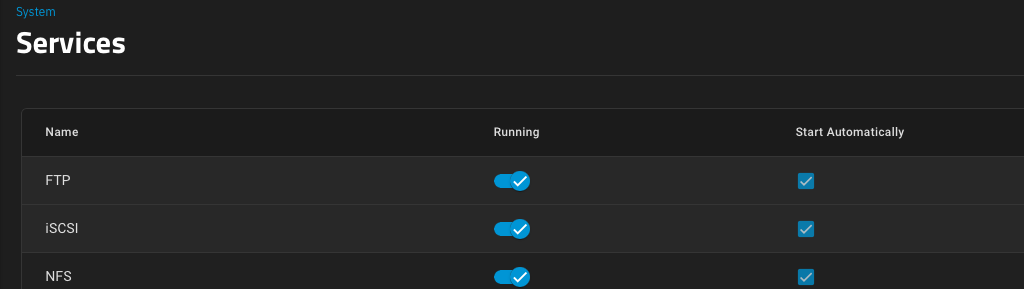

From the TrueNAS web UI, navigate to System Settings > Services and toggle the SSH server on. Make sure to check the Start Automatically box.

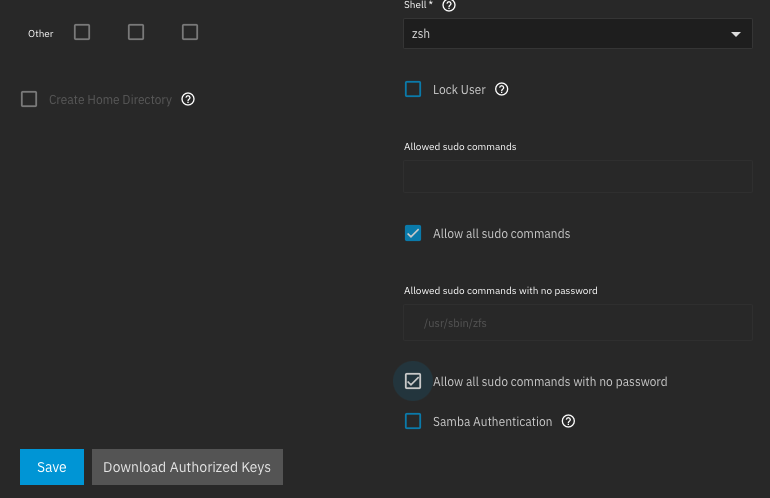

2. Configure passwordless sudo for the admin user

Navigate to Credentials > Local Users > admin and select Edit. Under the admin user config screen, toggle Allow all sudo commands and Allow all sudo commands with no password, then click Save.

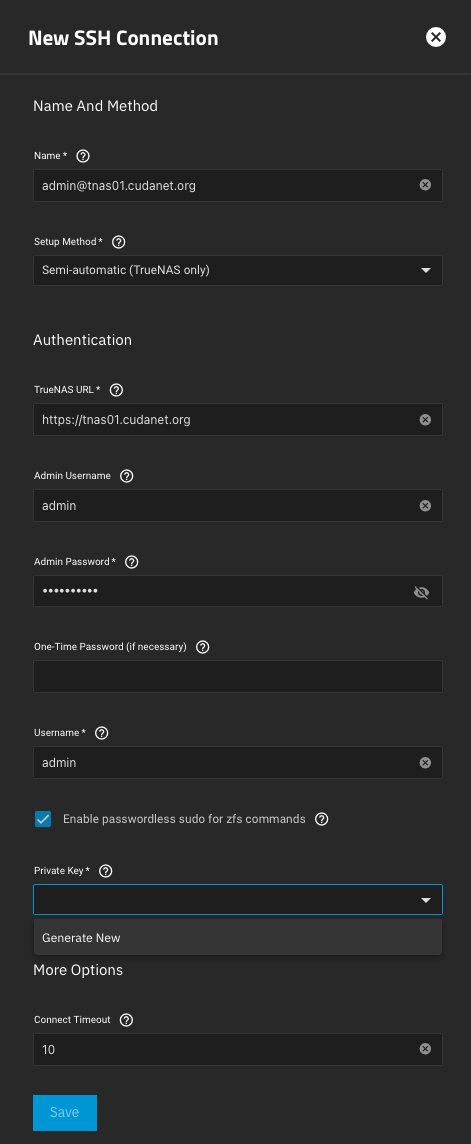

3. Create the SSH keys

This part was extremely unintuitive. To create an SSH keypair for an account, you have to navigate to Credentials > Backup Credentials > SSH Connections and select Add. Then, under the 'New SSH Connection' wizard, configure your connection as follows:

Name: admin@tnas01.cudanet.org

Setup Method: Semi-automatic

TrueNAS URL: https://tnas01.cudanet.org

Admin Username: admin

Admin Password: <password>

Username: admin

Enable passwordless sudo for zfs commands: checked (very important!)

Private Key: Select 'Generate New'

4. Add the SSH key to the admin user

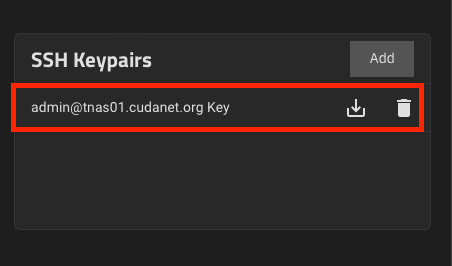

Navigate to Credentials > Backup Credentials > SSH Keypairs and download the new keypair that was generated.

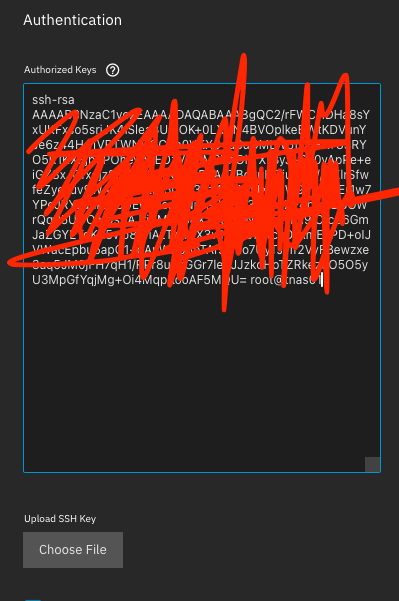

Copy the contents of the SSH public key. Navigate back to Credentials > Local Users > admin and paste the contents of the SSH public key into the Authorized Keys section under the admin user account (if it's not automatically populated). Also, I'm not sure if this part is 100% necessary, but it's probably a good idea to change the trailing root@tnas01 at the end of the key to admin@tnas01.

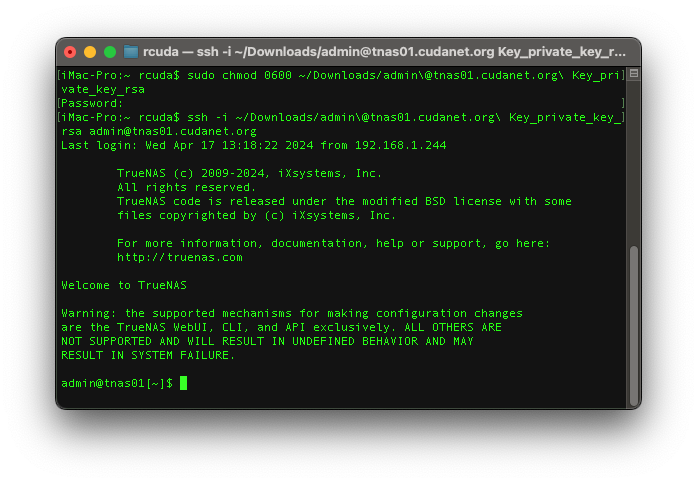

Then it's probably a good idea to check whether your ssh keys work.

    sudo chmod 0600 ~/Downloads/admin\@tnas01.cudanet.org\ Key_private_key_rsa

    ssh -i ~/Downloads/admin\@tnas01.cudanet.org\ Key_private_key_rsa admin@tnas01.cudanet.org

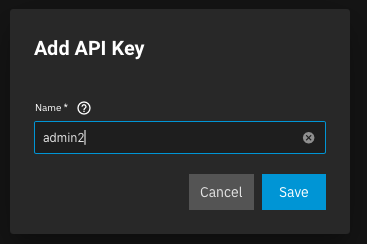

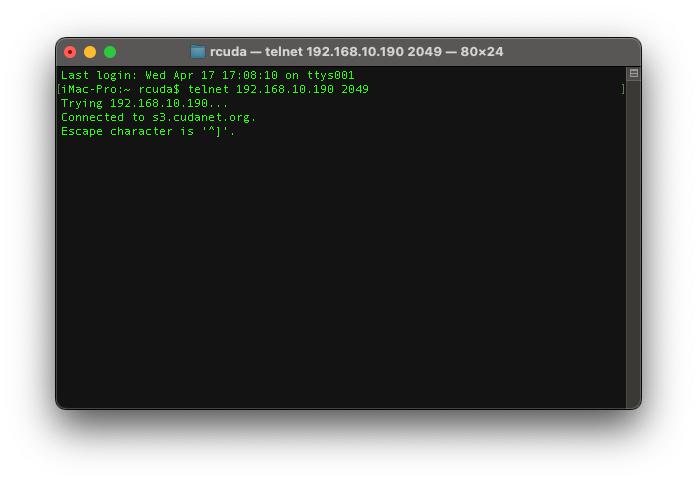
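Since democratic-csi runs its zfs commands through sudo over this same SSH connection, it may also be worth confirming that sudo works without a password prompt. This is only a minimal sketch: `check_sudo_zfs` is a hypothetical helper name, the key path assumes the file is still in ~/Downloads, and the zfs path is the one used in the values files later in this post.

```shell
# Assumed key location; adjust to wherever you saved the private key.
KEY="$HOME/Downloads/admin@tnas01.cudanet.org Key_private_key_rsa"

# Hypothetical helper: list the top-level datasets through sudo, non-interactively.
# `sudo -n` fails instead of prompting if a password would be required, and
# BatchMode stops ssh itself from falling back to a password prompt.
check_sudo_zfs() {
  ssh -i "$KEY" -o BatchMode=yes -o ConnectTimeout=5 "$1" \
    'sudo -n /usr/sbin/zfs list -H -o name -d 0'
}

# Usage (not run here): check_sudo_zfs admin@tnas01.cudanet.org
```

If this prints your pool names without asking for anything, the SSH driver should be able to run zfs the same way.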

Create an API key

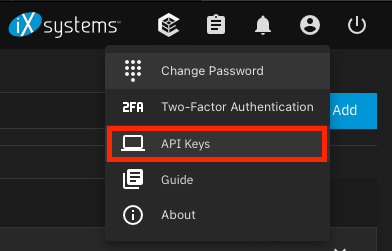

Although the standard drivers use SSH keys to do the actual provisioning tasks, they still use the API to determine things about the system like the version of TrueNAS you're running. To generate an API key, click on the User icon up in the top right corner and select API Keys.

Then select Add API Key, give it a unique name and select Save.

Copy the API key somewhere safe, because you'll never be able to see it again.

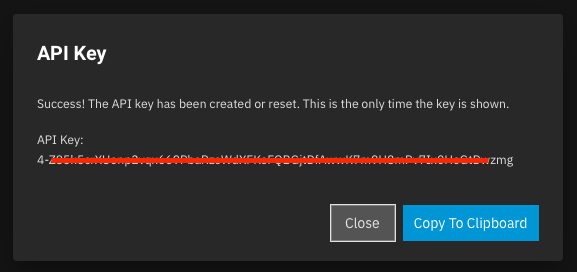
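Before handing the key to democratic-csi, you can check that it works by asking the API for the system version, which is the kind of query the driver makes. A rough sketch, assuming the TrueNAS REST API v2.0 `system/version` endpoint and bearer-token authentication with the API key; `api_url` and `truenas_version` are hypothetical helper names.

```shell
# Hostname from this post; adjust for your own system.
TRUENAS_HOST="tnas01.cudanet.org"

# Build the URL for a REST API v2.0 endpoint, e.g. system/version.
api_url() {
  printf 'https://%s/api/v2.0/%s\n' "$TRUENAS_HOST" "$1"
}

# Hypothetical helper: request the TrueNAS version, passing the API key as a bearer token.
# --insecure skips certificate verification (useful with a self-signed certificate).
truenas_version() {
  curl --silent --show-error --insecure \
    --header "Authorization: Bearer $1" \
    "$(api_url system/version)"
}

# Usage (not run here): truenas_version "<your-api-key>"
```

A version string in the response means the key is valid; an authentication error means it was copied incorrectly or has been revoked.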

Configure NFS

First, you need to make sure the NFS service is enabled and listening on the appropriate interface(s). You can also just allow it to listen on all interfaces by not specifying any particular interface. Navigate to System Settings > Services and toggle the NFS service on, and make sure it's set to start automatically. You may want to check the settings and configure them accordingly.

Then we need to make sure the parent datasets for volumes and snapshots exist at the appropriate paths. In my case, these reside under /mnt/pool0/csi/{snaps,vols}.

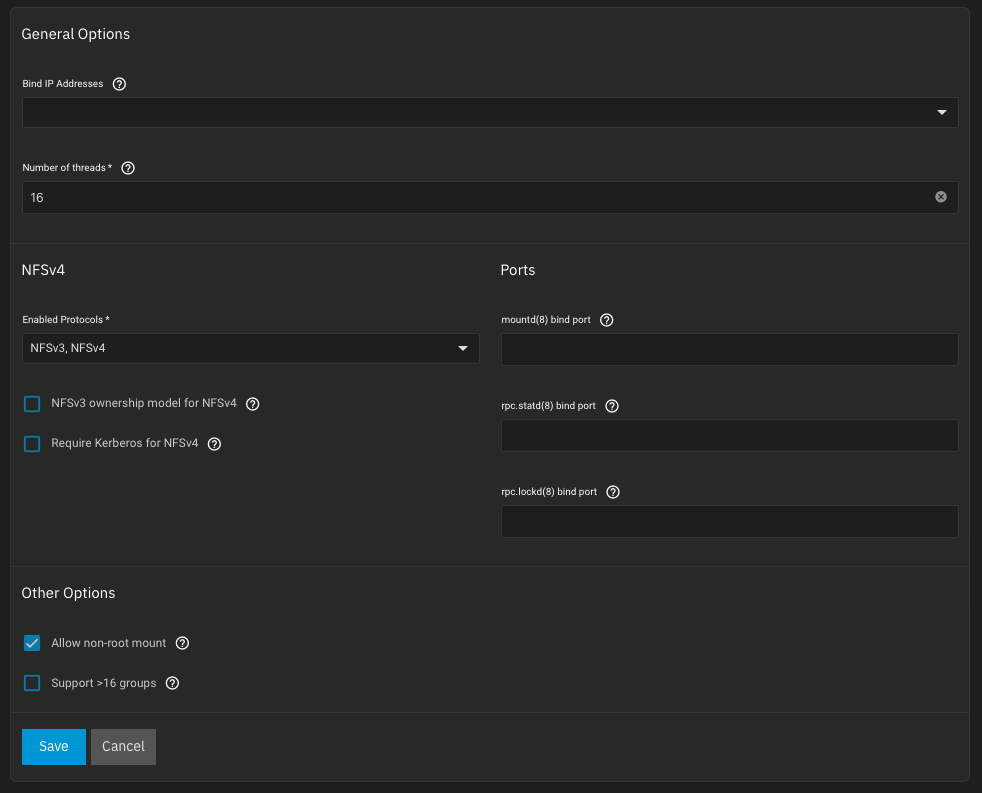
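If you'd rather create the parent datasets from a shell than from the web UI, something like the following might do it. It's a sketch under the assumption that your layout matches mine (pool0/csi/vols and pool0/csi/snaps); `csi_dataset_cmds` is a hypothetical helper that only prints the commands so you can review them first.

```shell
# Print the commands that would create the vols and snaps datasets under a parent.
# Nothing is executed here; `zfs create -p` also creates missing parent datasets.
csi_dataset_cmds() {
  for child in vols snaps; do
    printf 'sudo zfs create -p %s/%s\n' "$1" "$child"
  done
}

csi_dataset_cmds pool0/csi
```

Once the output looks right, you could pipe it to a shell on the NAS, for example `csi_dataset_cmds pool0/csi | ssh admin@tnas01.cudanet.org sh`.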

Now let's verify that the service is listening on the appropriate interface and port
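One way to check this from a client machine is to probe the NFS port with netcat. This is a minimal sketch, assuming `nc` is installed on the client and that NFSv4 is served on the standard TCP port 2049; `port_open` is a hypothetical helper name.

```shell
# Succeed if a TCP connection to HOST:PORT can be opened within 3 seconds.
# -z only probes the port without sending data; -w sets the timeout.
port_open() {
  nc -z -w 3 "$1" "$2" >/dev/null 2>&1
}

# Usage (not run here), with the NFS address used in this post:
#   port_open 192.168.10.190 2049 && echo "NFS is reachable"
```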

Configure iSCSI

iSCSI is a little more complicated to set up than NFS. I don't use CHAP authentication so it's not that bad to set up. First, navigate to System Settings > Services and toggle on the iSCSI service, and make sure it's set to start automatically.

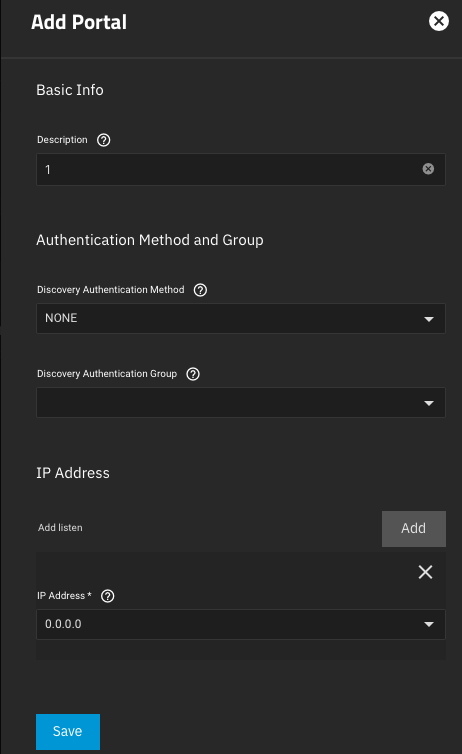

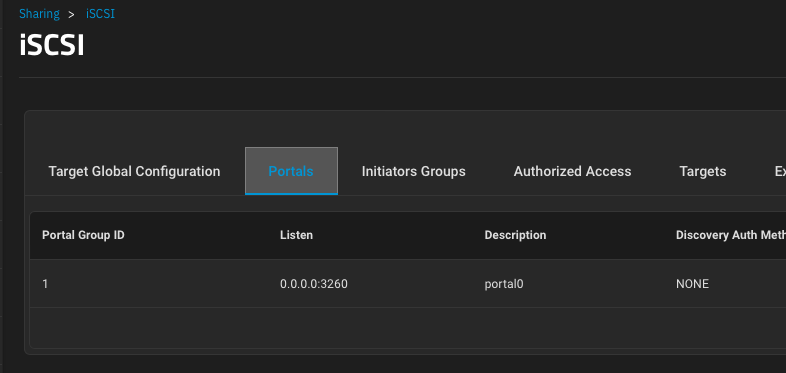

I set my portal to listen on all interfaces to keep things simple, but just like NFS, you can limit it to specific interface(s), which would be very useful if you have, e.g., a separate 10GbE storage area network (SAN) and you want to segregate iSCSI traffic to that. Worth noting, if you go down this route, you'd probably want to enable jumbo frames (9000 MTU) on the interface, but that is not within the scope of this tutorial.

Create your first portal, e.g., 1.

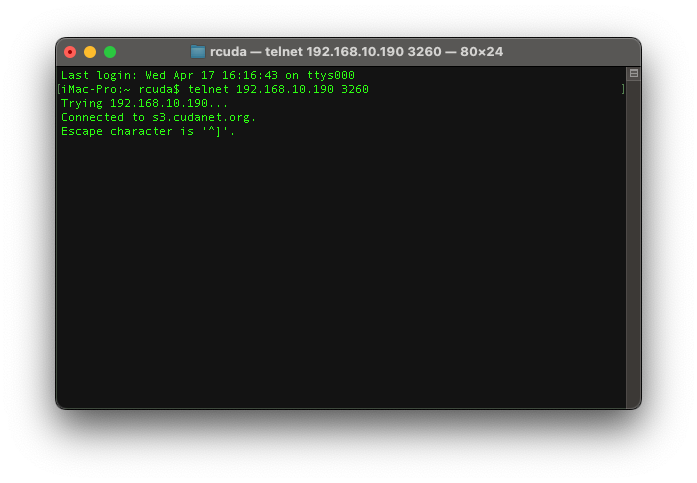

In my case, I have portal 1 which listens on all interfaces on port 3260 TCP, or 0.0.0.0:3260 and it does not use CHAP authentication.

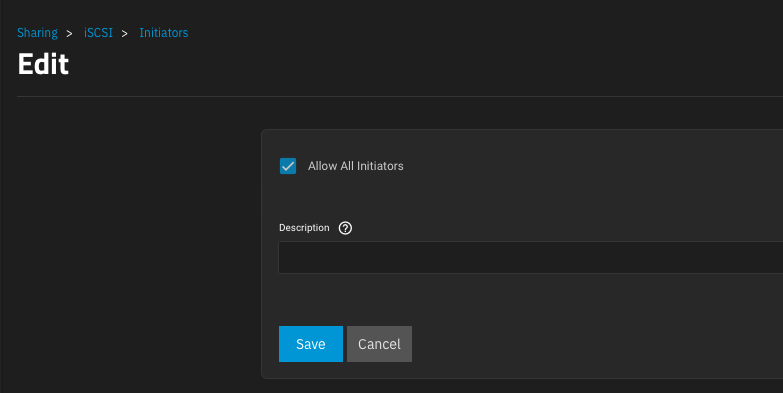

Then create your initiator group. Select Allow All Initiators. You don't necessarily have to give it a description. In my case, I've created Initiator Group 1 and configured the iSCSI service to allow all initiators. Again, you can really tighten down the security on this if you want, but it isn't necessary in my lab.

Let's make sure that the service is indeed listening on the correct interface and port.
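A quick way to do that is to look at the listening sockets on the NAS itself. The sketch below assumes `ss` is available on TrueNAS Scale (it is Debian-based) and that the portal uses port 3260 as configured above; `listening_on` is a hypothetical helper that filters `ss -tln` output.

```shell
# Read `ss -tln` output on stdin and succeed if some socket listens on PORT.
# Column 4 is "Local Address:Port"; the port is whatever follows the last colon,
# which also covers IPv6 entries such as [::]:3260.
listening_on() {
  awk -v port="$1" '
    NR > 1 { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
    END { exit !found }
  '
}

# Usage (not run here):
#   ssh admin@tnas01.cudanet.org ss -tln | listening_on 3260 && echo "iSCSI portal is up"
```

The same filter works for NFS by passing 2049 instead of 3260.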

Deploy Democratic CSI

Now with NFS and iSCSI configured, and our API key and SSH keys in hand, we can deploy democratic-csi. Democratic-csi is typically deployed as a Helm chart; you just need to provide it with values.yaml files for the NFS and iSCSI services. These are the values files that worked for me:

NFS:

    csiDriver:
      # should be globally unique for a given cluster
      name: "org.democratic-csi.nfs"

    # add note here about volume expansion requirements
    storageClasses:
    - name: truenas-nfs-nonroot
      defaultClass: false
      reclaimPolicy: Delete
      volumeBindingMode: Immediate
      allowVolumeExpansion: true
      parameters:
        # for block-based storage can be ext3, ext4, xfs
        # for nfs should be nfs
        fsType: nfs

        # if true, volumes created from other snapshots will be
        # zfs send/received instead of zfs cloned
        # detachedVolumesFromSnapshots: "false"

        # if true, volumes created from other volumes will be
        # zfs send/received instead of zfs cloned
        # detachedVolumesFromVolumes: "false"

      mountOptions:
      - noatime
      - nfsvers=4
      secrets:
        provisioner-secret:
        controller-publish-secret:
        node-stage-secret:
        node-publish-secret:
        controller-expand-secret:

    # if your cluster supports snapshots you may enable below
    volumeSnapshotClasses: []
    #- name: truenas-nfs-csi
    #  parameters:
    #  # if true, snapshots will be created with zfs send/receive
    #  # detachedSnapshots: "false"
    #  secrets:
    #    snapshotter-secret:

    driver:
      config:
        driver: freenas-nfs
        instance_id:
        httpConnection:
          protocol: https
          host: tnas01.cudanet.org
          port: 443
          apiKey: <your-api-key>
          username: admin
          allowInsecure: true
        sshConnection:
          host: 192.168.10.190
          port: 22
          username: admin
          privateKey: |
            -----BEGIN OPENSSH PRIVATE KEY-----
            b3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAABlwAAAAdzc2gtcn
            ...
            hlA+2q2t2yzk3RAAAAC3Jvb3RAdG5hczAxAQIDBAUGBw==
            -----END OPENSSH PRIVATE KEY-----
        zfs:
          cli:
            sudoEnabled: true
            paths:
              zfs: /usr/sbin/zfs
              zpool: /usr/sbin/zpool
              sudo: /usr/bin/sudo
              chroot: /usr/sbin/chroot
          datasetParentName: pool0/csi/vols
          detachedSnapshotsDatasetParentName: pool0/csi/snaps
          datasetEnableQuotas: true
          datasetEnableReservation: false
          datasetPermissionsMode: "0777"
          datasetPermissionsUser: 0
          datasetPermissionsGroup: 0
        nfs:
          shareHost: 192.168.10.190
          shareAlldirs: false
          shareAllowedHosts: []
          shareAllowedNetworks: []
          shareMaprootUser: root
          shareMaprootGroup: root
          shareMapallUser: ""
          shareMapallGroup: ""

iSCSI:

    csiDriver:
      name: "org.democratic-csi.iscsi"

    storageClasses:
    - name: truenas-iscsi-nonroot
      defaultClass: false
      reclaimPolicy: Delete
      volumeBindingMode: Immediate
      allowVolumeExpansion: true
      parameters:
        fsType: ext4
      mountOptions: []
      secrets:
        provisioner-secret:
        controller-publish-secret:
        node-stage-secret:
        node-publish-secret:
        controller-expand-secret:

    driver:
      config:
        driver: freenas-iscsi
        instance_id:
        httpConnection:
          protocol: https
          host: tnas01.cudanet.org
          port: 443
          apiKey: <your-api-key>
          username: admin
          allowInsecure: true
          apiVersion: 2
        sshConnection:
          host: 192.168.10.190
          port: 22
          username: admin
          privateKey: |
            -----BEGIN OPENSSH PRIVATE KEY-----
            b3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAABlwAAAAdzc2gtcn
            ...
            hlA+2q2t2yzk3RAAAAC3Jvb3RAdG5hczAxAQIDBAUGBw==
            -----END OPENSSH PRIVATE KEY-----
        zfs:
          cli:
            sudoEnabled: true
            paths:
              zfs: /usr/sbin/zfs
              zpool: /usr/sbin/zpool
              sudo: /usr/bin/sudo
              chroot: /usr/sbin/chroot
          datasetParentName: pool0/csi/vols
          detachedSnapshotsDatasetParentName: pool0/csi/snaps
          zvolCompression:
          zvolDedup:
          zvolEnableReservation: false
          zvolBlocksize:
        iscsi:
          targetPortal: [192.168.10.190:3260]
          targetPortals: []
          interface:
          namePrefix: prod-
          nameSuffix: ""
          targetGroups:
            - targetGroupPortalGroup: 1
              targetGroupInitiatorGroup: 1
              targetGroupAuthType: None
          extentInsecureTpc: true
          extentXenCompat: false
          extentDisablePhysicalBlocksize: true
          extentBlocksize: 4096
          extentRpm: "SSD"
          extentAvailThreshold: 0

Then you can deploy democratic-csi with helm like this:

oc new-project democratic-csi

helm repo add democratic-csi https://democratic-csi.github.io/charts/

helm repo update

helm search repo democratic-csi/

helm upgrade \

--install \

--values iscsi-values.yaml \

--namespace democratic-csi \

zfs-iscsi democratic-csi/democratic-csi

helm upgrade \

--install \

--values nfs-values.yaml \

--namespace democratic-csi \

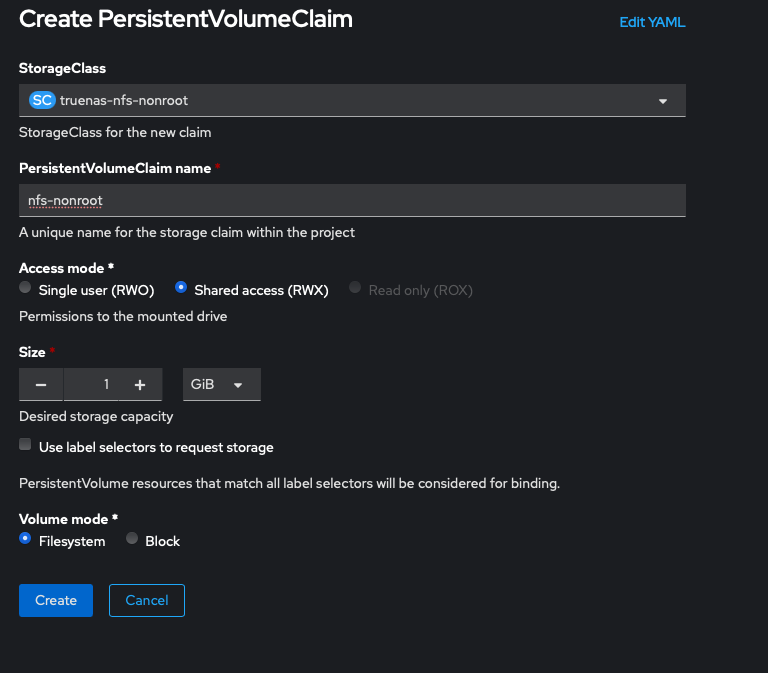

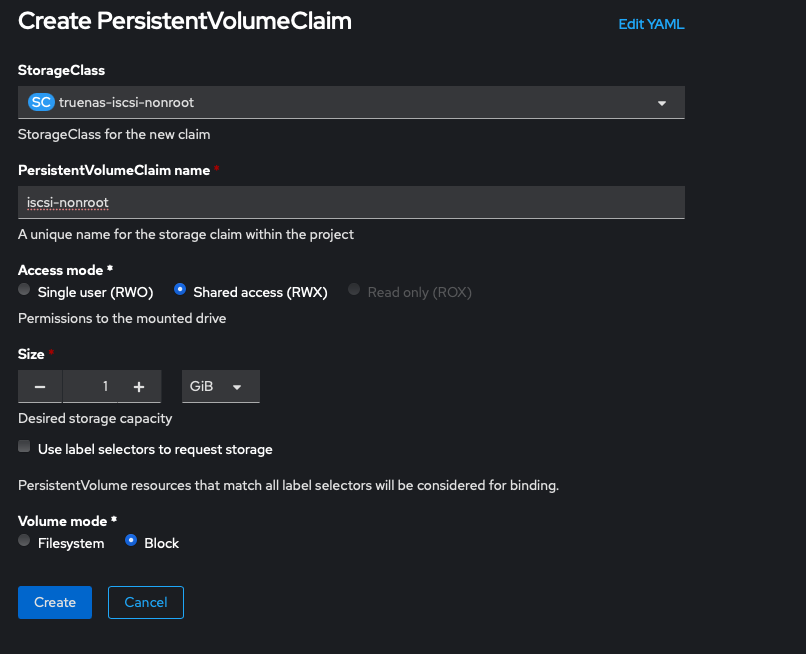

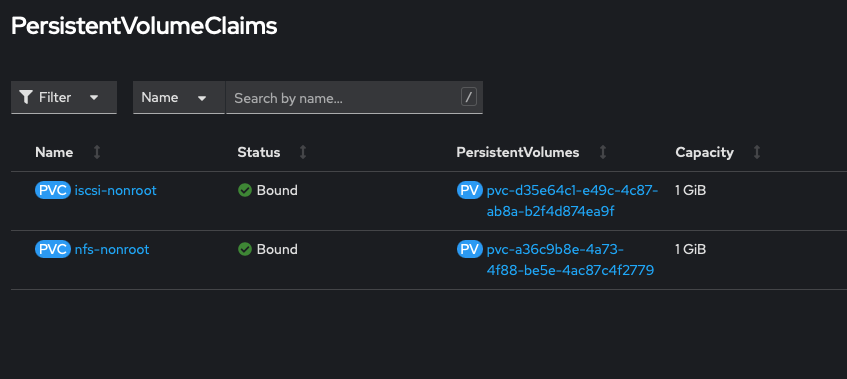

zfs-nfs democratic-csi/democratic-csitemplate argument to spit out all the manifests it creates into yaml files so that I can point it at my internal container registry, which I've written about here in my previous article about converting from TrueNAS Core to TrueNAS ScaleAnd now it would prudent to test creating both iSCSI and NFS PVCs. You should have two new storage classes called truenas-iscsi-nonroot and truenas-nfs-nonroot.

After we click Create the PVC should be bound almost immediately

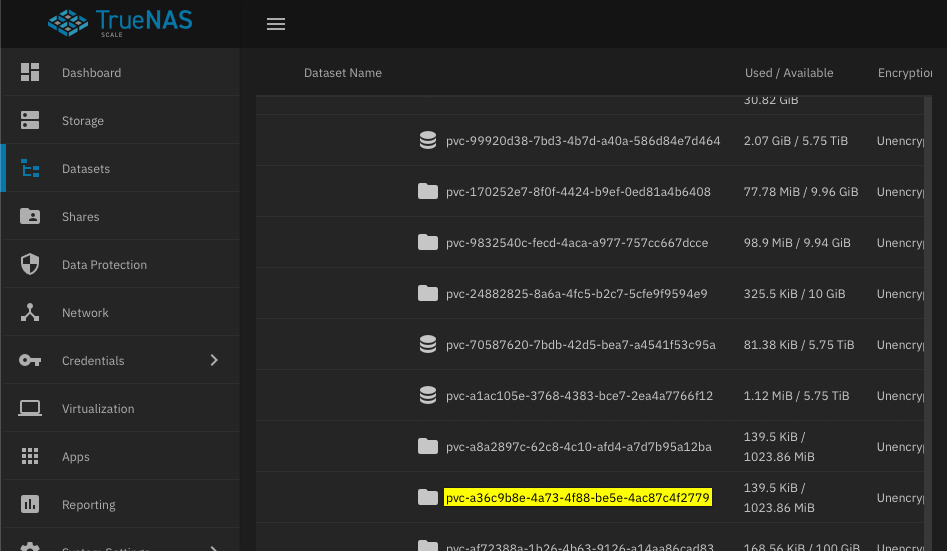

and then if we check the parent dataset on TrueNAS, we can see that the dataset for the PVC was created
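The same test can be done from the command line instead of the web console. Here's a minimal sketch: `pvc_manifest` is a hypothetical helper that prints a bare-bones PVC for a given name, storage class and size, and the names and sizes used are just placeholders.

```shell
# Print a minimal PVC manifest: pvc_manifest NAME STORAGE_CLASS SIZE
pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: $2
  resources:
    requests:
      storage: $3
EOF
}

pvc_manifest test-nfs truenas-nfs-nonroot 1Gi
```

To actually create the claims, you could pipe the output to the cluster, e.g. `pvc_manifest test-iscsi truenas-iscsi-nonroot 1Gi | oc apply -f -`, and then watch `oc get pvc` until the status shows Bound.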

Next Steps

But wait, we're not done yet! We still need to configure snapshotting, and if we use OpenShift Virtualization (which I do), we need to apply some annotations to the storage profiles in order to make OCP-V happy.

It's technically possible to deploy democratic-csi with snapshot support built in (the commented parts of values.yaml files above) but I haven't figured it out yet. However, you can also just install snapshot controller, see here https://github.com/democratic-csi/charts/tree/master/stable/snapshot-controller

    helm repo add democratic-csi https://democratic-csi.github.io/charts/
    helm repo update
    helm upgrade --install --namespace kube-system --create-namespace snapshot-controller democratic-csi/snapshot-controller
    kubectl -n kube-system logs -f -l app=snapshot-controller

Then, with snapshot-controller deployed, you can create the appropriate VolumeSnapshotClasses, e.g.:

    apiVersion: snapshot.storage.k8s.io/v1
    deletionPolicy: Retain
    driver: org.democratic-csi.iscsi
    kind: VolumeSnapshotClass
    metadata:
      annotations:
        snapshot.storage.kubernetes.io/is-default-class: "false"
      labels:
        snapshotter: org.democratic-csi.iscsi
        velero.io/csi-volumesnapshot-class: "false"
      name: truenas-iscsi-csi-snapclass
    ---
    apiVersion: snapshot.storage.k8s.io/v1
    deletionPolicy: Retain
    driver: org.democratic-csi.nfs
    kind: VolumeSnapshotClass
    metadata:
      annotations:
        snapshot.storage.kubernetes.io/is-default-class: "false"
      labels:
        snapshotter: org.democratic-csi.nfs
        velero.io/csi-volumesnapshot-class: "true"
      name: truenas-nfs-csi-snapclass

The velero.io label is used by OADP and should be applied to the corresponding VolumeSnapshotClass for your cluster's default StorageClass. If you're not using OADP, you can omit that line.

Storage Profiles

Assuming you're using OpenShift Virtualization, you will need to patch the StorageProfile CRs with the appropriate values to make OCP-V happy. When you deploy kubevirt/CNV onto an OpenShift cluster, a StorageProfile is created for each storage class on your cluster. However, unless you're using ODF, your storage profiles will basically be incomplete, lacking the information that tells kubevirt how PVCs can be created. In my case, truenas-iscsi can create RWX and RWO block PVCs and RWO filesystem PVCs, and truenas-nfs can create RWX and RWO filesystem PVCs.

The StorageProfile also instructs kubevirt how data import and cloning are handled. I wish that this was named consistently, but the feature called SmartCopy is enabled by setting cloneStrategy to csi-snapshot, and setting dataImportCronSourceFormat to snapshot means that your boot sources for VM templates will be stored as CSI snapshots. Effectively, this means that when you create a VM from a template, cloning from a snapshot is more or less instantaneous, whereas if your method is set to pvc or csi-import, it will have to create a new PVC and copy the contents, which obviously takes longer. Here's a link to the official documentation on StorageProfiles, which may explain some things in greater detail: http://kubevirt.io/monitoring/runbooks/CDIDefaultStorageClassDegraded.html

Here's a simple shell script to do it for you. StorageProfiles are named after their storage class; mine are truenas-iscsi-csi and truenas-nfs-csi, so substitute your own names (such as truenas-iscsi-nonroot and truenas-nfs-nonroot from the values files above):

    #!/bin/sh
    # patch TrueNAS iSCSI and NFS storageprofiles
    oc patch storageprofile truenas-iscsi-csi -p '{"spec":{"claimPropertySets":[{"accessModes":["ReadWriteMany"],"volumeMode":"Block"},{"accessModes":["ReadWriteOnce"],"volumeMode":"Block"},{"accessModes":["ReadWriteOnce"],"volumeMode":"Filesystem"}],"cloneStrategy":"csi-snapshot","dataImportCronSourceFormat":"snapshot"}}' --type=merge
    oc patch storageprofile truenas-nfs-csi -p '{"spec":{"claimPropertySets":[{"accessModes":["ReadWriteMany"],"volumeMode":"Filesystem"}],"cloneStrategy":"csi-snapshot","dataImportCronSourceFormat":"snapshot","provisioner":"org.democratic-csi.nfs","storageClass":"truenas-nfs-csi"}}' --type=merge

And that's pretty much it. Now I just need to go back and migrate some PVCs around.

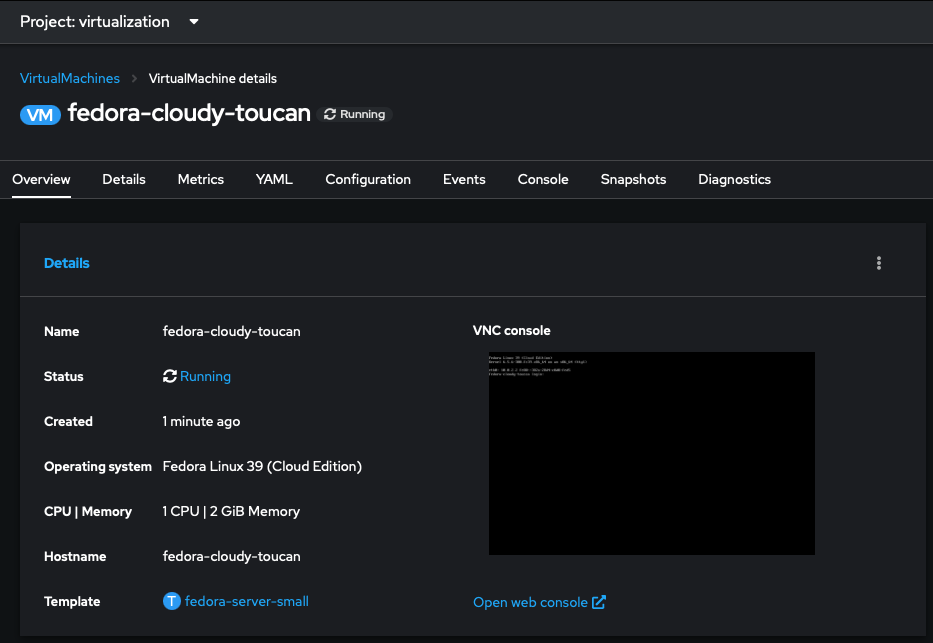

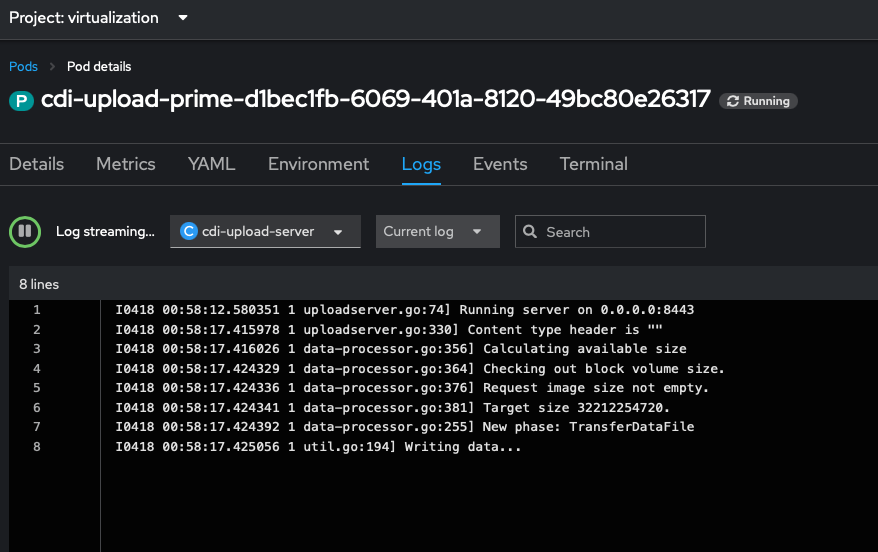

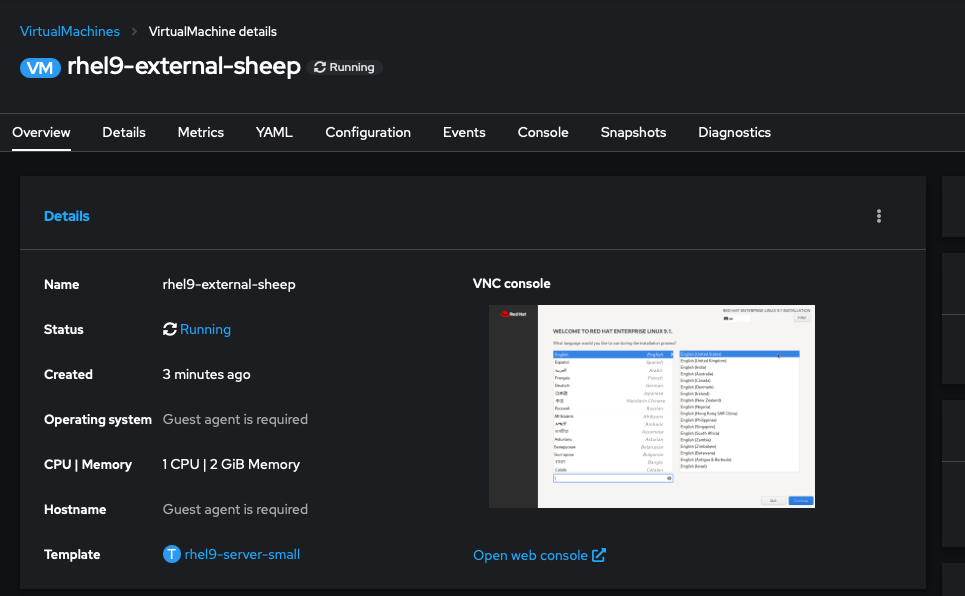

Also, since creating new VMs was kind of hit or miss for me with the API drivers, I figured it would be a good idea to try importing an ISO to spin up a VM, which (after remembering to set my storage class as the default for the cluster and patching the storage profile) worked immediately.

I also tested creating virtual machines from a template with a boot source. Once the cron job had run and the boot sources had synced, I was able to spin up a new VM from a template in under 30 seconds.