Converting from TrueNAS Core to Scale

tl;dr - don't. Just don't. 🤦♂️

This all started last week when my domain wildcard cert expired and all hell broke loose in my lab. After switching out unbound for dnsmasq, I was finally able to get cert-manager working on my Openshift/OKD clusters and never have to worry about manually rotating certs again. The only things cert-manager can't help me with are my OPNsense router and my TrueNAS Core server. It turns out that OPNsense has an ACME plugin that will auto-rotate the cert for you, which I promptly set up, leaving just the NAS. After looking into it, I discovered that TrueNAS, like OPNsense (another BSD-based infrastructure platform), also has ACME support for generating certificates. However, I was dismayed to find that TrueNAS Core only supports Route 53 as a DNS provider, while TrueNAS Scale supports Cloudflare as well. This was the final kick in the ass I needed to bite the bullet and "upgrade" from Core to Scale. This is where my problems began.

While it is true that it's possible to convert a TrueNAS Core install to TrueNAS Scale in place, I cannot stress enough that you really should not do it. In the long run, you'll save yourself a lot of time and headaches by just doing a fresh install of TrueNAS Scale. Seriously. Don't. Just don't.

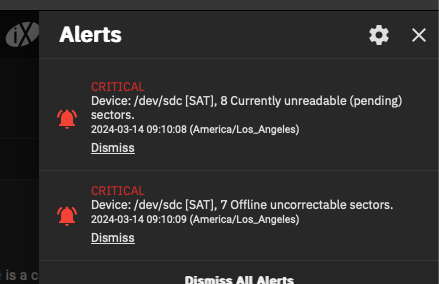

Converting from TrueNAS Core to TrueNAS Scale was not a simple migration. It was literally replacing an existing server with a completely different platform, but with the added "bonus" of dragging along all kinds of cruft and weirdness from the previous install. Moving to Scale was kind of like when I recently replaced pfSense with OPNsense on my router. Sure, the two systems largely do the exact same things, but the way the services are implemented is completely different. If I had it to do over again, I would have just wiped the boot disk and done a clean install, because it would have been faster and easier in the long run.

Coincidentally, I'll get my chance to do that soon: upon importing my ZFS pool into TrueNAS Scale, one of the disks in the pool started throwing errors about bad sectors, so I decided it was time to pull the trigger and upgrade to SSDs. I'm in the process of backing up all my data onto an external hard drive, which is going to take a couple of days at this rate. Rsync has been running since yesterday afternoon and I've backed up about 1TB out of 6TB.

ZFS

ZFS is the heart and soul of TrueNAS. Thankfully, importing your ZFS pool into a "new" host is pretty painless. However, that's about the only part of the process that is. Literally everything else, from your network configs to your services, ends up being a brand new deployment. Your previously working configs do not translate from Core to Scale, despite the fact that there is a wizard for importing your old config. For starters, I had my TrueNAS Core network configured with the two NICs in a lagg interface. TrueNAS Scale only supports link aggregation with bond interfaces, so my TrueNAS server was borked as soon as I tried importing my old config. Second, the paths to executables changed, and pretty much every service I use (iSCSI, NFS, SMB, rsync, S3, WebDAV, etc.) is implemented differently and required a completely new configuration.

ACME

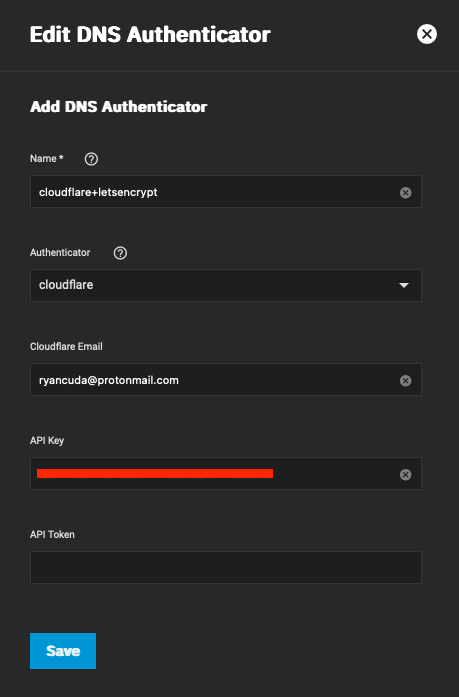

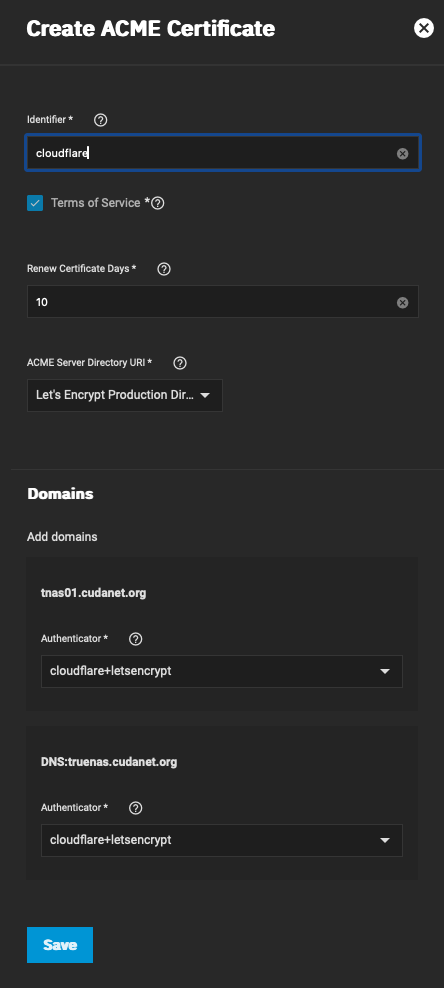

This is where the whole mess began. The steps to set up ACME certificates are pretty much the same as on every other platform. You need an API key or token for your DNS provider (in my case Cloudflare). Navigate to Credentials > Certificates and add an ACME DNS Authenticator, then configure it appropriately. You can use either an API key or an API token, but not both. Then click Save.

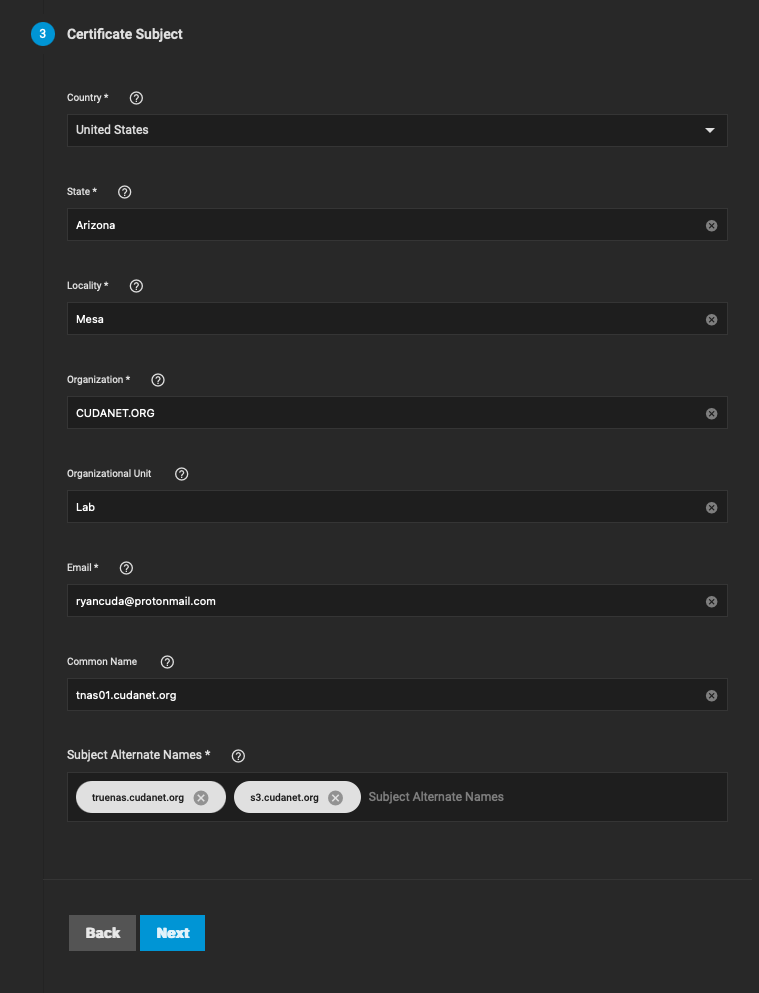

Then create a CSR. You can accept all of the default values except on page 3, where you need to populate the fields with the appropriate values. Then click Save to generate the CSR.

Then click the wrench icon on the new CSR and configure a few things. You'll probably want to use the Let's Encrypt production directory rather than staging. By default, the cert renews 10 days before it expires (i.e. 80 days into its 90-day lifetime). Make sure to set the authenticator to the one you created earlier (the one you gave your API token to). Then click Save.

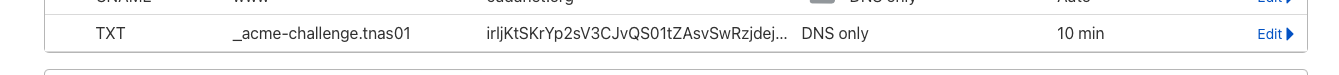

At this point, it will use the API token to authenticate to your DNS provider and create TXT records for each of the SANs on the certificate containing the challenge key it needs to validate your certificate request. This process can take a few minutes while DNS is updated.
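If a request sits there for much longer than a few minutes, you can check the TXT records yourself. Per RFC 8555, the challenge record for a name lives at `_acme-challenge.<name>`, and a wildcard SAN such as `*.cudanet.org` is validated at `_acme-challenge.cudanet.org`. The helper below is a small sketch (the function name is my own, not anything TrueNAS provides) that maps a SAN to its challenge record name so you can query it with `dig`:

```shell
#!/usr/bin/env bash
# Print the DNS-01 challenge record name for a certificate SAN.
# Per RFC 8555, a wildcard SAN such as *.example.org is validated
# at _acme-challenge.example.org (the "*." label is stripped).
acme_challenge_name() {
  local san="${1%.}"      # drop a trailing dot, if any
  san="${san#\*.}"        # strip a leading wildcard label
  printf '_acme-challenge.%s\n' "$san"
}
```

For example, `dig +short TXT "$(acme_challenge_name '*.cudanet.org')"` should return the challenge value while validation is in progress. Quote the wildcard SAN so the shell doesn't try to glob it.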

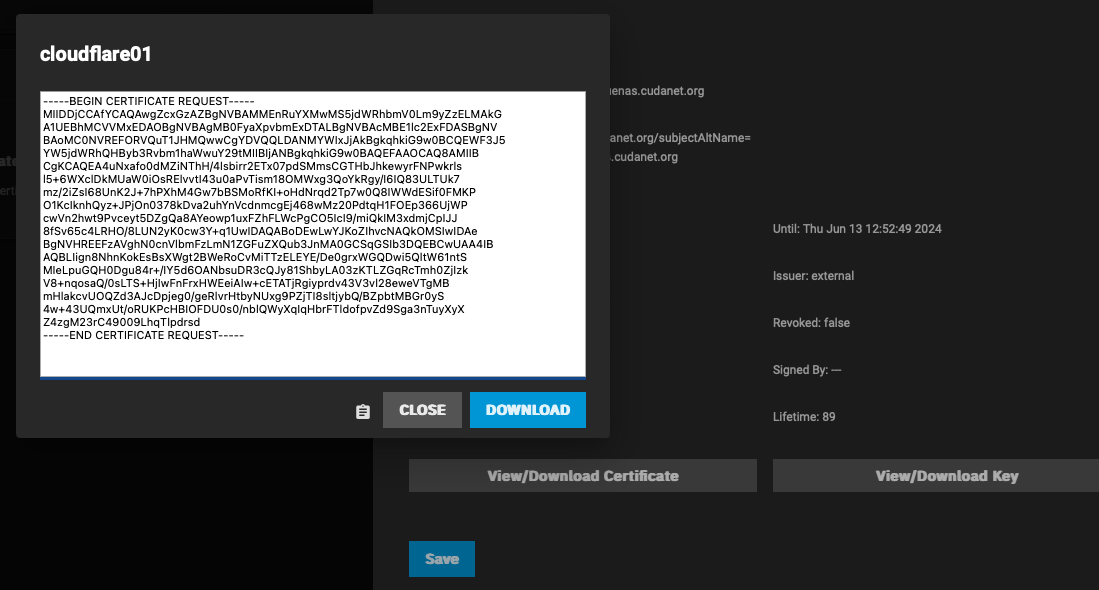

When it's done, you'll have a new certificate signed by Let's Encrypt.

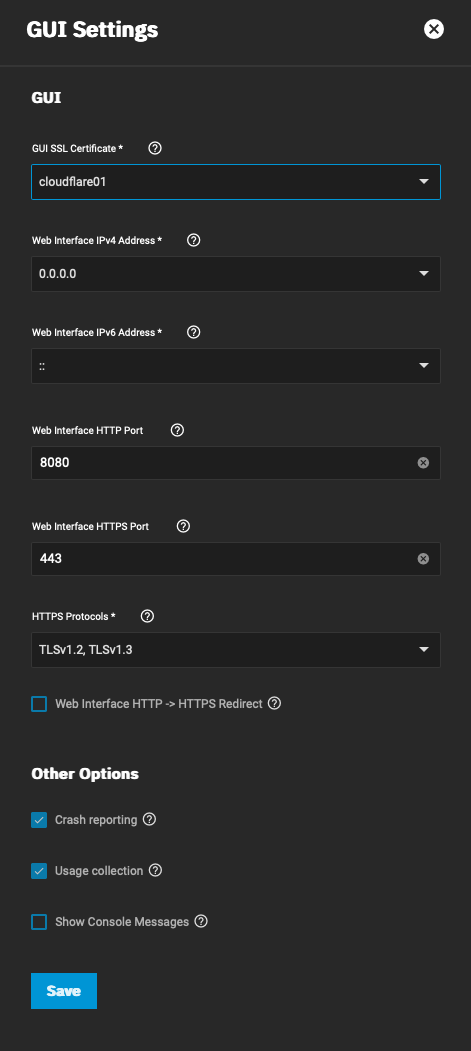

Then navigate to System Settings > General > GUI Settings and click on Edit and set the GUI SSL Certificate to the cert you just created. Click Save and you will be prompted to restart the web service.

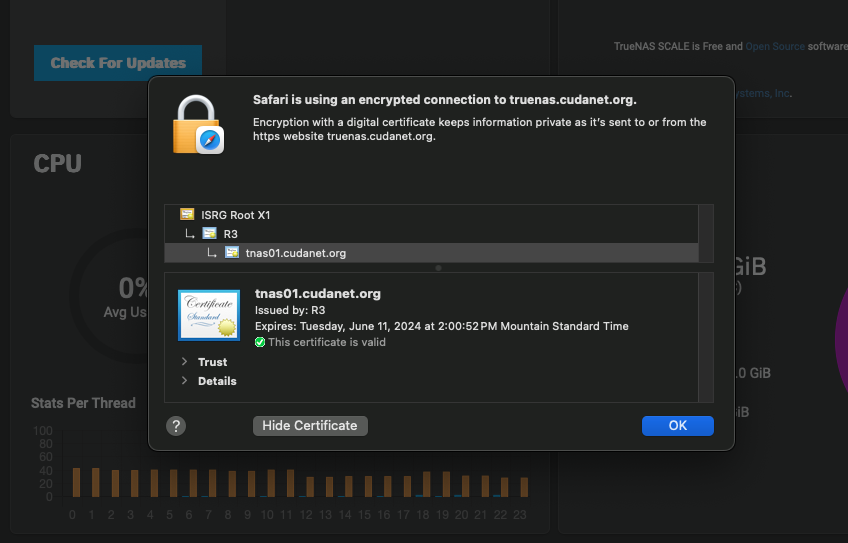

Refresh your web browser and verify that you have a valid SSL certificate.
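To check from the command line instead of the browser, and to keep an eye on renewals later, you can compare the certificate's notAfter date to the current date. This is a minimal sketch assuming GNU `date` (the BSD/macOS `date` takes different flags); `cert_days_left` is a hypothetical helper name. A freshly issued Let's Encrypt cert should report roughly 90 days, and if automatic renewal is working, the number should not drop much below the 10-day renewal window.

```shell
#!/usr/bin/env bash
# Days remaining until a certificate's notAfter date (GNU date assumed).
# $1: notAfter as printed by `openssl x509 -enddate -noout`,
#     e.g. "notAfter=Jun 13 12:00:00 2024 GMT" (prefix optional)
# $2: optional "now" date string; defaults to the current time
cert_days_left() {
  local not_after="${1#notAfter=}"
  local end now
  end=$(date -u -d "$not_after" +%s) || return 1
  if [ -n "${2:-}" ]; then
    now=$(date -u -d "$2" +%s) || return 1
  else
    now=$(date -u +%s)
  fi
  # whole days, rounded down
  echo $(( (end - now) / 86400 ))
}
```

Usage against the NAS itself: `cert_days_left "$(openssl s_client -connect tnas01.cudanet.org:443 -servername tnas01.cudanet.org </dev/null 2>/dev/null | openssl x509 -enddate -noout)"`.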

Enjoy never having to worry about expiring certs again.

NFS

NFS was particularly troublesome to get working. The NFS implementation on TrueNAS Scale does not play nice with macOS clients. In order to get NFS shares to be mountable on macOS, I had to log in to the web UI on the TrueNAS Scale server and execute the following command in the shell:

`midclt call nfs.update '{"allow_nonroot": true}'`

Yes, I had to do that from the shell in the web UI. I tried doing it via SSH and it just spit out a bunch of garbage about ZSH not being happy. After I ran that command, I was able to create an NFS share and mount it on my Mac. But even after I managed to get my NFS shares working with macOS, trying to list the exports from the terminal still throws a protocol error:

`showmount: Cannot retrieve info from host: 192.168.10.190: RPC failed:: RPC: Program/version mismatch; low version = 3, high version = 3`

I'm using NFSv4, so I'm not really sure why it's complaining about version 3. Bizarre... but whatever, at least it's working. Linux clients don't have any issues with it, which is fine since the main consumer of NFS on my TrueNAS is Openshift.
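For reference, mounting from the macOS side looks roughly like the sketch below. As I understand it, `allow_nonroot` is needed because macOS mounts from a non-privileged source port by default; passing `resvport` from the client should be the alternative if you'd rather not change the server setting. The function name, export path and mount point are placeholders, not values from my setup.

```shell
#!/usr/bin/env bash
# Mount a TrueNAS NFS export on macOS over NFSv4 (sketch).
# $1 server, $2 export path, $3 local mount point,
# $4 optional extra mount options, e.g. "resvport"
mount_nfs_macos() {
  local server="$1" export_path="$2" mountpoint="$3"
  local opts="vers=4${4:+,$4}"   # append extra options only if given
  mkdir -p "$mountpoint" || return 1
  sudo mount -t nfs -o "$opts" "${server}:${export_path}" "$mountpoint"
}
```

For example, `mount_nfs_macos 192.168.10.190 /mnt/tank/nas ~/nas` with a hypothetical export path, or add `resvport` as the fourth argument if `allow_nonroot` is off. Mounting by an explicit path also sidesteps the `showmount` error, since you don't need the export list.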

SMB

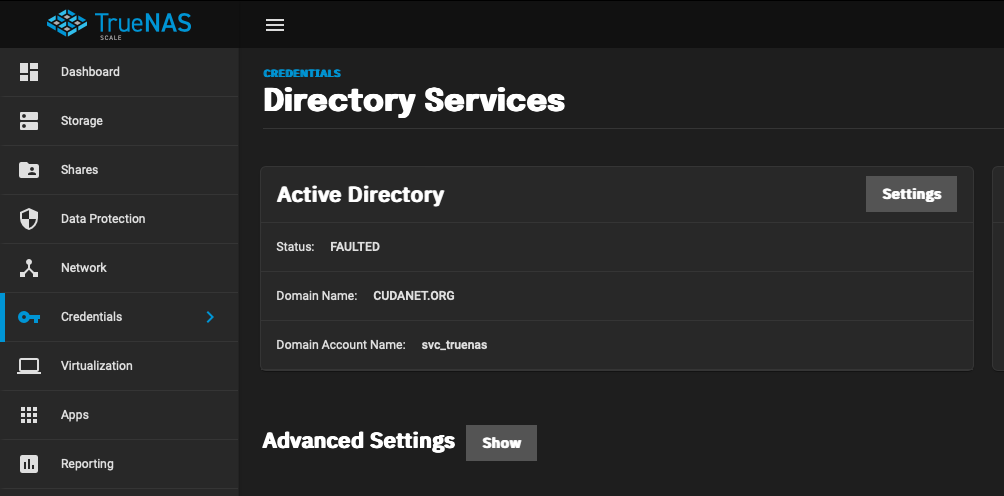

Then I tried setting up SMB by joining it to Active Directory (LDAP/Kerberos). Integrating with AD was straightforward: I just used my service account credentials and it synced up without any issues.

However, I cannot for love or money mount an SMB share on my Mac using my domain credentials, even though it was working perfectly fine on TrueNAS Core. Windows clients, on the other hand, can browse shares just fine. I'll have to dive into that another day. I rarely use SMB and prefer NFS, but I would like to get Time Machine set up, which requires an SMB share on TrueNAS Scale, whereas TrueNAS Core had an AFP implementation that worked natively with Time Machine.

Looking at the auth logs on TrueNAS, I see my attempts to mount the SMB share with my domain credentials failing with the status NT_STATUS_NO_LOGON_SERVERS, which makes absolutely no sense to me because LDAP and Kerberos work just fine:

```json
{
  "timestamp": "2024-03-15T11:39:56.329470-0700",
  "type": "Authentication",
  "Authentication": {
    "version": {
      "major": 1,
      "minor": 2
    },
    "eventId": 4625,
    "logonId": "0",
    "logonType": 3,
    "status": "NT_STATUS_NO_LOGON_SERVERS",
    "localAddress": "ipv4:192.168.1.190:445",
    "remoteAddress": "ipv4:192.168.1.244:54852",
    "serviceDescription": "SMB2",
    "authDescription": null,
    "clientDomain": "CUDANET",
    "clientAccount": "rcuda",
    "workstation": "IMAC-PRO",
    "becameAccount": null,
    "becameDomain": null,
    "becameSid": null,
    "mappedAccount": "rcuda",
    "mappedDomain": "CUDANET",
    "netlogonComputer": null,
    "netlogonTrustAccount": null,
    "netlogonNegotiateFlags": "0x00000000",
    "netlogonSecureChannelType": 0,
    "netlogonTrustAccountSid": null,
    "passwordType": "NTLMv2",
    "duration": 998802
  },
  "timestamp_tval": {
    "tv_sec": 1710527996,
    "tv_usec": 329470
  }
}
```

However, enabling guest access allows me to mount the share. Not very secure, but I'm not that concerned about it; I don't store anything sensitive on my NAS. It's mostly just movies and ISO images.
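When failures pile up, tallying them by status and account makes it easier to see whether every domain logon fails the same way. This is a minimal sketch using grep and sed, assuming each entry sits on a single line in the audit log file (the entry above is shown pretty-printed) and uses the same field names; `smb_auth_failures` is a hypothetical helper name, and the log's location is not something this post establishes, so pass the path yourself.

```shell
#!/usr/bin/env bash
# Count failed SMB authentications by status and client account.
# Reads the given log files, or stdin if none are given.
# Assumes one JSON object per line with "status" before "clientAccount".
smb_auth_failures() {
  grep -h '"type": *"Authentication"' "$@" \
    | grep -v '"status": *"NT_STATUS_OK"' \
    | sed -nE 's/.*"status": *"([^"]+)".*"clientAccount": *"([^"]*)".*/\1 \2/p' \
    | sort | uniq -c | sort -rn
}
```

Each output line is a count followed by the NT status and the account, most frequent first.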

S3

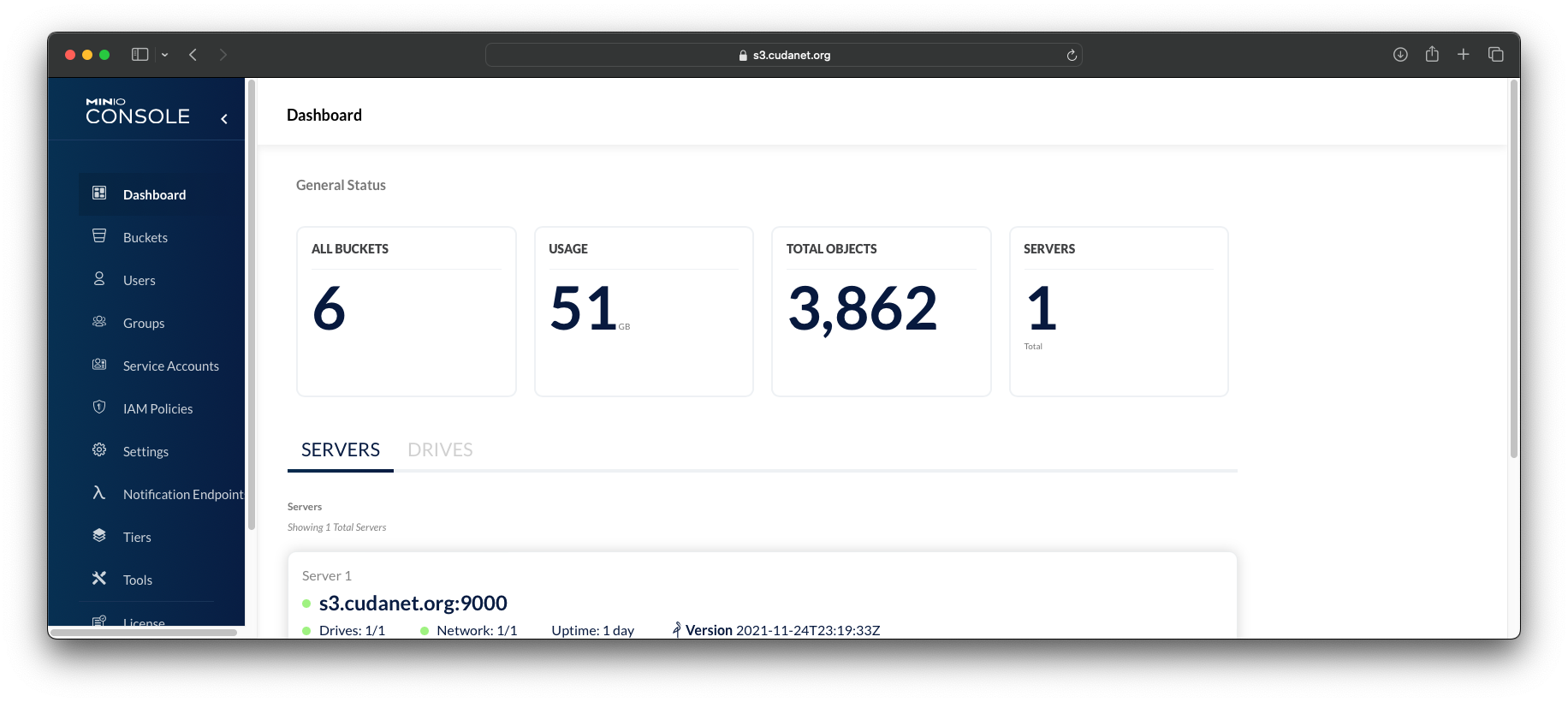

S3 was actually pretty straightforward: just enable the MinIO service, point it at the S3 dataset, plug in my access and secret keys, and it came right back up. And after converting from Core to Scale, I was able to enable SSL for the web UI. There is a bug in the MinIO plugin on TrueNAS Core that prevents you from accessing the MinIO web console with SSL enabled. The service itself still works fine and you can use it for bucket storage with SSL enabled, but the web UI is unavailable.

iSCSI

iSCSI was pretty easy to set up as well. The process is exactly the same as it was on TrueNAS Core, so that was a relief. I don't use CHAP for authentication, so I just needed to set up an initiator group and a portal listening on the appropriate interfaces. The only thing in my lab that currently consumes iSCSI is Openshift via Democratic CSI. The only workloads I have that need block storage are virtual machines, and right now 100% of those are on Ceph, so I had nothing to import.
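Before pointing anything at the portal, it's worth confirming from a client that the portal answers discovery requests. A minimal sketch, assuming open-iscsi's `iscsiadm` is available where you run it (on Openshift nodes, `oc debug node/<node>` followed by `chroot /host` should get you a shell that has it); `iscsi_discover` is a hypothetical helper name, and the default portal is the `targetPortal` from the iSCSI values file later in this post.

```shell
#!/usr/bin/env bash
# Ask an iSCSI portal which targets it advertises (SendTargets discovery).
# Defaults to the targetPortal used in the democratic-csi values file.
iscsi_discover() {
  local portal="${1:-192.168.10.190:3260}"
  iscsiadm -m discovery -t sendtargets -p "$portal"
}
```

A timeout usually points at the portal's listen address. An empty result isn't necessarily a problem, since democratic-csi creates targets per volume, so you may not see any until the first PVC exists.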

WebDAV

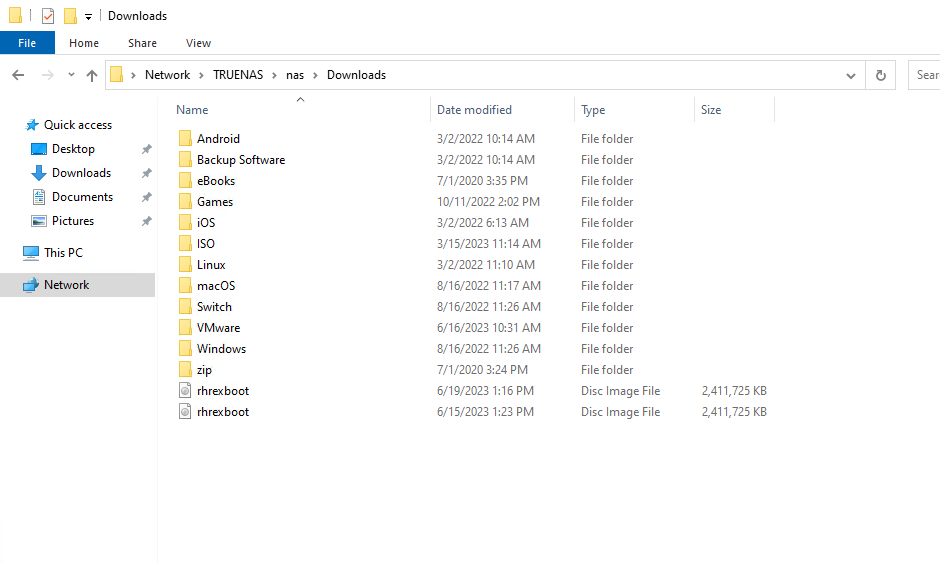

Several years ago, when I ripped vSphere out of my lab and went all in on Openshift Virtualization, it was a lot less mature than it is today. There really wasn't a simple way to upload an ISO to Openshift to use for, e.g., installing Windows Server. The path of least resistance back then was to create a PVC from a URL, which is primarily what I use WebDAV for. These days you can upload an ISO directly from your workstation, but since my Openshift clusters and TrueNAS are on the same physical network, transfer speeds are a lot better than uploading an ISO over wifi from my laptop. So I still use WebDAV as the primary way to get ISO images into Openshift for operating systems that do not provide their own boot images.
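For reference, creating a PVC from a URL is typically done with a CDI DataVolume that has an `http` source. The wrapper below is my own sketch, not the author's setup: it applies such a DataVolume with `oc`, and the name, URL, size and namespace are placeholders you pass in. It assumes the share can be read without credentials; if yours requires authentication, CDI's `http` source also accepts a `secretRef`, which this sketch leaves out.

```shell
#!/usr/bin/env bash
# Create a CDI DataVolume that imports a file (e.g. an ISO) over HTTP(S).
# $1 DataVolume name, $2 source URL, $3 size (e.g. 10Gi), $4 namespace
import_iso() {
  local name="$1" url="$2" size="$3" namespace="${4:-default}"
  oc apply -n "$namespace" -f - <<EOF
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: ${name}
spec:
  source:
    http:
      url: "${url}"
  pvc:
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: ${size}
EOF
}
```

The size needs to be at least as large as the ISO; CDI handles the download and writes it into the PVC.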

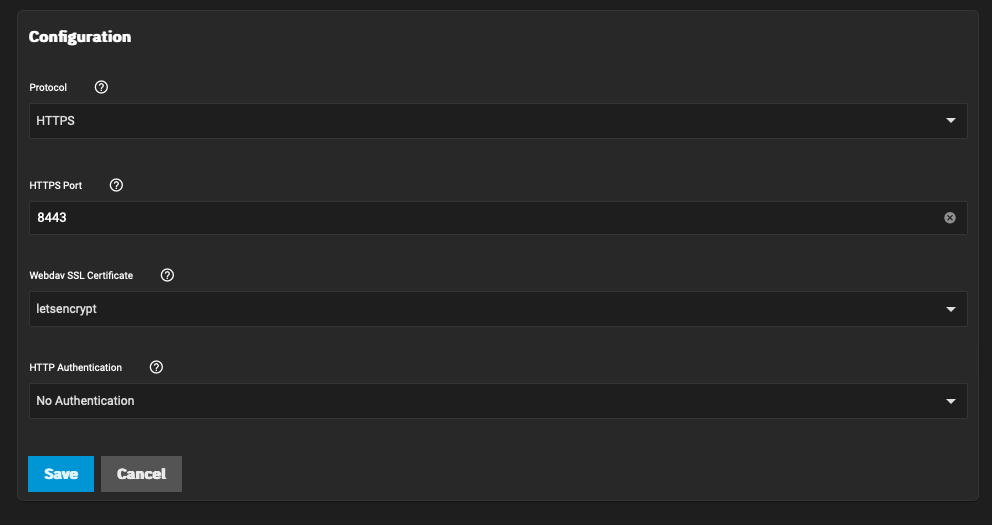

WebDAV can be configured with or without SSL. I prefer to use it with a properly signed and trusted cert, as it saves you the headache of running into SSL errors.

Navigate to System Settings > Services > WebDAV and configure it appropriately. Use the SSL cert you generated earlier with ACME. Click save and enable the WebDAV service.

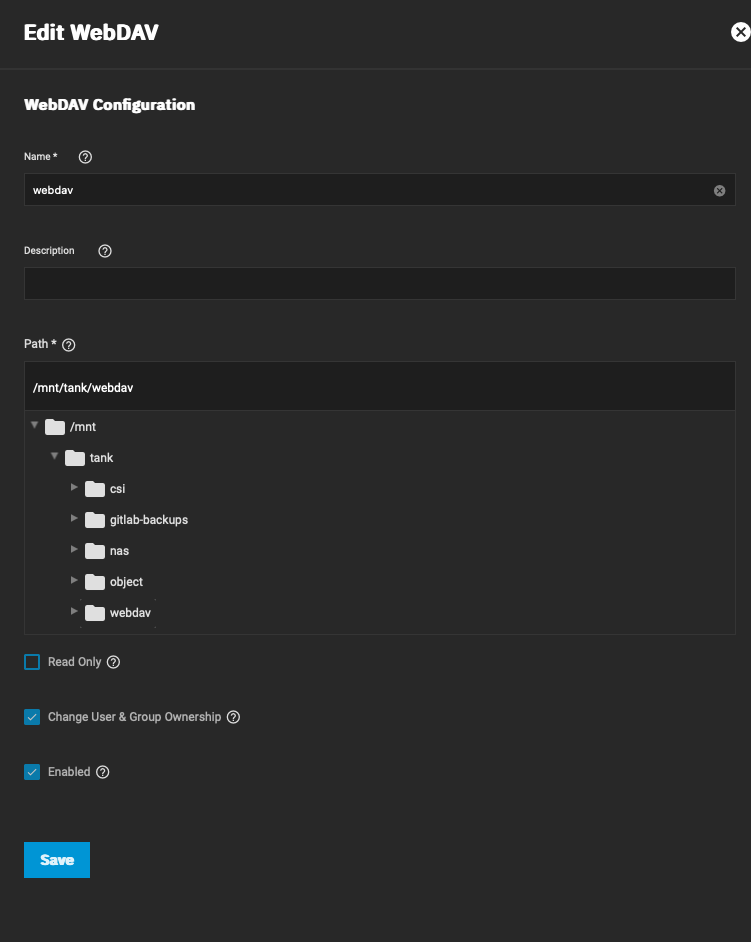

Then navigate to Shares > WebDAV and configure your share accordingly. Worth noting, WebDAV needs to be on its own dataset that is not shared with any other services, which does have a downside: you can't symlink files across datasets. I keep most of my ISO files in my main nas dataset, which is shared with NFS and SMB, so anything I put into my WebDAV share is a duplicate of data that exists elsewhere on my NAS. Not a huge deal, just something to be aware of.

I stand corrected! The symlink restriction was a limitation on TrueNAS Core, but isn't an issue on TrueNAS Scale. Either that, or upgrading my version of ZFS may have allowed symlinks across datasets, but it was definitely not possible when I first set up my TrueNAS server.

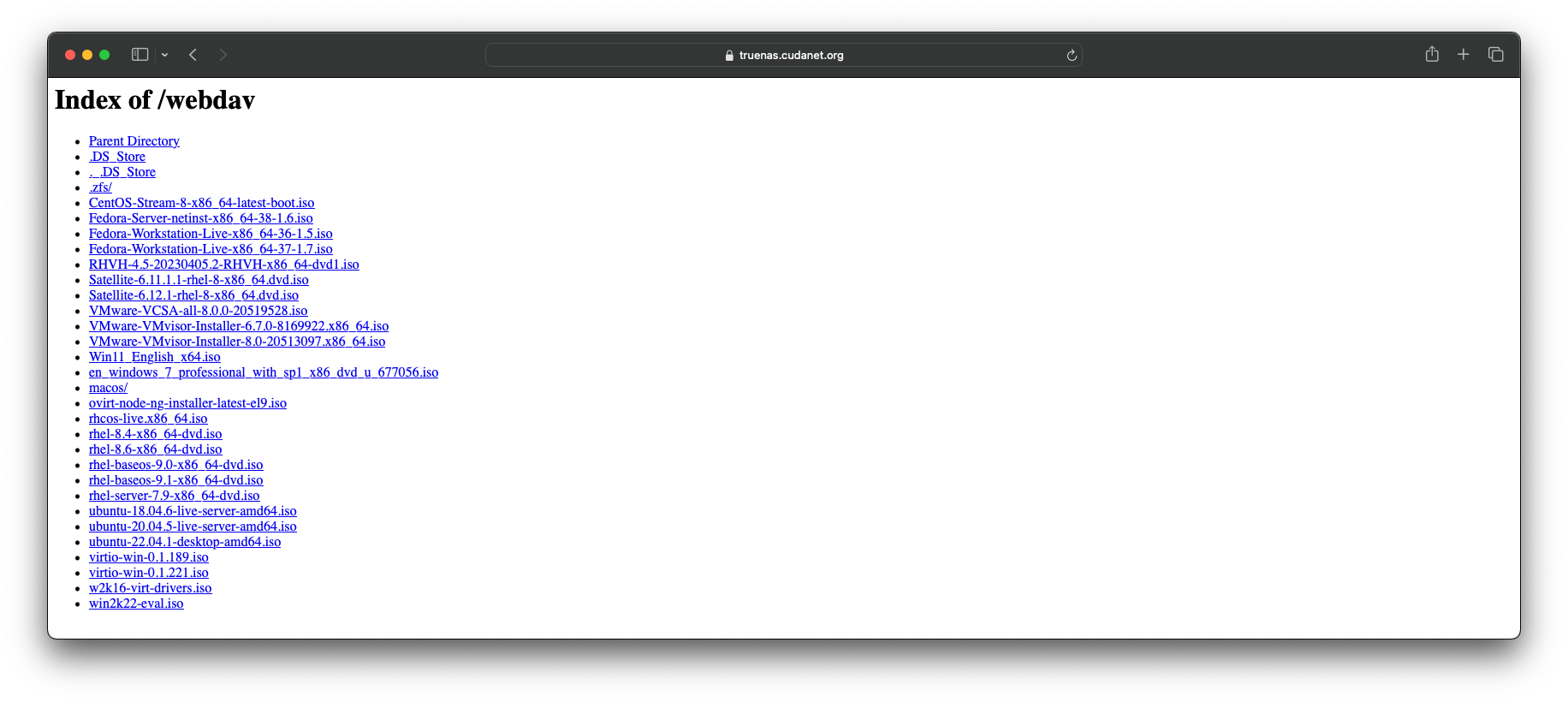

Verify that your WebDAV share is working.
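Besides the browser, one way to check the share from a terminal is a WebDAV `PROPFIND` request with curl. A minimal sketch, assuming TrueNAS's default WebDAV HTTPS port (8081) and its fixed `webdav` login name; both are assumptions to check against your service settings, the share name is a placeholder, and `webdav_list` is a hypothetical helper name. curl prompts for the password.

```shell
#!/usr/bin/env bash
# List the top level of a WebDAV share with a PROPFIND request.
# $1 host, $2 share name, $3 optional HTTPS port (assumed default 8081)
webdav_list() {
  local host="$1" share="$2" port="${3:-8081}"
  curl -fsS -u webdav \
    -X PROPFIND -H "Depth: 1" \
    "https://${host}:${port}/${share}/"
}
```

A working share should answer with a 207 Multi-Status XML document listing its contents; a TLS error here usually means the service isn't using the ACME cert yet.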

Democratic CSI

Democratic CSI is both a collection of CSI drivers, primarily targeted at ZFS, that provide persistent storage to Kubernetes clusters in the form of NFS, iSCSI and SMB (plus several other experimental protocols), and a framework for developing CSI drivers. While TrueNAS has no "official" CSI driver like you would find for an enterprise storage array (Trident for NetApp, for example), iXsystems, the company that produces TrueNAS, is the corporate sponsor behind the Democratic CSI project, and the lion's share of development is obviously directed at TrueNAS.

Like just about everything else with the migration from Core to Scale, your previously working Democratic CSI drivers are not going to work anymore. After some trial and error, I was finally able to get the freenas-api-iscsi and freenas-api-nfs drivers working with Openshift. The biggest thing that changed with democratic-csi from TrueNAS Core to Scale is that you have to use the API drivers and can no longer use SSH keys for authentication. Everything is done via the API, which I was hesitant about because the democratic-csi GitHub page lists the API drivers as "experimental".

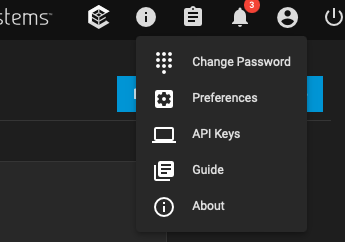

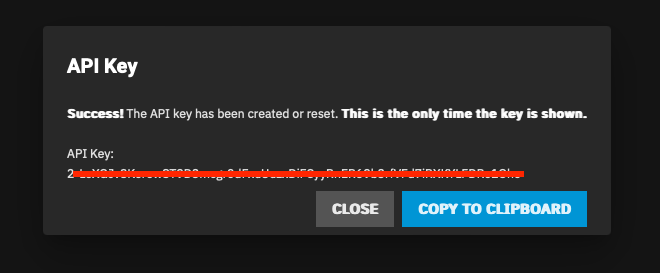

First, you'll need to generate an API key. From the web UI, click the user icon at the top right and select API Keys from the drop-down menu.

Then create a new API key. Make sure to copy the key, because it will never be shown again.
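Before pasting the key into the values files, a quick call against the TrueNAS REST API confirms it works. This is a minimal sketch assuming the v2.0 REST endpoint and Bearer-token authentication that TrueNAS uses for API keys; `truenas_api_get` is a hypothetical helper name, and `system/info` is just a cheap read-only endpoint to hit.

```shell
#!/usr/bin/env bash
# GET a TrueNAS REST v2.0 endpoint using an API key as a Bearer token.
# $1 host, $2 API key, $3 endpoint path (e.g. system/info)
truenas_api_get() {
  local host="$1" key="$2" endpoint="$3"
  # -f: exit non-zero on HTTP errors such as 401 for a bad key
  curl -fsS \
    -H "Authorization: Bearer ${key}" \
    -H "Accept: application/json" \
    "https://${host}/api/v2.0/${endpoint}"
}
```

`truenas_api_get tnas01.cudanet.org "$TRUENAS_API_KEY" system/info` should print a JSON object that includes the version; a rejected key makes curl exit non-zero instead.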

Typically, you install democratic-csi using helm. Personally, I use helm to spit out the yaml manifests, then I mirror all the images locally on my GitLab server and point the manifests at my registry. I'm not sure if they're still hosting the container images on Docker Hub, but I have run into the stupid pull rate limit a few times while deploying democratic-csi, and then I was dead in the water until the next day. Very annoying.

These are the values.yaml files that worked for me. There are still some things I would like to test out; for instance, you can create additional users and delegate sudo privileges. I'm really not a fan of running things as root if I don't have to, so setting up a service account specifically for democratic-csi is on my to-do list. I also still need to figure out what (if anything) changed with snapshots. Just perusing the example files, it looks like the snapshot syntax is a little different between the old SSH-based drivers and the new API drivers.

iSCSI:

```yaml
csiDriver:
  name: "org.democratic-csi.iscsi"

storageClasses:
- name: truenas-iscsi-csi
  defaultClass: false
  reclaimPolicy: Delete
  volumeBindingMode: Immediate
  allowVolumeExpansion: true
  parameters:
    fsType: xfs
  mountOptions: []
  secrets:

volumeSnapshotClasses: []
#- name: freenas-iscsi-csi
#  parameters:
#  # if true, snapshots will be created with zfs send/receive
#  # detachedSnapshots: "false"
#  secrets:
#    snapshotter-secret:

extraCaCerts: []

driver:
  config:
    driver: freenas-api-iscsi
    instance_id:
    httpConnection:
      protocol: https
      host: tnas01.cudanet.org
      port: 443
      apiKey: YOUR-API-KEY
      username: root
      allowInsecure: true
    zfs:
      cli:
        paths:
          zfs: /usr/sbin/zfs
          zpool: /usr/sbin/zpool
          sudo: /usr/bin/sudo
          chroot: /usr/sbin/chroot
      datasetParentName: tank/csi/okd
      detachedSnapshotsDatasetParentName: tank/csi/okd-snaps
      zvolCompression: "lz4"
      zvolDedup: "on"
      zvolEnableReservation: false
      zvolBlocksize: 16K
    iscsi:
      targetPortal: "192.168.10.190:3260"
      targetPortals: []
      interface:
      namePrefix: csi-
      nameSuffix: "-okd"
      targetGroups:
        - targetGroupPortalGroup: 1
          targetGroupInitiatorGroup: 1
          targetGroupAuthType: None
          targetGroupAuthGroup:
      extentInsecureTpc: true
      extentXenCompat: false
      extentDisablePhysicalBlocksize: true
      extentBlocksize: 512
      extentRpm: "SSD"
      extentAvailThreshold: 0
```

NFS:

```yaml
csiDriver:
  name: "org.democratic-csi.nfs"

storageClasses:
- name: truenas-nfs-csi
  defaultClass: false
  reclaimPolicy: Delete
  volumeBindingMode: Immediate
  allowVolumeExpansion: true
  parameters:
    fsType: nfs
  mountOptions:
  - noatime
  - nfsvers=3
  secrets:
    provisioner-secret:
    controller-publish-secret:
    node-stage-secret:
    node-publish-secret:
    controller-expand-secret:

volumeSnapshotClasses: []

driver:
  config:
    driver: freenas-api-nfs
    instance_id:
    httpConnection:
      protocol: https
      host: tnas01.cudanet.org
      port: 443
      apiKey: YOUR-API-KEY
      username: root
      allowInsecure: true
    sshConnection:
      host: 192.168.10.190
      port: 22
      username: root
    zfs:
      datasetParentName: tank/csi/okd
      detachedSnapshotsDatasetParentName: tank/csi/okd-snaps
      datasetEnableQuotas: true
      datasetEnableReservation: false
      datasetPermissionsMode: "0777"
      datasetPermissionsUser: 0
      datasetPermissionsGroup: 0
    nfs:
      shareHost: 192.168.10.190
      shareAlldirs: false
      shareAllowedHosts: []
      shareAllowedNetworks: []
      shareMaprootUser: root
      shareMaprootGroup: root
      shareMapallUser: ""
      shareMapallGroup: ""
```

If you want to go that route, here are the commands I use to spit out the yaml files:

```bash
# iSCSI
helm template \
  --create-namespace \
  --values values.yaml \
  --namespace democratic-csi \
  --set node.kubeletHostPath="/var/lib/kubelet" \
  --set node.rbac.openshift.privileged=true \
  --set node.driver.localtimeHostPath=false \
  --set controller.rbac.openshift.privileged=true \
  zfs-iscsi democratic-csi/democratic-csi \
  --output-dir helm

# NFS
helm template \
  --create-namespace \
  --values values.yaml \
  --namespace democratic-csi-nfs \
  --set node.kubeletHostPath="/var/lib/kubelet" \
  --set node.rbac.openshift.privileged=true \
  --set node.driver.localtimeHostPath=false \
  --set controller.rbac.openshift.privileged=true \
  zfs-nfs democratic-csi/democratic-csi \
  --output-dir helm
```

Then the process to mirror the images locally is really straightforward. Basically, you pull all the images, push them to your local registry and then change the image URLs in the yaml files. It can all be done in a bash script, like this:

```bash
#!/bin/bash
# quick and dirty script to mirror all the images in a helm chart to a local registry and update the manifests to use the local image
# run it from the directory containing the rendered manifests; uses GNU sed (macOS sed needs -i '')
set -euo pipefail

internal=registry.cudanet.org/cudanet/democratic-csi/
registry=${internal%%/*}   # just the registry host, for docker login

# registry credentials (not $USER, which the shell already uses for your login name)
REG_USER=dockeruser
REG_PASSWORD=dockerpassword

# every unique image referenced in the manifests, with surrounding quotes stripped
IMAGES=$(grep -rhoE --include='*.yaml' 'image: *"?[^" ]+' . | sed -E 's/image: *"?//' | sort -u)
# every manifest that references an image
YAML=$(grep -rl --include='*.yaml' 'image:' .)

# make sure you are authenticated to your internal registry first
echo "$REG_PASSWORD" | docker login -u "$REG_USER" --password-stdin "$registry"

for i in $IMAGES; do
  # keep only the last path component (name:tag)
  image=$(echo "$i" | awk -F "/" '{ print $NF }')
  # pull the image
  docker pull "$i"
  # tag the image locally
  docker image tag "$i" "$internal$image"
  # push the image to your local registry
  docker push "$internal$image"
done

# point the yaml files at your internal registry
for y in $YAML; do
  for i in $IMAGES; do
    image=$(echo "$i" | awk -F "/" '{ print $NF }')
    sed -i "s|$i|$internal$image|g" "$y"
  done
done
```

There may be a quicker way to do this, but as a quick and dirty way to redirect the images to your internal registry, it gets the job done.

Then don't forget to add the privileged SCC to the default service account for the democratic-csi namespace.

```bash
oc adm policy add-scc-to-user privileged -z default -n democratic-csi
```
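The helm template commands above install the iSCSI and NFS drivers into two different namespaces, `democratic-csi` and `democratic-csi-nfs`, so presumably both need the same grant. A small loop covers both; `grant_privileged_scc` is a hypothetical helper name, and the `oc` command inside it is the same one used above.

```shell
#!/usr/bin/env bash
# Grant the privileged SCC to the default service account in each
# namespace given as an argument; stops at the first failure.
grant_privileged_scc() {
  local ns
  for ns in "$@"; do
    oc adm policy add-scc-to-user privileged -z default -n "$ns" || return 1
  done
}
```

Usage: `grant_privileged_scc democratic-csi democratic-csi-nfs`.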

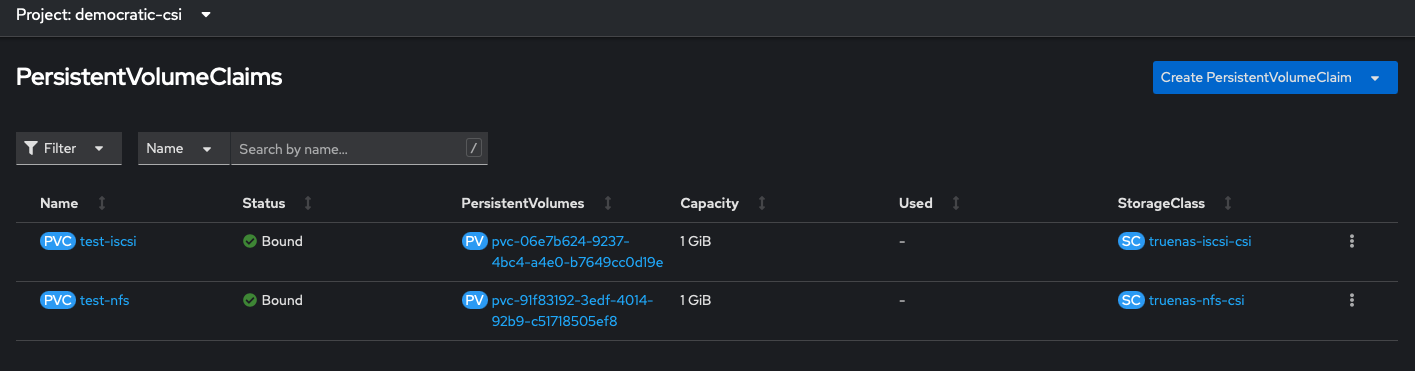

Then make sure to test that you can create PVCs.
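A throwaway claim against each storage class is enough for this test. The sketch below (function and PVC names are my own) applies a 1Gi PVC with `oc` and then shows it. Because both storage classes in the values files use `volumeBindingMode: Immediate`, the claim should go to `Bound` without a pod attached, though it may show `Pending` for a few seconds first.

```shell
#!/usr/bin/env bash
# Create a small test PVC against a storage class and show its status.
# $1 storage class name, $2 optional namespace (default: "default")
test_pvc() {
  local sc="$1" namespace="${2:-default}" name="csi-test-${1}"
  oc apply -n "$namespace" -f - <<EOF || return 1
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: ${sc}
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
  oc get pvc "$name" -n "$namespace"
}
```

Run `test_pvc truenas-iscsi-csi` and `test_pvc truenas-nfs-csi`, then clean up with `oc delete pvc csi-test-truenas-iscsi-csi csi-test-truenas-nfs-csi`. With `reclaimPolicy: Delete`, the backing zvol and dataset under `tank/csi/okd` should be removed as well.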

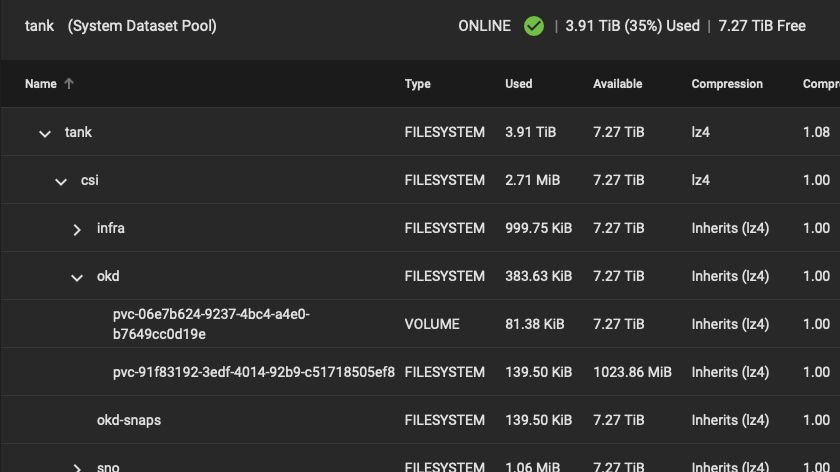

If we look at our dataset in TrueNAS, we should see that those volumes were provisioned.

Wrapping up

All in all, migrating from TrueNAS Core to Scale in-place was an absolute failure, and having to set literally everything back up from scratch was annoying. But I guess I can be happy that I don't have to think about expiring certs anymore. So... worth it? Maybe. We'll see how things go after I upgrade to SSDs.