A Practical Guide to Migration from Gitlab to Gitea

First, I just want to say thank you to Gitlab for the many years it has served me quite well. I've pretty much always preferred to run a self-hosted git server, whether that was for intellectual property reasons at my job, my general distrust of Microsoft and the (6 years on, apparently unfounded) fear that they would be poor stewards of Github and pull a Docker, or simply because I like full control over my platforms. Several years ago I did a bake-off between Bitbucket and Gitlab at my job, and while Bitbucket won out at work, I ended up liking Gitlab CE more for personal use. Back then, Docker was still newish and kubernetes was just on the horizon, so deploying Gitlab on a VM was standard.

Fast forward to today: I try to avoid running anything in a VM and prefer to run everything I can on kubernetes (Openshift). Technically, you can run Gitlab on Openshift. We have an officially certified operator for it, and I've used it in the past. However, I've found the operator deployment to be a bit limiting. Some things you can tune, others you can't. Getting LDAP authentication figured out took a lot of trial and error, reverse engineering examples from the Helm chart deployment targeted at xKS. Ultimately, I found the Gitlab operator too problematic to be reliable enough for production use. I'd have issues with pods randomly crashing after losing their connection to the pgsql database or just running out of memory. The biggest problem, though, has been its terrible track record of compatibility with Openshift: it seems as though every other Openshift update breaks the Gitlab operator.

I'd been looking for a migration path off the Gitlab Omnibus install on a VM that I've been running for a couple of years now. Hosting Gitlab on Openshift just wasn't in the cards, so I started looking into alternatives, which led me to Gitea.

Personally, I only use a handful of Gitlab's features, so I never needed the additional bells and whistles it comes with, nor the added complexity and overhead. I really just use the git server, the container registry and LDAP for authentication, and after kicking the tires on Gitea I found that it checks all the boxes for me.

The first thing I had to do was figure out how to deploy Gitea on Openshift. Compared to Gitlab, Gitea has far fewer components and dependencies. It really just consists of Gitea itself and a database backend, which can be just about any SQL database you want, but I stuck with MariaDB because I'm familiar with it and it's easy to use. A few things are worth noting: if you run Gitea in a container, you really should use the 'non-root' variant. Also, while it's technically possible to have Gitea provide SSL itself, doing so in a container requires a lot of manual configuration after deployment. I found it easier to use nginx as a reverse proxy to handle SSL, which has the added benefit of exposing HTTPS and SSH on their normal ports, 443 and 22 respectively, and seamlessly forwarding them to Gitea's own ports (3000 and 2222 in my setup).

On Openshift, you'll also want to use the Cert Manager operator and MetalLB (assuming you're deploying on-prem).

In summary, my Gitea deployment on Openshift consists of 3 pieces - a gitea pod, a mariadb pod and an nginx pod. You can interact with your Gitea instance by hitting the ingress, which is bound to the nginx service. The nginx pod is configured to reverse-proxy SSH and HTTPS back to the gitea pod. Its nginx.conf file is mounted as a configmap, so it does not require any persistent storage and any changes to it can be managed with a simple git push and a CI/CD pipeline run (I'd highly recommend trying out Tekton!). You can find all of the files you'll need here for reference: https://gitea.cudanet.org/cudanet/gitea. Just make sure to change the values in things like the SSL cert (cert.yaml), the nginx.conf and the ingress route to reflect your environment.

You can easily deploy this app on your cluster like this

oc new-project gitea

for y in *.yaml;

do oc apply -f "$y";

done

It'll take a few minutes for things like the Let's Encrypt cert to get requested and the TLS secret created. Once all the pods come online, your Gitea instance will be ready for initial setup. Before that, let's dive into the nginx reverse proxy for a bit, as that's where all the 'magic' really happens. Here's my complete nginx.conf:

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

stream {

upstream gitea-ssh {

server gitea:2222;

}

server {

listen 22;

proxy_pass gitea-ssh;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

# Load modular configuration files from the /etc/nginx/conf.d directory.

# See http://nginx.org/en/docs/ngx_core_module.html#include

# for more information.

include /etc/nginx/conf.d/*.conf;

# set max upload size to 1000M for container registry/git commits;

client_max_body_size 1000M;

server {

listen 80 default_server;

listen [::]:80 default_server;

server_name _;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

location / {

}

}

server {

listen 443 ssl;

ssl_certificate /etc/nginx/fullchain.pem;

ssl_certificate_key /etc/nginx/privkey.pem;

server_name gitea.cudanet.org;

location / {

proxy_set_header Host $host:$server_port;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto "https";

proxy_pass http://gitea:3000;

}

}

}

First, let's take a look at how we set up the reverse proxy for SSH. SSH is not an HTTP protocol; it is its own protocol running directly over TCP, so an ordinary http server block can't proxy it. Nginx can proxy arbitrary TCP and UDP traffic through its stream module, which treats the connection as a load-balanced stream. That's effectively what I did: I set up a load balancer frontend on port 22 that round-robins across a pool of one service on port 2222.

stream {

upstream gitea-ssh {

server gitea:2222;

}

server {

listen 22;

proxy_pass gitea-ssh;

}

}

Now for the Gitea web service. A short primer on how kubernetes networking works: each pod gets an IP address on the cluster's pod network (10.128.0.0/14 by default on Openshift), and each service gets a stable virtual IP on the service network (172.30.0.0/16 by default). Both ranges are tunable at install time. Services resolve to the FQDN servicename.namespace.svc.cluster.local, or you can just use the short name servicename from within the same namespace, because pods have their own namespace's domain in their DNS search path. So when we set up our reverse proxy from our nginx pod, we can just specify http://gitea:3000, like this:

server {

listen 443 ssl;

ssl_certificate /etc/nginx/fullchain.pem;

ssl_certificate_key /etc/nginx/privkey.pem;

server_name gitea.cudanet.org;

location / {

proxy_set_header Host $host:$server_port;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto "https";

proxy_pass http://gitea:3000;

}

}

Nginx is handling SSL, so if we so choose we don't even need to create an ingress or route, as long as we create the appropriate DNS entries pointing to the nginx LoadBalancer service's externalIP. However, if you want your gitea instance to be routable outside your network (assuming you have the appropriate port-forwards and external DNS entries configured), you'll need to create an ingress. Thanks to cert-manager, the nginx pod and the ingress will use the exact same TLS secret containing your certificate and private key.

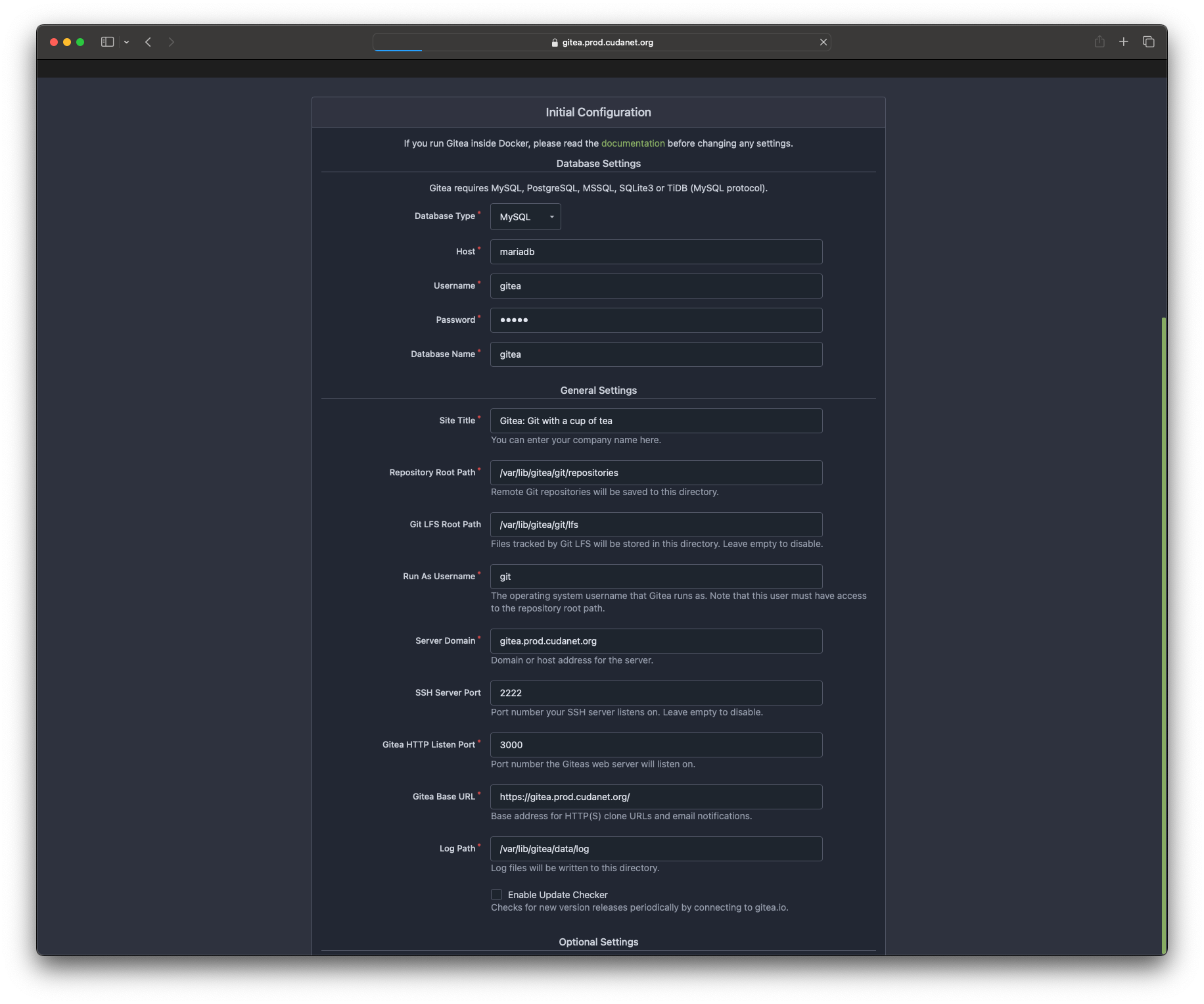

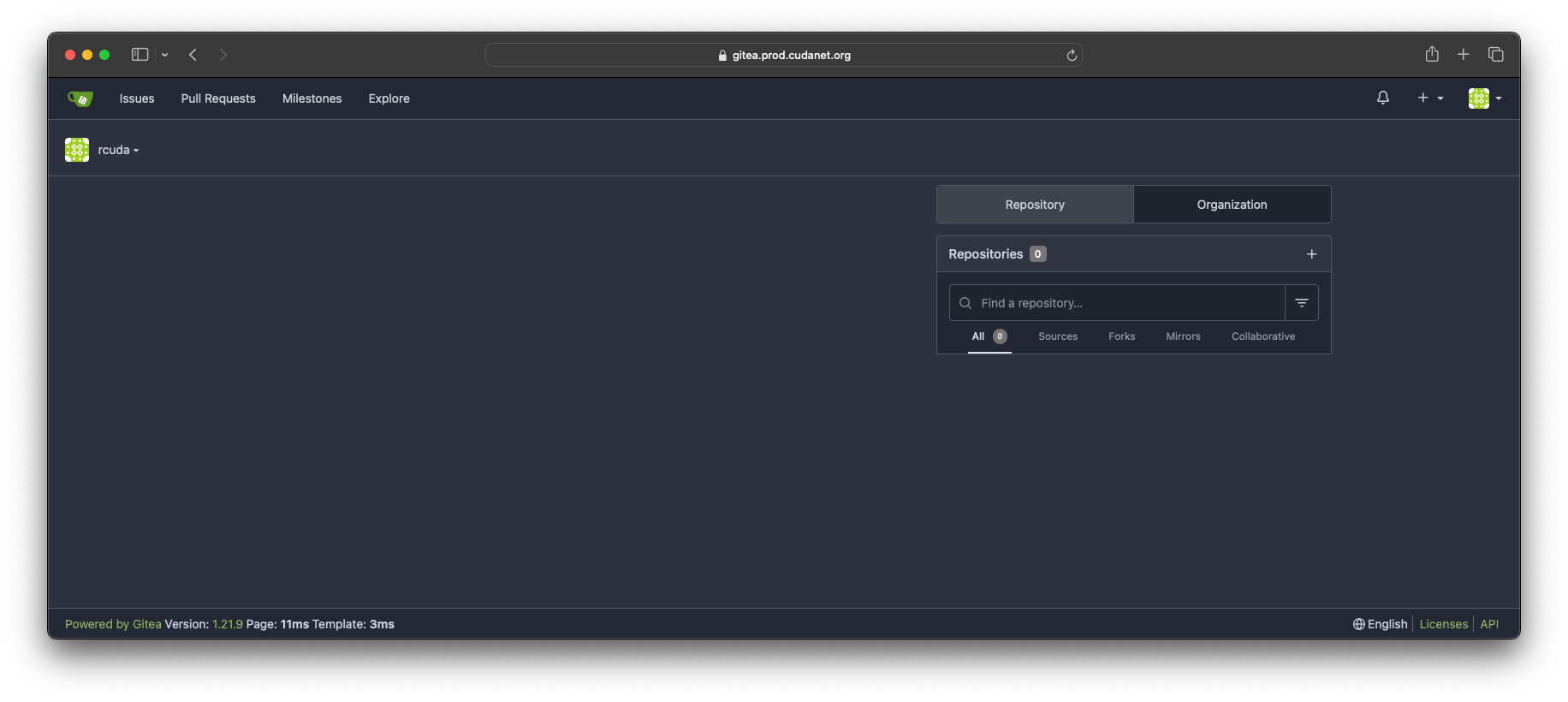

Once you've deployed your Gitea instance, it should be available at the ingress you created, e.g. gitea.prod.cudanet.org in my case. In order to access your Gitea server via the ingress, you can create a DNS alias for it that resolves to your Openshift ingress. Otherwise, you can skip creating the ingress and just hit the nginx VIP. Either way works.
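To confirm that both listeners on the nginx pod actually answer, something like the following could be used. This is a minimal sketch, not part of the original deployment: the function names are made up for illustration, the SSH user `git` is an assumption about the Gitea container defaults, and the SSH check has to target a name that resolves to the nginx LoadBalancer VIP, since SSH does not pass through the Openshift HTTP ingress.

```shell
# Hypothetical helper names, for illustration only.

# svc_fqdn SERVICE NAMESPACE
# Print the in-cluster FQDN of a service, i.e. the long form of the
# short name used in proxy_pass and in the stream upstream.
svc_fqdn() {
  printf '%s.%s.svc.cluster.local\n' "$1" "$2"
}

# check_https HOST
# Print the HTTP status code returned by the HTTPS listener.
check_https() {
  curl -sS -o /dev/null -w '%{http_code}\n' "https://$1/"
}

# check_ssh HOST [PORT]
# Attempt a non-interactive SSH login through the nginx stream proxy.
# The user name "git" is an assumption; adjust it to your deployment.
check_ssh() {
  ssh -T -o BatchMode=yes -p "${2:-22}" "git@$1"
}
```

For example, `check_https gitea.prod.cudanet.org` should print a 2xx or 3xx status code, and `check_ssh gitea.cudanet.org` should be answered by Gitea rather than by a shell. Even a "Permission denied (publickey)" reply shows that the TCP path through nginx works.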

Once you've created the appropriate DNS record, access the Gitea web UI and complete the initial setup. You can accept the default values and click save

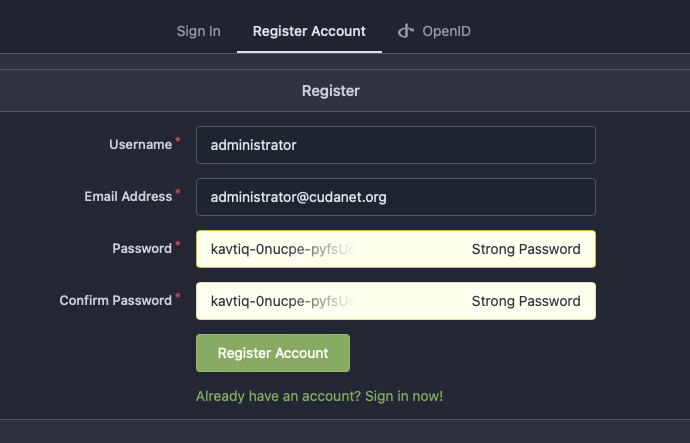

After a few minutes while the database is populated, you'll be redirected to the login screen. You'll need to register your first user, which will be the local administrator.

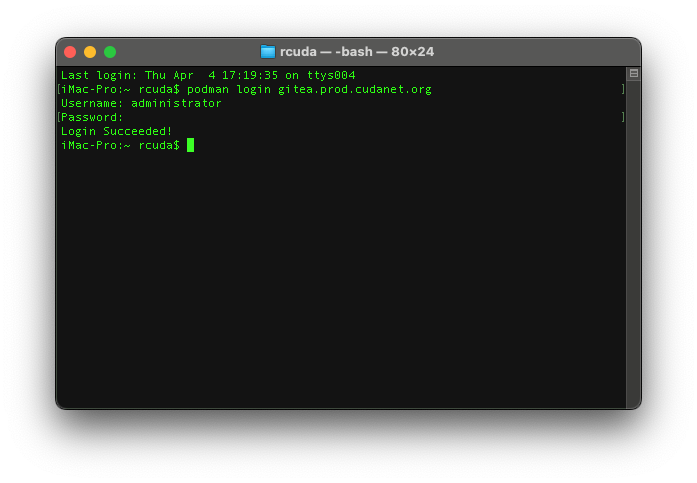

At this point your Gitea server is ready to use. You might want to verify that you can access the container registry as well, using your new admin account.

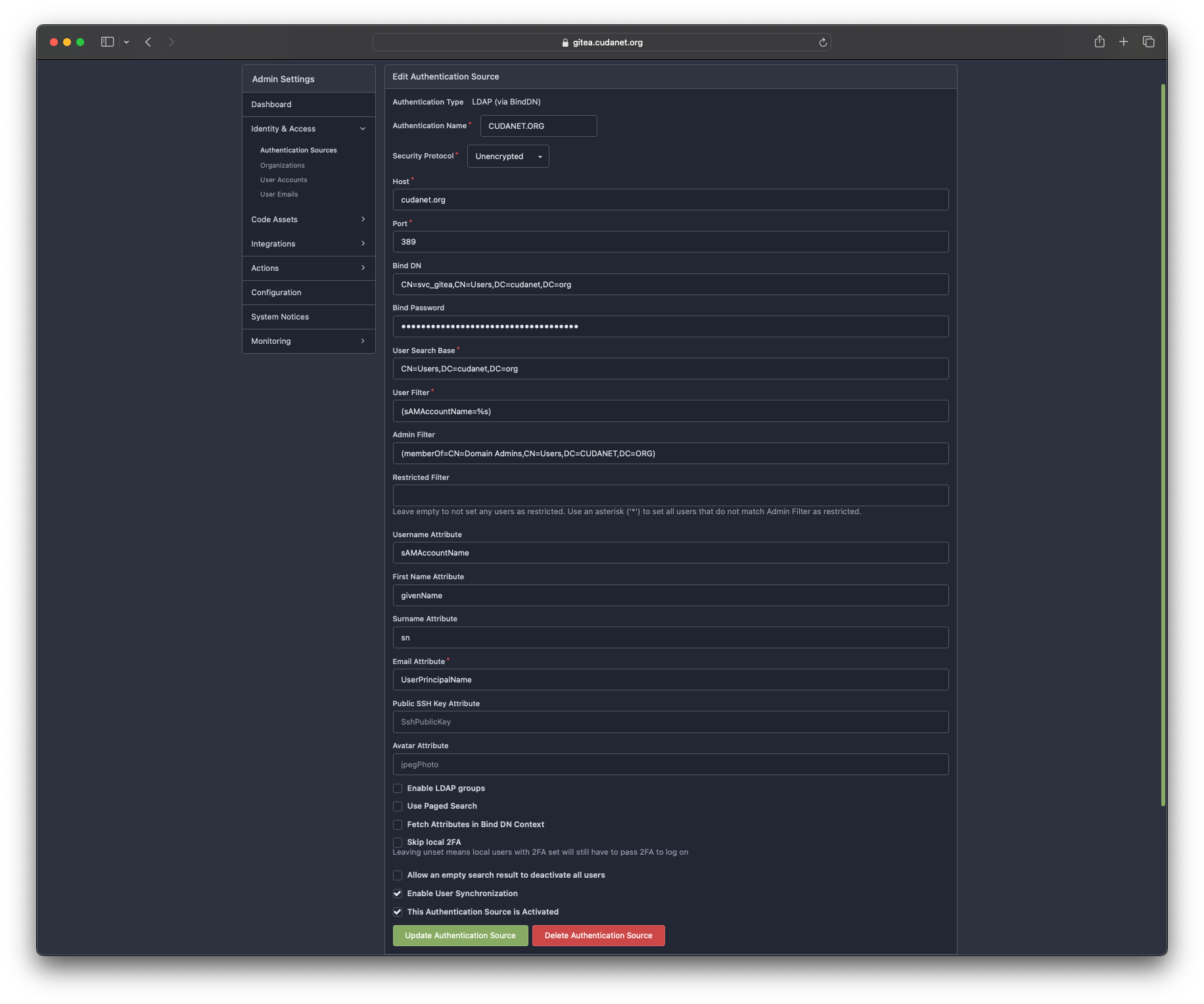
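One way to exercise the registry from the command line is to log in and push a throwaway image. The sketch below assumes podman is installed, that Gitea's registry uses the HOST/OWNER/IMAGE:TAG naming scheme, and that busybox from Docker Hub is an acceptable test image; the function names and the smoke-test tag are hypothetical.

```shell
# Hypothetical helper names, for illustration only.

# image_ref HOST OWNER IMAGE TAG
# Build a registry reference of the form HOST/OWNER/IMAGE:TAG.
image_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# registry_smoke_test HOST OWNER
# Log in (prompts for credentials), then pull a small public image,
# retag it for the Gitea registry and push it.
registry_smoke_test() (
  host="$1"
  owner="$2"
  dest="$(image_ref "$host" "$owner" busybox smoke-test)"
  podman login "$host" &&
    podman pull docker.io/library/busybox:latest &&
    podman tag docker.io/library/busybox:latest "$dest" &&
    podman push "$dest"
)
```

Running `registry_smoke_test gitea.prod.cudanet.org administrator` should leave a busybox package visible under the administrator account, which can be deleted again afterwards.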

You may also want to set up LDAP authentication. Click on your avatar (top right corner), select 'Site Administration' from the drop down menu, navigate to 'Identity & Access' > 'Authentication Sources' and click on 'Add Authentication Source'.

For Active Directory, these are the settings that worked for me.

Authentication Type: LDAP via BindDN

Authentication Name: CUDANET.ORG

Security Protocol: Unencrypted

Host: cudanet.org

Port: 389

Bind DN: CN=svc_gitea,CN=Users,DC=CUDANET,DC=ORG

Bind Password: <password>

Users Search Base: CN=Users,DC=CUDANET,DC=ORG

User Filter: (sAMAccountName=%s)

Admin Filter: (memberOf=CN=Domain Admins,CN=Users,DC=CUDANET,DC=ORG)

Restricted Filter: leave blank

Username Attribute: sAMAccountName

First Name Attribute: givenName

Surname Attribute: sn

Email Attribute: UserPrincipalName

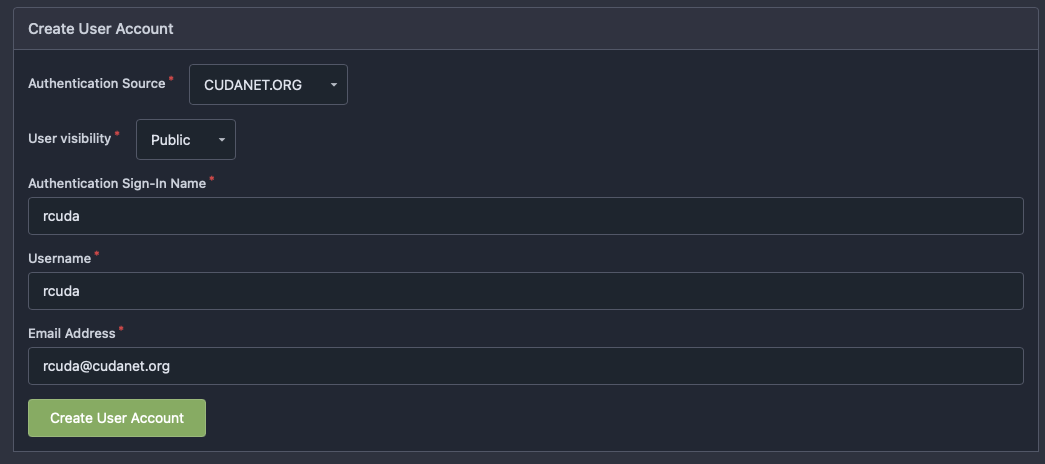

Then, once you've created your LDAP authentication source, you need to register your user account(s). I wish that Gitea would automatically register LDAP users, but I can overlook that. Go to 'User Accounts' and select 'Create User Account'.

Verify that you can log in with your LDAP credentials.

Repeat the process for as many LDAP users as you need.
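If a login fails, it can help to test the bind DN, search base and filter outside of Gitea first. The following is a rough sketch that assumes the OpenLDAP client tools (ldapsearch) are installed and reuses the values from the settings above; the function names and the example user jdoe are hypothetical.

```shell
# Hypothetical helper names, for illustration only.

# user_filter USERNAME
# Print the User Filter from the settings above with %s substituted.
user_filter() {
  printf '(sAMAccountName=%s)\n' "$1"
}

# ldap_lookup USERNAME
# Bind as the service account (prompts for its password) and look up
# one user, returning the attributes Gitea is configured to read.
ldap_lookup() {
  ldapsearch -x \
    -H "ldap://cudanet.org:389" \
    -D "CN=svc_gitea,CN=Users,DC=CUDANET,DC=ORG" -W \
    -b "CN=Users,DC=CUDANET,DC=ORG" \
    "$(user_filter "$1")" \
    sAMAccountName givenName sn userPrincipalName memberOf
}
```

Calling `ldap_lookup jdoe` should return exactly one entry. If it returns none, the search base or filter is the likely culprit rather than Gitea itself.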

Migration

Now comes the tricky part. Migrating from Gitlab to Gitea consists of two major parts: migrating your git repos and migrating your container registry. Migrating git repos is actually pretty easy. I used this project: https://github.com/h44z/gitlab_to_gitea

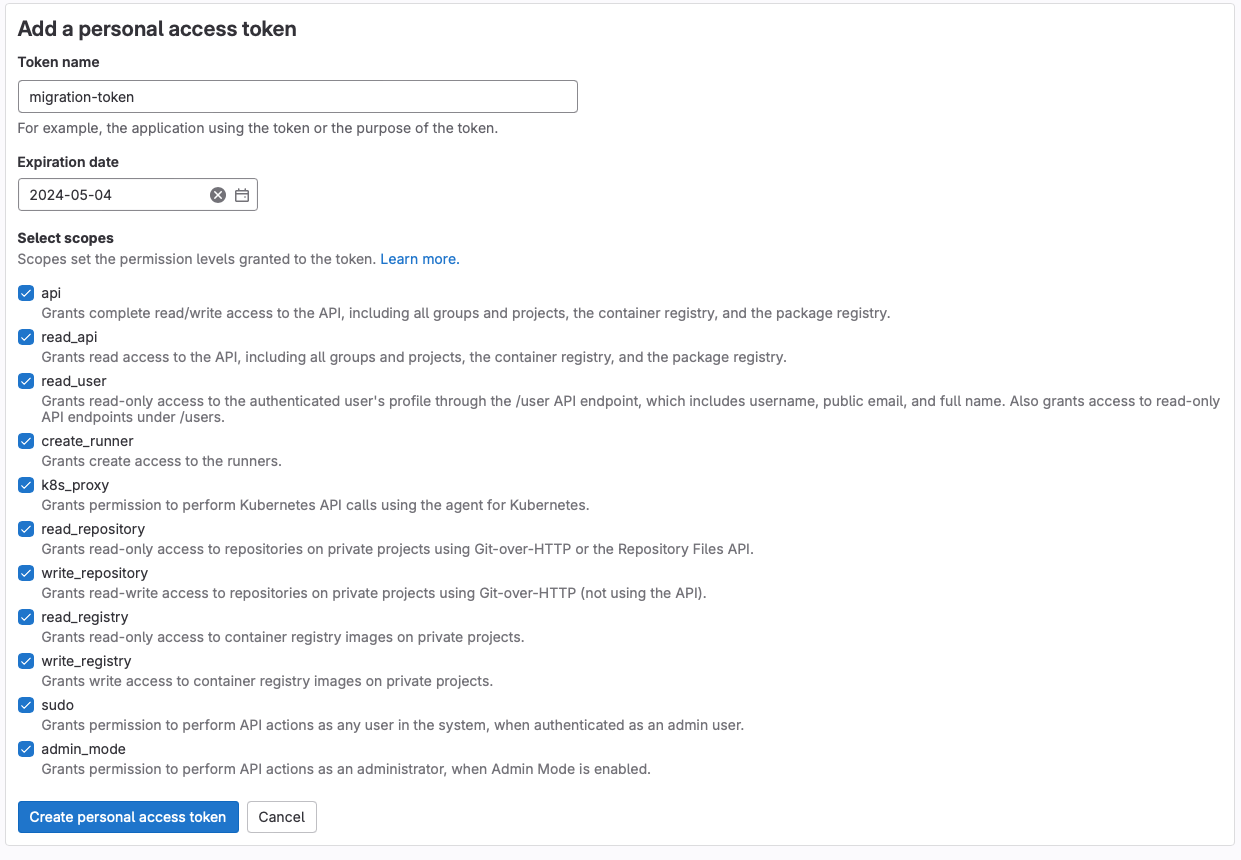

The process is quite simple. You need to create an access token with an administrator account on both your Gitlab server and your Gitea server. On Gitlab, log in as an admin user. Click on your avatar (top left) and select Preferences, then navigate to 'Access Tokens' and select 'Add new token'. Select all scopes and click 'Create personal access token'.

Copy the token somewhere safe because you'll never be able to see it again.

On Gitea, click on your avatar (top right) and select 'Settings'. Select 'Applications' from the menu to the left and generate a new token. Select 'Read and Write' token permissions for all fields and click 'Generate Token'. Again, copy this token somewhere safe because it will never be seen again.
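Before feeding the tokens to the migration tool, it may be worth checking that each one authenticates. The sketch below assumes curl is available and uses each server's "current user" endpoint (api/v4/user on Gitlab, api/v1/user on Gitea); the function names are hypothetical.

```shell
# Hypothetical helper names, for illustration only.

# api_url BASE PATH
# Join a base URL and a path without doubling or dropping the slash.
api_url() {
  printf '%s/%s\n' "${1%/}" "${2#/}"
}

# check_gitlab_token GITLAB_URL TOKEN
# Print the JSON description of the user that owns the Gitlab token.
check_gitlab_token() {
  curl -fsS --header "PRIVATE-TOKEN: $2" "$(api_url "$1" api/v4/user)"
}

# check_gitea_token GITEA_URL TOKEN
# Print the JSON description of the user that owns the Gitea token.
check_gitea_token() {
  curl -fsS --header "Authorization: token $2" "$(api_url "$1" api/v1/user)"
}
```

Both functions exit non-zero on an HTTP error because of curl's -f flag, so a rejected token shows up immediately instead of halfway through the migration.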

Before we actually kick off the migration, I found that there was one more thing that needed to be done to prepare Gitea to import the data. You need to add the following to your gitea app.ini

[migrations]

ALLOW_LOCALNETWORKS = true

One way to make the change is to copy app.ini out of the pod, append the section, copy it back and restart the pod:

POD=$(oc get pod | grep gitea | cut -d ' ' -f 1)

oc cp $POD:/etc/gitea/app.ini ./app.ini

cat << EOF >> app.ini

[migrations]

ALLOW_LOCALNETWORKS = true

EOF

oc cp app.ini $POD:/etc/gitea/app.ini

oc delete pod $POD

Then, with our app.ini file patched, we can start the migration. You'll need podman and podman-compose installed.

git clone https://github.com/h44z/gitlab_to_gitea

cd gitlab_to_gitea

Then we need to create a .env file with contents like the following. NOTE: don't forget to escape any special characters in your password with a backslash.

GITLAB_URL=https://gitlab.cudanet.org

GITLAB_TOKEN=<gitlab token>

GITLAB_ADMIN_USER=administrator

GITLAB_ADMIN_PASS=password

GITEA_URL=https://gitea.prod.cudanet.org

GITEA_TOKEN=<gitea token>

MIGRATE_BY_GROUPS=1

GITLAB_ARCHIVE_MIGRATED_PROJECTS=0

Then, with the .env file populated, run

podman compose build

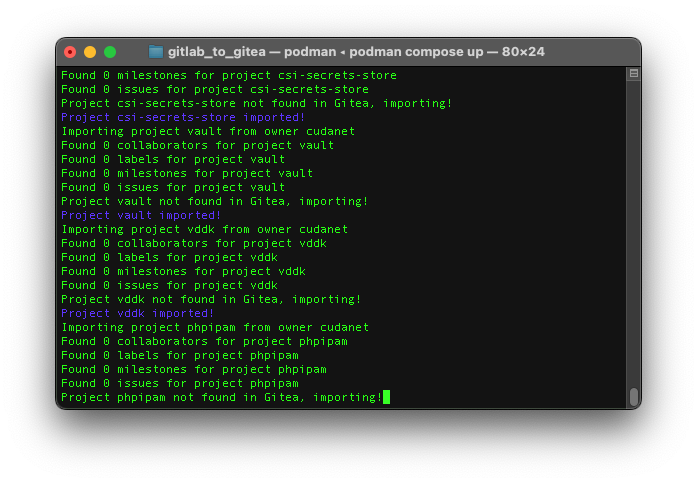

podman compose up

After a few minutes while the pod spins up, you should see output like this

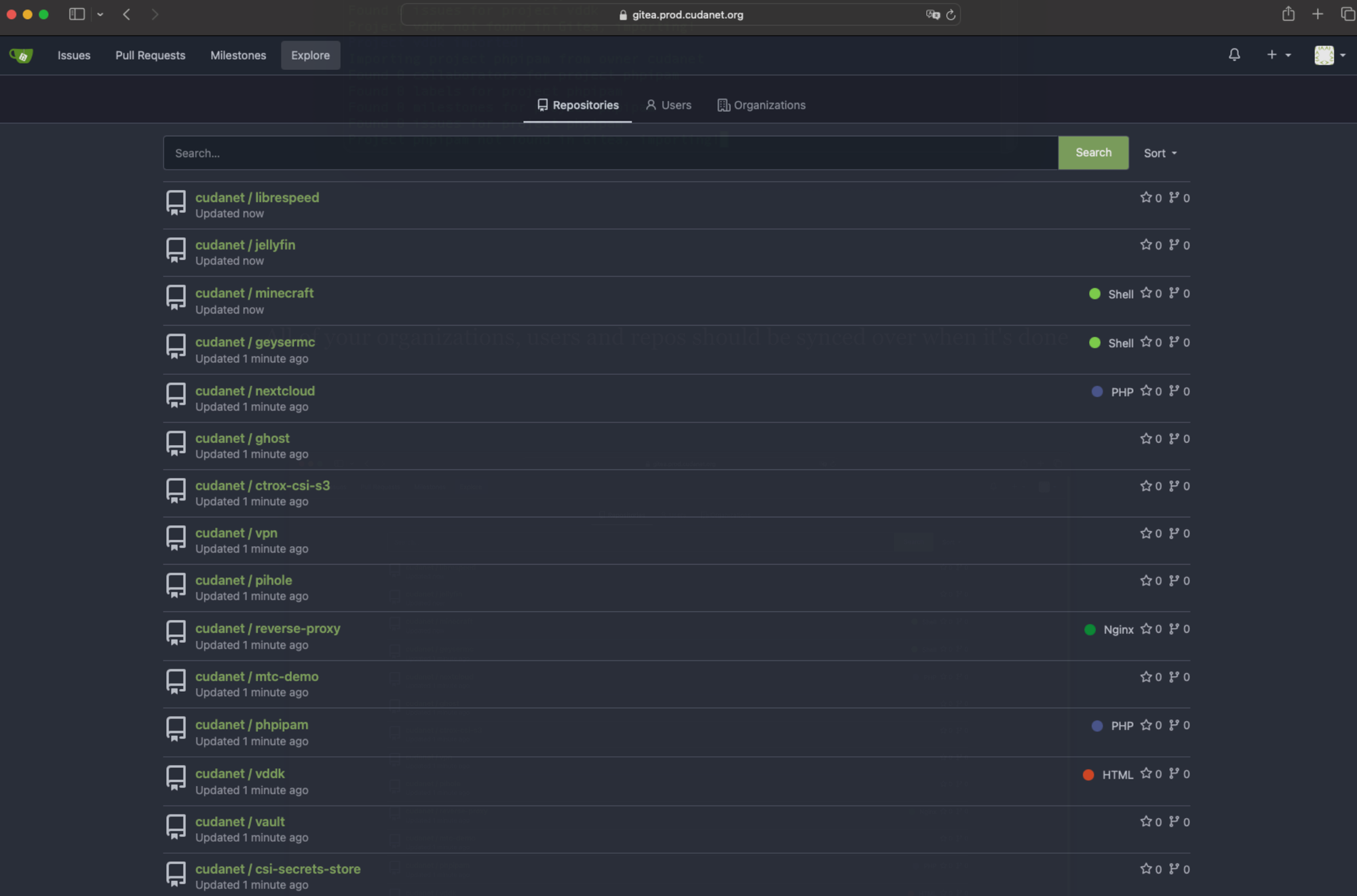

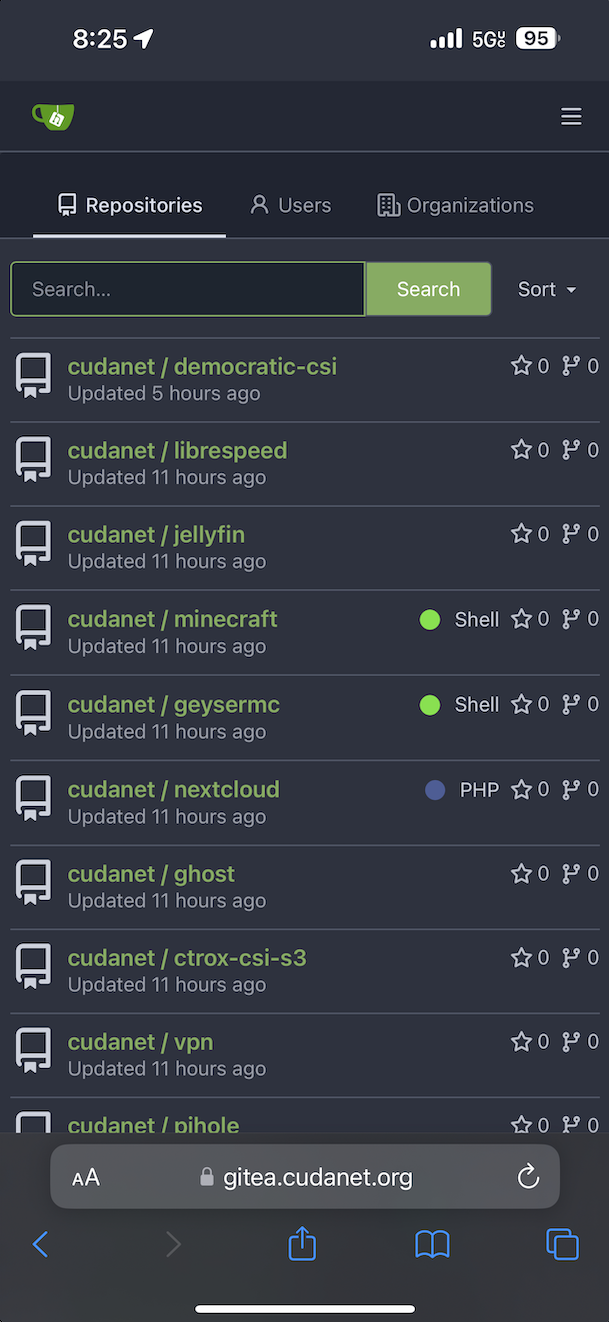

All of your organizations, users and repos should be synced over when it's done.

You will want to take a minute to double check that all your repos made it over. I found that in my migration, one repo was missed. Not a huge deal, just do a git clone from Gitlab and push it to your Gitea instance.
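For a repo that was missed, a plain clone only checks out one branch, so pushing from it can leave other branches behind. A minimal sketch of one alternative is a bare clone followed by pushing all branches and tags. The function name is hypothetical, and it assumes the empty destination repo already exists on Gitea and that your credentials for both servers are already configured.

```shell
# Hypothetical helper name, for illustration only.

# copy_repo SRC_URL DST_URL
# Bare-clone SRC into a temporary directory, then push every branch
# and every tag to DST. Runs in a subshell so variables stay local.
copy_repo() (
  src="$1"
  dst="$2"
  workdir="$(mktemp -d)" || exit 1
  git clone --quiet --bare "$src" "$workdir/repo.git" &&
    git -C "$workdir/repo.git" push --quiet --all "$dst" &&
    git -C "$workdir/repo.git" push --quiet --tags "$dst"
  rc=$?
  rm -rf "$workdir"
  exit "$rc"
)
```

Usage would look like `copy_repo https://gitlab.cudanet.org/GROUP/REPO.git https://gitea.prod.cudanet.org/GROUP/REPO.git`, where GROUP and REPO are placeholders for the repo that was skipped.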

That was the easy part. The next part was a bit harder to figure out, mainly because Gitlab's container registry doesn't behave the same way as most do and tools like crane and skopeo can't crawl the registry to view the entire catalog. Not only that, but Gitlab's own API documentation contains a lot of incorrect information, so I had to figure out how to do this by trial and error, but was finally able to get it done with a few bash scripts.

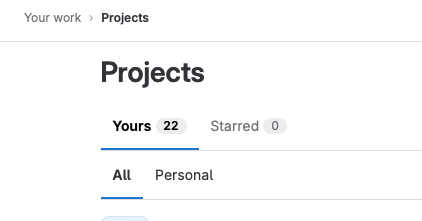

First, you're going to need skopeo installed. Next, you'll need your Gitlab token again. With the token set as $TOKEN, we can crawl the Gitlab API with a script like the one below. The loop counts through project IDs, and I set its upper bound to 22 because that's how many projects I have in Gitlab. That only works if your project IDs are contiguous; if you've ever deleted a project, the highest ID will be larger than the project count. I'm sure there's a scriptomatic way to figure out how many projects you have, but it was easier just to take a look at the web UI.

TOKEN=<gitlab token>

i=1

while [ $i -le 22 ];

do

echo $i

REPOS=$(curl -fsS --header "PRIVATE-TOKEN: $TOKEN" "https://gitlab.cudanet.org/api/v4/projects/$i/registry/repositories?per_page=100" | jq -r '.[].id')

for r in $REPOS;

do curl -fsS --header "PRIVATE-TOKEN: $TOKEN" "https://gitlab.cudanet.org/api/v4/projects/$i/registry/repositories/$r/tags?per_page=100" | jq -r '.[].location' >> output.txt;

done

((i++));

done

This will spit out a file called output.txt with contents like this

registry.cudanet.org/cudanet/democratic-csi/busybox:1.32.0

registry.cudanet.org/cudanet/democratic-csi/csi-grpc-proxy:v0.5.3

registry.cudanet.org/cudanet/democratic-csi/democratic-csi:latest

...

registry.cudanet.org/cudanet/gitea/gitea:1.21.9-rootless

registry.cudanet.org/cudanet/gitea/mariadb:11.4.1

registry.cudanet.org/cudanet/gitea/nginx:1.25

So now all we need to do is write a script that will read the image list from output.txt and push the images to our Gitea registry.

PASSWORD=<gitea admin password>

IMG=$(cat output.txt)

for i in $IMG;

do

echo "Source = $i"

d=$(echo $i | sed 's/registry/gitea.prod/g')

echo "Destination = $d";

skopeo copy docker://$i docker://$d --dest-creds=administrator:$PASSWORD

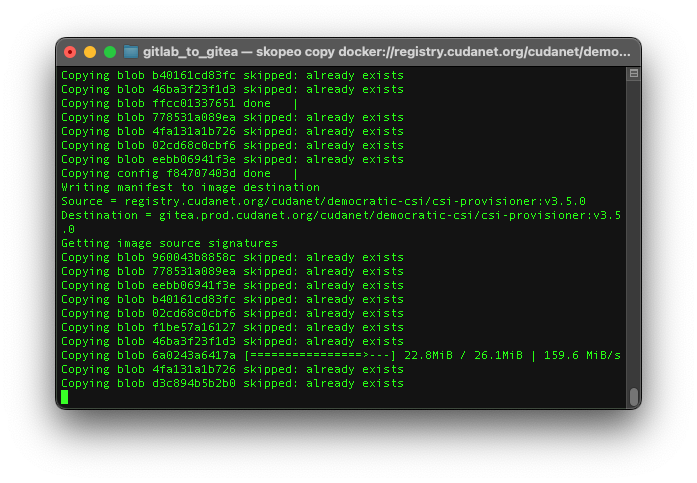

doneAnd then just sit back and watch the magic happen

This part may take a while, depending on how many container images there are and how big they are. Once the script is done, you'll probably want to double check that all of your container images made it over. I didn't find any that the script missed when I ran it.
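The crawl-and-copy steps above can also be packaged as small functions that are easier to re-run. This is only a sketch under a few assumptions: curl, jq and skopeo are installed, the token can see every project, and you have no more than 100 projects (Gitlab's API returns 20 items per page by default and at most 100, and this sketch reads only the first page). All function names and the failed.txt file are hypothetical.

```shell
# Hypothetical helper names, for illustration only.

# project_ids GITLAB_URL TOKEN
# Print the ID of each project visible to the token (first page only).
project_ids() {
  curl -fsS --header "PRIVATE-TOKEN: $2" \
    "${1%/}/api/v4/projects?simple=true&per_page=100" | jq -r '.[].id'
}

# tag_locations
# Read a "list registry repository tags" JSON array on stdin and
# print one image location per line.
tag_locations() {
  jq -r '.[].location'
}

# dest_ref SRC_REF OLD_HOST NEW_HOST
# Swap only the leading registry host, leaving the image path alone.
# Returns non-zero if SRC_REF does not start with OLD_HOST/.
dest_ref() {
  case "$1" in
    "$2"/*) printf '%s/%s\n' "$3" "${1#"$2"/}" ;;
    *) return 1 ;;
  esac
}

# copy_images LIST_FILE OLD_HOST NEW_HOST USER:PASSWORD
# Copy every image in LIST_FILE, recording failures in failed.txt
# instead of stopping at the first error.
copy_images() {
  while IFS= read -r src; do
    [ -n "$src" ] || continue
    dst="$(dest_ref "$src" "$2" "$3")" || { echo "skipping $src" >&2; continue; }
    skopeo copy --dest-creds "$4" "docker://$src" "docker://$dst" </dev/null ||
      echo "$src" >> failed.txt
  done < "$1"
}
```

With output.txt in place, `copy_images output.txt registry.cudanet.org gitea.prod.cudanet.org "administrator:$PASSWORD"` performs the same copy as the loop above, and anything listed in failed.txt afterwards can be retried on its own.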

Cleaning up

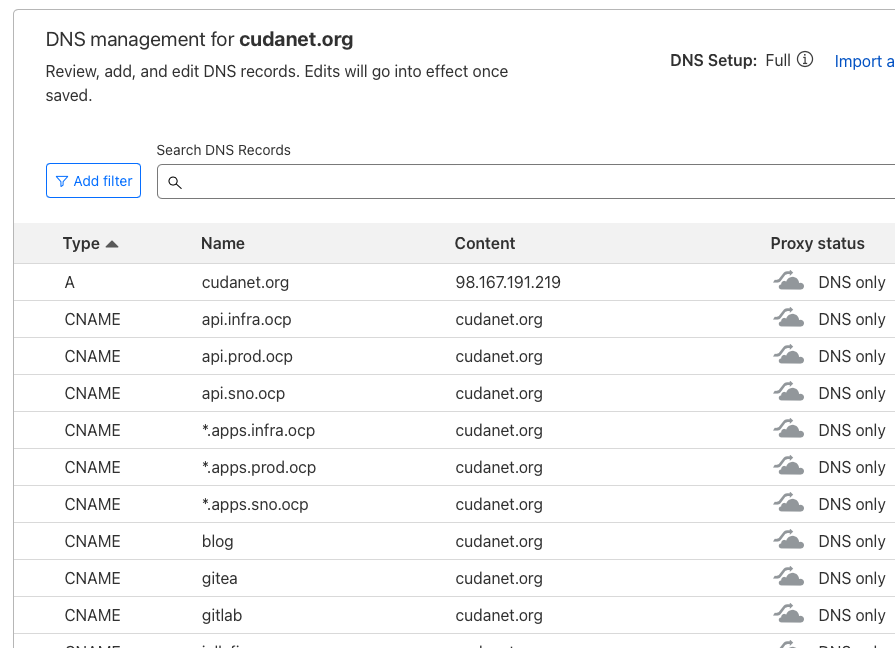

With all of my repos and container images migrated over to Gitea, I had to decide how I wanted to do the cutover. Option 1 would be to update the image: URL on every single deployment on all of my clusters. Not too hard to do, assuming you have properly designed CI/CD pipelines set up for all of your projects (I don't... I'm only partly automated). Option 2, the "band-aid" option is to create a DNS alias for your old Gitlab registry (in my case registry.cudanet.org) to the ingress route for your Gitea instance. I chose option 2. Just be aware, if you go with option 2, you'll need to do more than just update DNS, you'll also have to create another reverse proxy on your nginx pod and an ingress for the old registry URL.

Add a stanza like this to the nginx.conf configmap

server {

listen 443 ssl;

ssl_certificate /etc/nginx/fullchain.pem;

ssl_certificate_key /etc/nginx/privkey.pem;

server_name registry.cudanet.org;

location / {

proxy_set_header Host $host:$server_port;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto "https";

proxy_pass http://gitea:3000;

}

}

Don't forget to request a new cert to cover both your gitea and registry ingresses.

Once you're done, you can check if everything is working by deleting a pod on Openshift that was previously deployed from your Gitlab registry, or just deploying a new app pointing to one of the old registry.cudanet.org URLs for the image. If the pod spins up, then you're good to go! Feel free to power down your Gitlab server, but I'd hold off on deleting it for a few days until you're sure that everything is good. I'm still assuming I'm going to run into some snags here and there as new pods spin up, so we'll see. But so far, so good!
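The same check can be done without touching a running workload. The sketch below assumes curl and skopeo are installed and that you have already run skopeo login against both host names if your images are private. It relies on the standard registry API behaviour of answering 200 or 401 on /v2/, and on skopeo inspect reporting the same digest for an image under both names. The function names are hypothetical.

```shell
# Hypothetical helper names, for illustration only.

# registry_status_ok HTTP_CODE
# Succeed if the code is one a container registry normally returns
# on /v2/ (200 when authenticated, 401 when credentials are needed).
registry_status_ok() {
  case "$1" in
    200|401) return 0 ;;
    *) return 1 ;;
  esac
}

# check_registry_alias HOST
# Succeed if https://HOST/v2/ answers like a container registry.
check_registry_alias() (
  code="$(curl -sS -o /dev/null -w '%{http_code}' "https://$1/v2/")" || exit 1
  registry_status_ok "$code"
)

# same_image REF_A REF_B
# Succeed if both references resolve to the same manifest digest.
same_image() (
  a="$(skopeo inspect --format '{{.Digest}}' "docker://$1")" || exit 1
  b="$(skopeo inspect --format '{{.Digest}}' "docker://$2")" || exit 1
  [ "$a" = "$b" ]
)
```

For example, `check_registry_alias registry.cudanet.org` followed by `same_image registry.cudanet.org/cudanet/gitea/nginx:1.25 gitea.prod.cudanet.org/cudanet/gitea/nginx:1.25` should both succeed once the DNS alias and the extra server block are in place.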

Eventually I will get around to updating all the deployment manifests to use the Gitea URL and clean up the DNS alias, but that day is not going to be today. I've performed enough open heart surgery on my lab lately, I don't want to risk really breaking something.

External Access

I can't possibly go over every single way that you could expose your Gitea instance outside your network, but the thing they all have in common is that something is reverse-proxying traffic, and that thing needs to be port forwarded on the appropriate ports outside your network. In my case, my Openshift cluster Ingress is externally accessible, so any route I create on Openshift is accessible from outside my network, assuming I have created a corresponding DNS record on my external DNS provider (Cloudflare).

You could also run your reverse proxy any number of other ways. HAProxy is a common one I see people use. You could spin up a standalone nginx server on like a Raspberry Pi and let that handle it. I've personally been playing around with the idea of moving to Traefik running outside of my Openshift clusters.

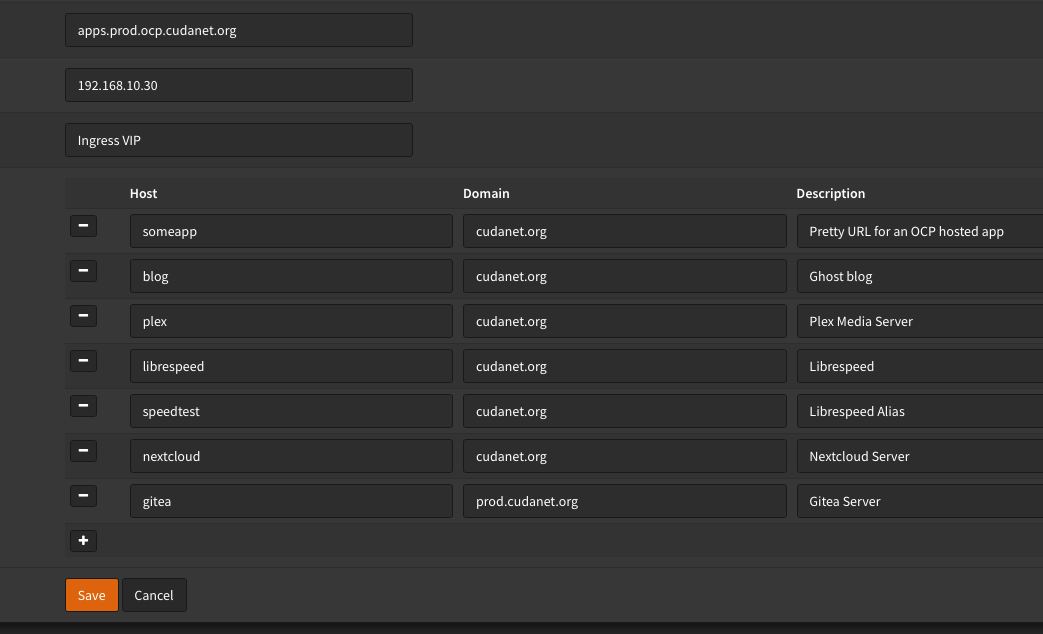

If, like me, your Openshift ingress is port forwarded, then all you need to do is use the nginx-ingress.yaml I provided and make sure that you have proper SSL termination. Then make the corresponding DNS entry on your external DNS provider.

Then you can check that you have access from your phone (not connected to your Wifi... duh).

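Oh, and one more thing. The directive client_max_body_size 1000M; in the nginx.conf file is very important. Nginx's default limit for a request body is 1 MB, and anything larger is rejected with a 413 (Request Entity Too Large) error. Without raising it, you'll run into issues pushing container images to your registry, especially if you're working outside your network.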